D7. User Interfaces in VR

Learning Outcomes

- Describe the role of spatial UIs in VR environments. Before class, review how immersive UIs support interaction, control, and feedback, drawing on engineering examples like CNC HMIs, teach pendants, and super displays in the XFactory environment.

- Explain how VR UIs function as input systems. Ahead of the session, study how components such as buttons, toggles, and sliders can trigger actions like animations or custom scripts, focusing especially on the CNC HMI example.

- Explain how VR UIs function as output systems. To prepare, explore how UIs convey system status, warnings, and environmental data, using the logistics scale example that outputs weight and visual alerts as a reference.

- Apply best practices for VR UI design. In advance, identify 2–3 key principles—such as clarity, performance, or ergonomic design—and reflect on how they improve trust, comfort, and usability in VR engineering contexts.

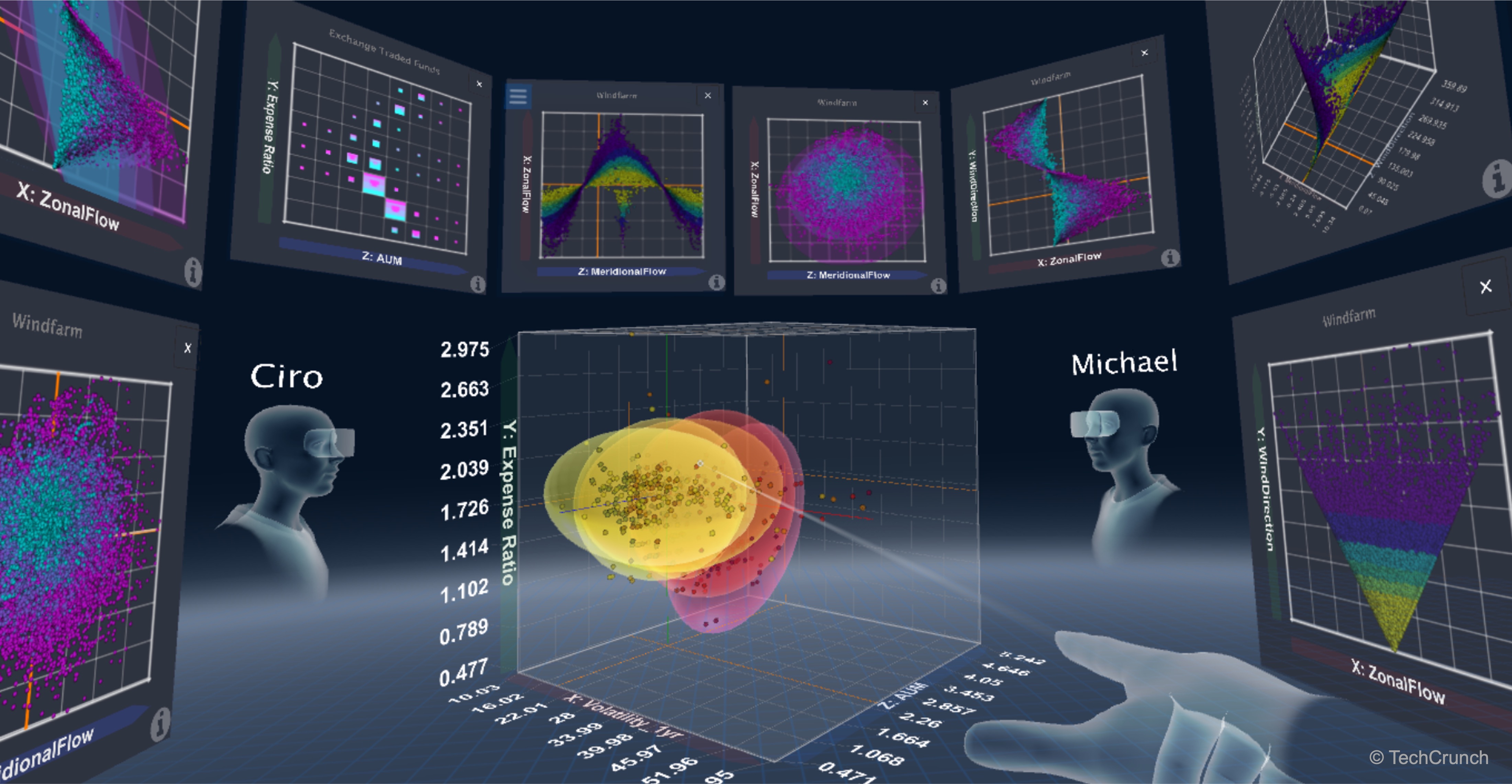

Role of UI in VR

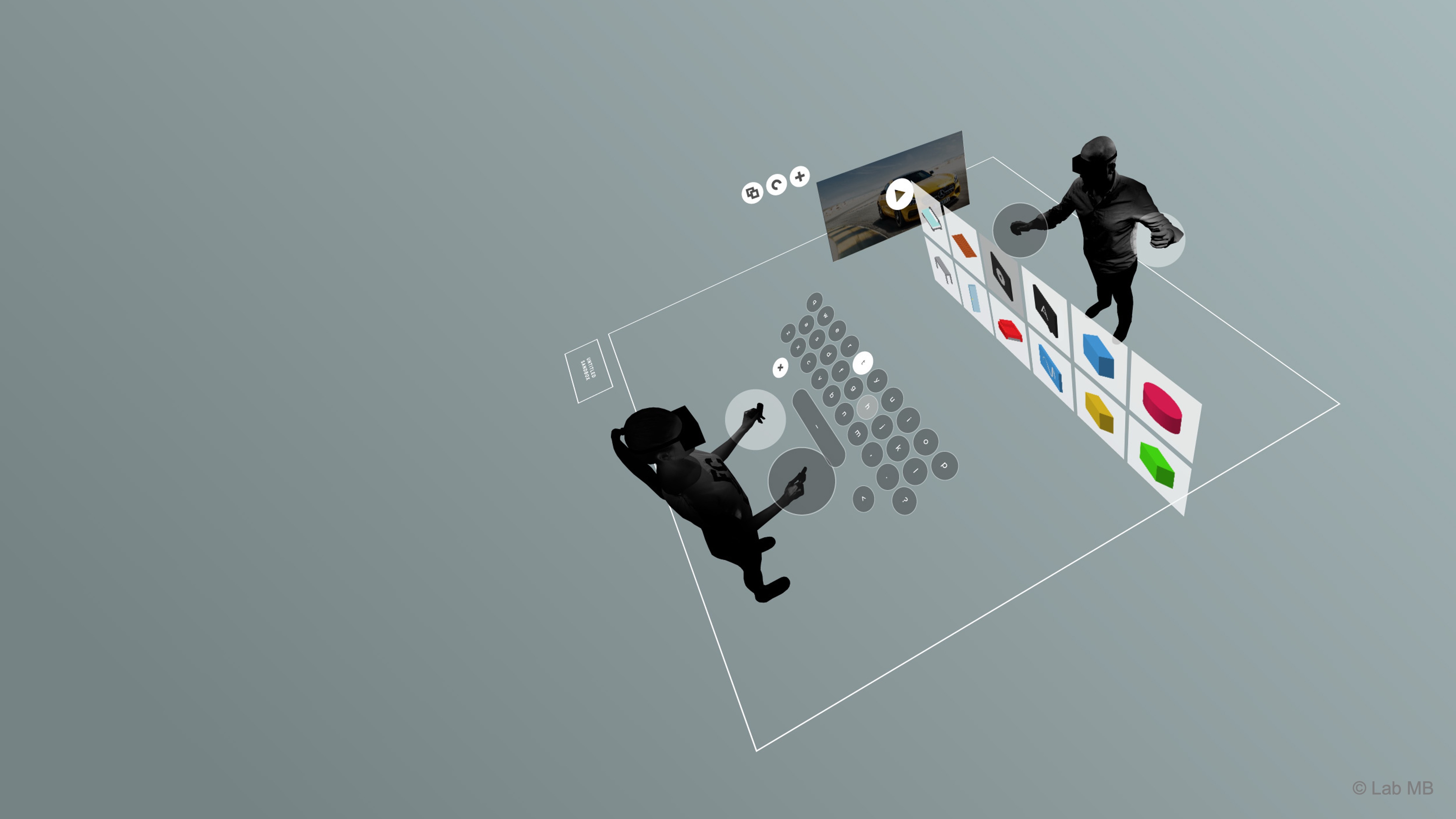

User interfaces (UIs) in VR form the connection between the user and the virtual environment, enabling inputs that influence the system and providing outputs that communicate feedback or change. Examples include virtual control panels for machines, immersive dashboards for real-time monitoring, and collaborative 3D workspaces. Well-designed VR UIs create intuitive, spatial interactions that let users engage with digital representations of real systems naturally and effectively. Core Unity UI concepts include:

- User Interface (UI): In VR, a UI is a spatial, interactive element within the 3D world, functioning as both input and output. For example, in XFactory, the robot teach pendant acts as a floating tablet beside the UR10e robot. Users interact to start sequences or jog joints (input), and the interface responds through visual highlights, sound cues, or haptic feedback (output).

- World Space UI: A UI rendered directly in 3D space like any other scene object. This approach supports natural interaction (input) and visible or sensory response (output). In XFactory, the CNC HMI appears as a wall-mounted panel: users touch buttons or sliders to control processes (input), and the panel displays live data such as spindle speed or system status (output). World space canvases typically use the `Tracked Device Graphic Raycaster` component to receive events from XR interactors such as rays or pokes.

- Screen Space UI: A UI attached to the user’s view rather than the world. While less immersive, it is useful for persistent output elements like status overlays, calibration instructions, or safety warnings that must remain visible regardless of orientation.

- XR Ray Interactor: A component that projects a laser-style ray from a controller or hand, allowing users to interact with UI elements from a distance. It provides input by selecting buttons, sliders, or menus, and gives output through responsive animations, sound, and visual states. In the curved display of the exhibit station, ray interaction allows users to browse data, play tutorials, or filter metrics with minimal movement.

- Event System: Unity’s framework that routes user actions to UI elements. In VR, it uses the `XR UI Input Module`, which integrates with the XR Interaction Toolkit and the new Input System. This ensures that controller or hand interactions, whether via raycasting or direct touch, trigger familiar UI events like `OnClick` or `OnValueChanged`. In XFactory, when the user presses the “Emergency Stop” button (input), the system displays alert indicators and initiates shutdown sequences (output).
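As a minimal sketch of how those UI events can reach a script, the component below subscribes to a button's `onClick` and a slider's `onValueChanged` events. The class name `UiEventLogger` and both field names are hypothetical and not part of XFactory; the sketch assumes the controls sit on a canvas that is already set up for XR input.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical example component: class and field names are illustrative only.
public class UiEventLogger : MonoBehaviour
{
    [SerializeField] private Button emergencyStopButton; // assign in the Inspector
    [SerializeField] private Slider feedRateSlider;      // assign in the Inspector

    private void OnEnable()
    {
        // Subscribe in code; the same hookup can be done in the Inspector.
        if (emergencyStopButton != null)
            emergencyStopButton.onClick.AddListener(HandleEmergencyStop);
        if (feedRateSlider != null)
            feedRateSlider.onValueChanged.AddListener(HandleFeedRateChanged);
    }

    private void OnDisable()
    {
        // Unsubscribe so the handlers are not registered twice on re-enable.
        if (emergencyStopButton != null)
            emergencyStopButton.onClick.RemoveListener(HandleEmergencyStop);
        if (feedRateSlider != null)
            feedRateSlider.onValueChanged.RemoveListener(HandleFeedRateChanged);
    }

    private void HandleEmergencyStop()
    {
        Debug.Log("Emergency Stop pressed");
    }

    private void HandleFeedRateChanged(float value)
    {
        Debug.Log($"Feed rate slider changed to {value:F2}");
    }
}
```

The handlers do not need to know whether the event came from a ray, a poke, or a mouse click in the editor; the event system delivers the same callback in each case.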

Key Concepts

- UI as Interaction: In VR, UIs act as the primary interface for both command and communication. Users perform deliberate actions, such as pressing buttons, moving sliders, or pointing with ray interactors, to control machines or change system states (input). In return, the environment responds with visual, auditory, or haptic cues that confirm actions and display current system status (output). For instance, the robot teach pendant lets users jog joints or start routines, while the CNC HMI panel visually updates spindle speed or alerts after each user action.

- Spatial Context: Because UIs exist in 3D space, their placement and scale must support depth perception and movement. Interfaces like the robot teach pendant sit within comfortable reach, while large displays such as the exhibit station’s curved screen are positioned for clear visibility from a distance, encouraging natural head and body motion.

- Feedback and Responsiveness: Every user action should trigger immediate, multimodal feedback. When users press “Start Spindle” on the CNC HMI, a sound plays, the button animates, and the system display updates. This synchronized response reinforces cause and effect, making interactions feel tangible and reliable.

- Visual Integration: UI design should blend with the virtual environment’s logic and aesthetics. Industrial panels use realistic frames, materials, and colors that match the surrounding machinery. Even though they are interactive, these panels maintain immersion by looking like part of the physical world.

- Ergonomics and Clarity: UI elements must accommodate hand or controller size, movement range, and comfort. Buttons on the teach pendant are large and well spaced to prevent accidental touches, while critical controls like “Emergency Stop” use clear color and position cues. Consistent button styles, icons, and text across all stations reduce confusion and make the system intuitive to navigate.

Design Principles

- Leverage Spatial Interaction: Design interfaces that take advantage of VR’s 3D nature. The curved display in the exhibit station surrounds the user for a panoramic data view, while the teach pendant automatically orients toward the user’s gaze for optimal readability.

- Match Interaction Method to Context: Use direct touch for close, precise tasks and ray-based interaction for distant or large-scale interfaces. The CNC panel supports tactile-style input, while the exhibit display favors ray pointing. The teach pendant flexibly switches between both modes depending on user proximity.

- Maintain Performance Efficiency: VR UI systems can be performance-heavy. Optimize by batching canvases, minimizing overdraw, and using lightweight materials. In XFactory, large displays remain responsive even under load, ensuring smooth interaction across devices.

- Ensure Accessibility and Adaptability: Interfaces should adjust to user needs. The super display includes settings for text scaling and contrast, while the teach pendant dynamically repositions to accommodate different heights or seated users, ensuring comfort and inclusivity for all participants.
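One way a panel such as the teach pendant could orient toward the user is a small "billboard" script. The sketch below is an assumption about how this might be done, not the XFactory implementation; `FaceUser`, `head`, and `turnSpeed` are hypothetical names.

```csharp
using UnityEngine;

// Hypothetical sketch: smoothly turns a world-space panel to face the user's head.
public class FaceUser : MonoBehaviour
{
    [SerializeField] private Transform head;       // e.g., the XR rig's camera; falls back to Camera.main
    [SerializeField] private float turnSpeed = 5f; // higher values turn faster

    private void LateUpdate()
    {
        Transform target = head;
        if (target == null && Camera.main != null)
            target = Camera.main.transform;
        if (target == null)
            return;

        // A world-space canvas is readable when its forward axis points away
        // from the viewer, so aim forward along head -> panel.
        Vector3 direction = transform.position - target.position;
        direction.y = 0f; // keep the panel upright (yaw only)
        if (direction.sqrMagnitude < 0.0001f)
            return;

        Quaternion goal = Quaternion.LookRotation(direction, Vector3.up);
        transform.rotation = Quaternion.Slerp(transform.rotation, goal, turnSpeed * Time.deltaTime);
    }
}
```

Restricting the rotation to yaw keeps the panel from tilting when the user looks up or down, which tends to be more comfortable for reading.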

UI as Input

In VR, UI serves as a primary means of user input, allowing users to interact with digital systems as they would with real-world objects. UI as input enables the triggering of actions such as starting a motor, toggling power, or initiating sequences. These inputs often simulate pressing physical buttons, adjusting sliders, or flipping switches in a spatial, embodied context. In engineering scenarios, UI as input can replicate real industrial control logic, where a physical interface sends signals to a machine. In VR, we map this interaction into 3D space using Unity’s XR Interaction Toolkit.

UI Input Elements

UI input elements are the core components that let users control and interact with systems in VR. They translate physical-like gestures—pressing, sliding, pointing, or typing—into digital signals that drive behavior in the virtual environment.

-

Virtual Buttons and Switches: Trigger discrete or toggle-based actions. Buttons typically perform immediate commands (e.g., “Start,” “Stop”), while switches maintain ongoing states like power, mode, or safety status.

-

Toggles and Sliders: Toggles emulate on/off switches, and sliders control continuous variables such as speed, pressure, or feed rate. Their design should allow fine adjustment and clear visual indication of current value.

-

Touch and Ray Interaction: UIs can be operated through direct touch (hand or controller contact) or remotely via ray interaction. The method depends on distance and task context—close-range panels favor touch, while large or distant displays work best with rays.

-

Dropdowns and Scrollbars: Enable selection from multiple options or navigation through extended content. In VR, these should be simplified, large, and responsive to avoid precision issues caused by controller movement or hand tracking.

-

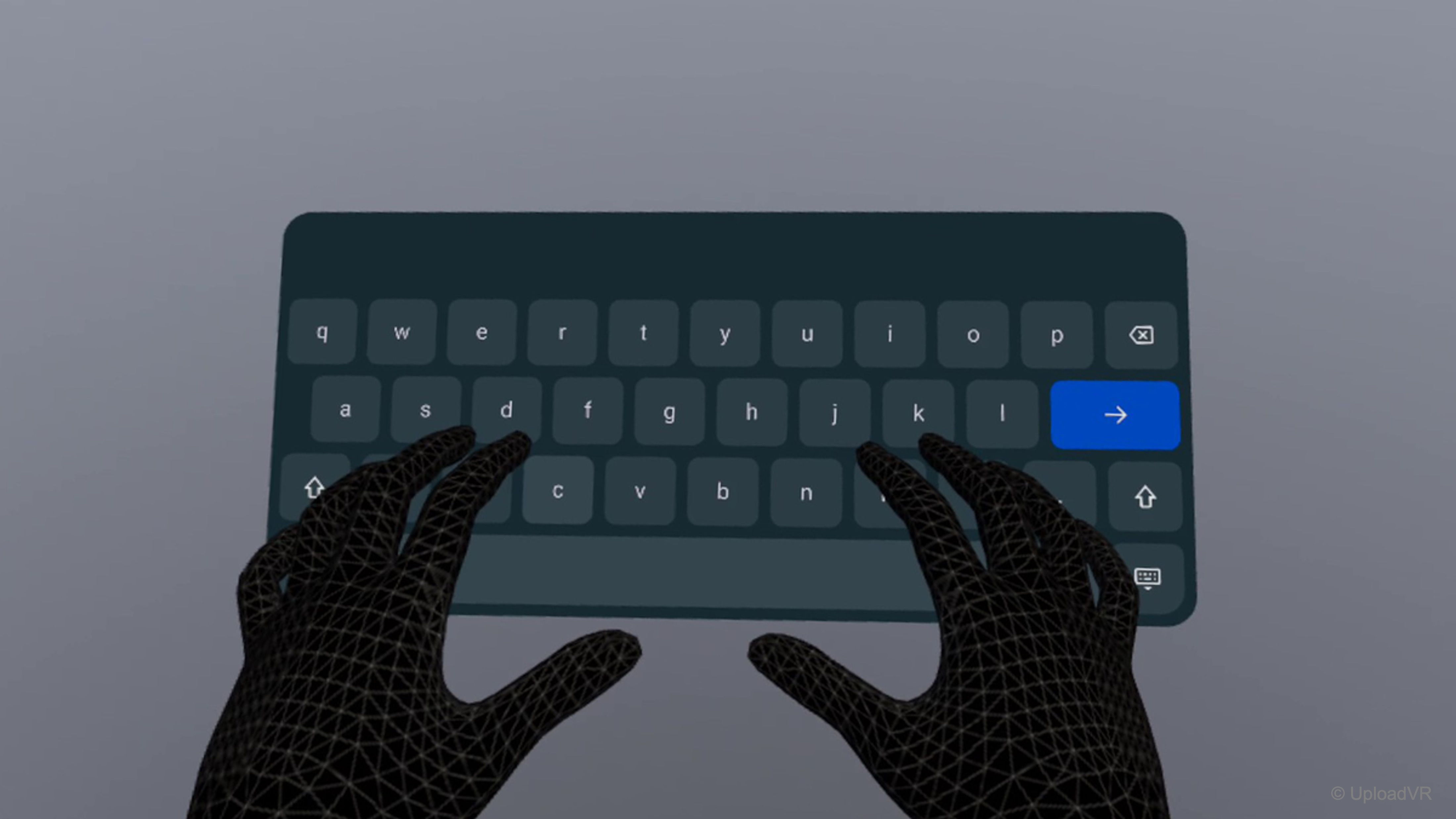

Input Fields and Virtual Keyboards: Allow users to enter text or numeric data. When selected, an on-screen virtual keyboard appears—either attached to the panel or floating near the user’s view. Keys are pressed via ray or direct touch, with visual and audio feedback confirming each input. This is useful for entering machine parameters, naming saved configurations, or typing short commands without leaving the VR context.

-

Gestural Zones and Proximity Triggers: Regions or sensors that detect user presence or motion, triggering actions without explicit button presses. For instance, stepping into an interaction zone may wake a UI, or a hand gesture may confirm an operation—reducing interface clutter and maintaining immersion.
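A proximity trigger of the kind described in the last item can be sketched with a trigger collider that shows a panel while the user is inside it. This is an illustrative sketch under stated assumptions: `ProximityWake` and its fields are hypothetical names, and the user's rig is assumed to carry a collider with a `Rigidbody` or `CharacterController` and the built-in `Player` tag.

```csharp
using UnityEngine;

// Hypothetical sketch: shows a UI panel only while the user stands inside a trigger zone.
[RequireComponent(typeof(Collider))]
public class ProximityWake : MonoBehaviour
{
    [SerializeField] private GameObject panel;          // the UI to wake (assumed to be a separate object)
    [SerializeField] private string userTag = "Player"; // assumption: the XR rig's collider uses this tag

    private void Reset()
    {
        // Called in the editor when the component is added: make the zone a trigger.
        GetComponent<Collider>().isTrigger = true;
    }

    private void Start()
    {
        if (panel != null)
            panel.SetActive(false); // start hidden until the user approaches
    }

    private void OnTriggerEnter(Collider other)
    {
        if (panel != null && other.CompareTag(userTag))
            panel.SetActive(true);
    }

    private void OnTriggerExit(Collider other)
    {
        if (panel != null && other.CompareTag(userTag))
            panel.SetActive(false);
    }
}
```

Keep this component on the zone object rather than on the panel itself, because a deactivated GameObject stops receiving trigger callbacks.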

Interactive UI Elements

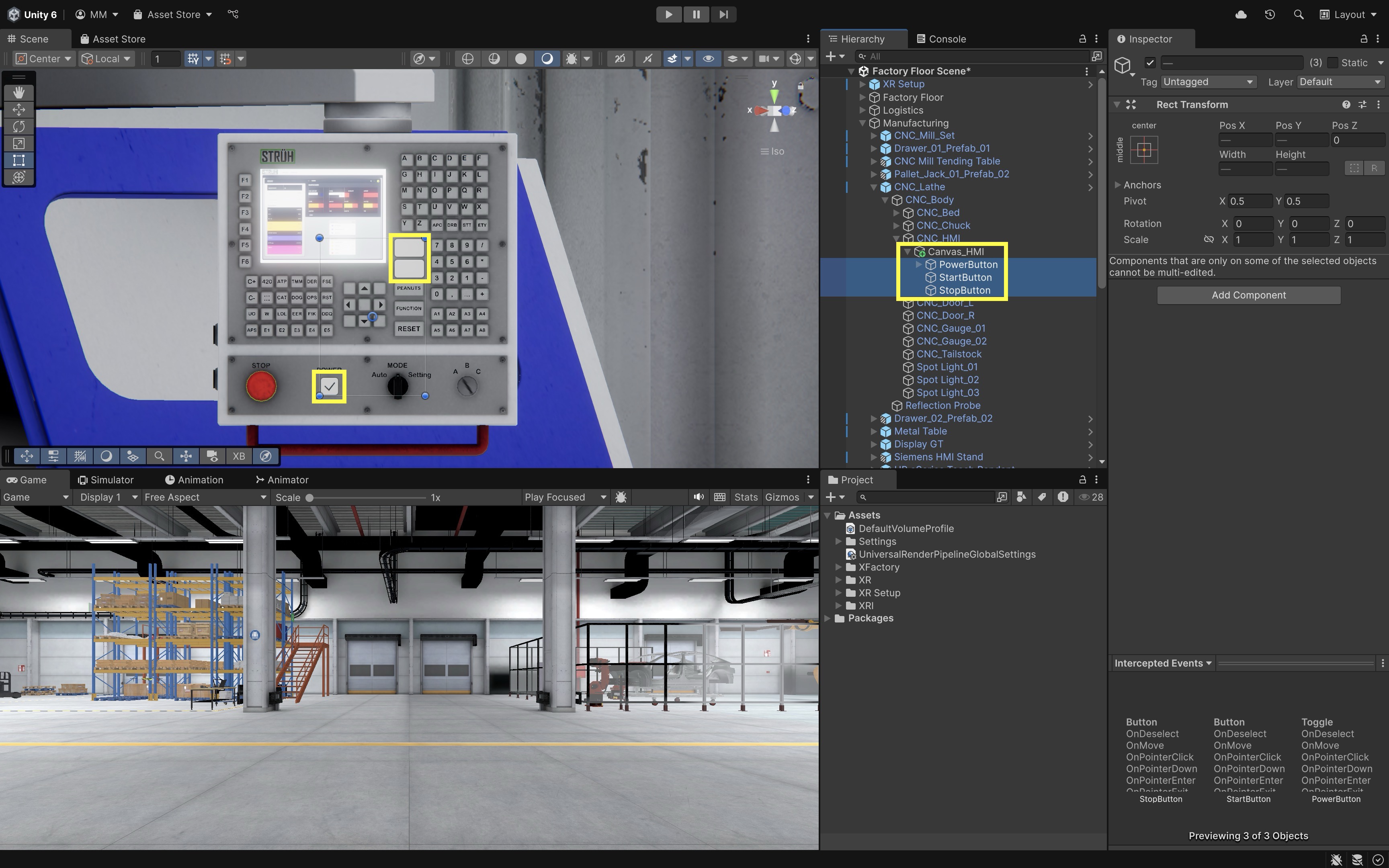

Let’s implement a fully interactive VR UI panel on the CNC lathe machine (`CNC_Lathe`) in the manufacturing station. Users will be able to POWER the machine on/off (which also plays or stops the machine sound and activates or deactivates the machine lights), START it (close the doors and rotate the chuck), and STOP it (stop the chuck and open the doors). Here is how to set up the UI input and implement this behavior.

- Configure the Machine HMI’s Canvas:

  - In the `Hierarchy`, navigate to `Manufacturing > CNC_Lathe > CNC_HMI`. This is a 3D model representing the machine’s control interface. It does not yet include a `Canvas` for UI elements.
  - With `CNC_HMI` selected, right-click and choose `XR > UI Canvas`. This adds a new child `Canvas` set up for XR interaction.
  - Rename the new object to `Canvas_HMI` for clarity.
  - Use the gizmos and `Rect Tool` to rescale and reposition the Canvas so it fits cleanly on the front face of the `CNC_HMI` model. Make sure it is not embedded inside or blocked by the mesh.
  - Confirm `Render Mode` is set to `World Space`.
  - Make sure the scene’s Event System uses the `XR UI Input Module` (remove the `Standalone Input Module` if present).
  - Make sure the Canvas includes a `Tracked Device Graphic Raycaster`.

- Create Overlay Buttons on the Canvas:

  - Under `Canvas_HMI`, add three Unity UI controls: `PowerButton` (a Toggle), `StartButton`, and `StopButton`. To do so, right-click on `Canvas_HMI` and choose `UI > Toggle` or `UI > Button - TextMeshPro`.
  - Position each UI button directly over the corresponding 3D button model in the scene.
  - Use the `RectTransform` to size each button so it matches the shape and boundary of the physical button model.
  - Select each button’s child `Text (TMP)` object and disable or delete it.

  The `CNC_HMI` model already includes 3D models of the POWER, START, and STOP buttons. Rather than replacing these with visible Unity UI elements, we’ll overlay invisible, functional UI buttons on top of the models to make them interactive.

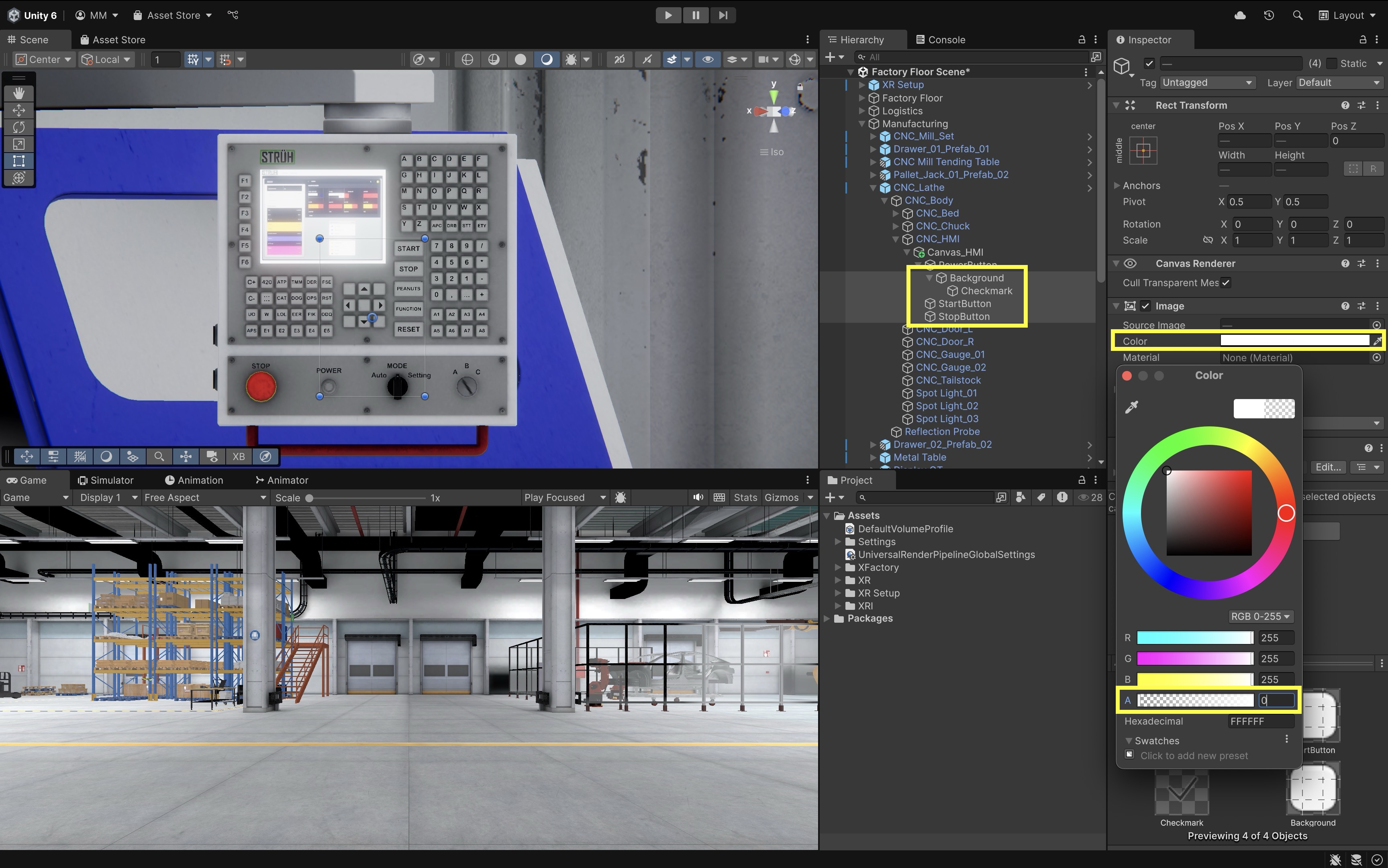

- Make Buttons and Toggle Transparent:

  - Select the button objects (`StartButton` and `StopButton`).
  - Go to the `Image` component in the `Inspector`.
  - Set the `A` (color alpha) to `0` (fully transparent). Alternatively, assign a transparent UI sprite as the source image.
  - Disable or delete the child `Text (TMP)` element if you haven’t done so already.
  - Select the toggle object (`PowerButton`).
  - Expand its child hierarchy.
  - Select the `Background` image. Set `A` to `0`.
  - Select the `Checkmark` image. Set `A` to `0`.
  - Disable or delete the `Label` (TMP) object if you haven’t done so already.

  These steps ensure the UI components remain invisible but fully functional, enabling interaction through ray or touch without obscuring the 3D button models.

- Confirm Interaction Readiness:

  - Ensure the Canvas includes a `Tracked Device Graphic Raycaster`.
  - Ensure buttons have `Interactable` enabled (`Toggle > Interactable` and `Button > Interactable`).
  - Leave space between buttons to prevent accidental interaction.

  At runtime, the user will see only the original button models, but interaction will be handled via the overlaid invisible Unity UI buttons. This preserves the realism of the HMI panel while enabling XR input support.

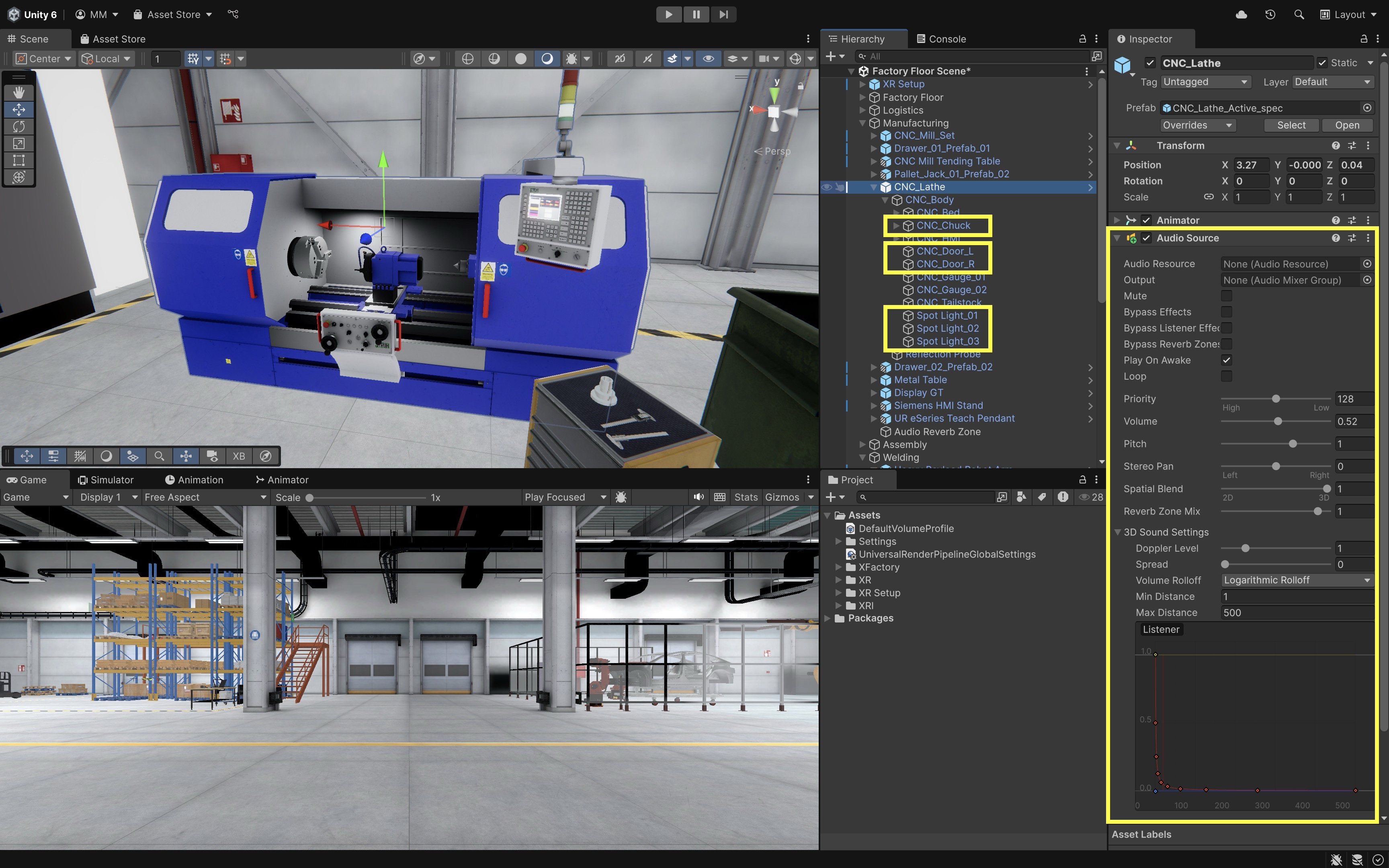

- Prepare Scene Elements for Interaction:

  - Attach an `AudioSource` to the CNC machine for the machine sound. Note that the HMI script shown later in this section looks for an `AudioSource` on its own GameObject (`Canvas_HMI`) and adds one if none is found.
  - Locate the three machine light GameObjects (`Spot Light_01`, `Spot Light_02`, `Spot Light_03`).
  - Locate the chuck (the rotating part of the machine) GameObject (`CNC_Chuck`).
  - Locate the left and right door GameObjects (`CNC_Door_L`, `CNC_Door_R`).

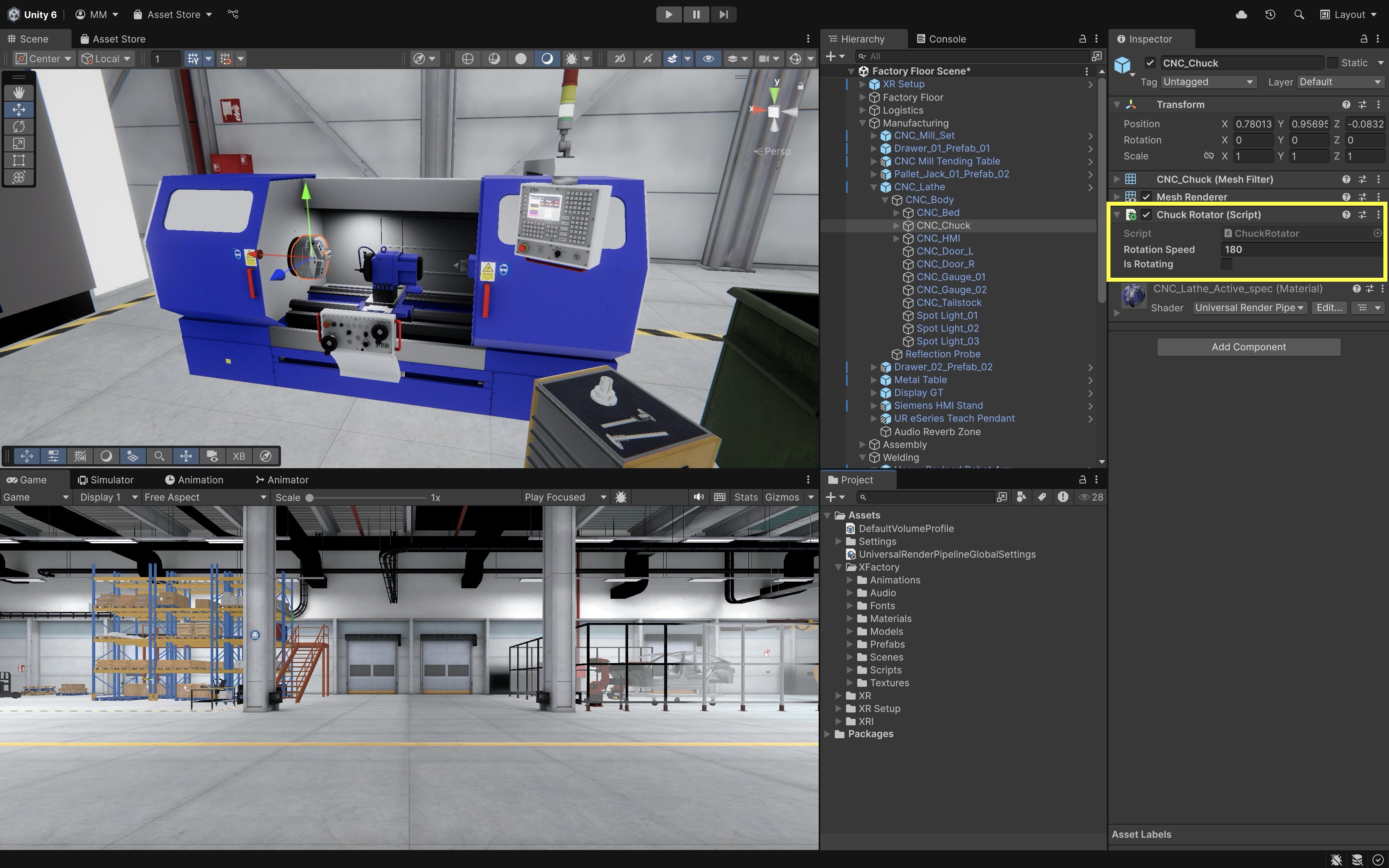

- Script the Chuck Rotation Behavior:

  - Create the following script and attach it to the `CNC_Chuck` GameObject to enable/disable rotation. This is a prerequisite for scripting the HMI behavior as discussed next.

  ```csharp
  using UnityEngine;

  // Spins the chuck around its local X axis while isRotating is true.
  public class ChuckRotator : MonoBehaviour
  {
      public float rotationSpeed = 180f; // degrees per second
      public bool isRotating = false;    // set by the HMI script at runtime

      void Update()
      {
          if (isRotating)
              transform.Rotate(Vector3.right, rotationSpeed * Time.deltaTime);
      }
  }
  ```

- Configure the Script:

  - `Rotation Speed`: Set to `180`. The value is in degrees per second, so 180 corresponds to 30 RPM; adjust it for the desired effect.
  - `Is Rotating`: Leave unchecked by default. This will be controlled by the following HMI script at runtime.

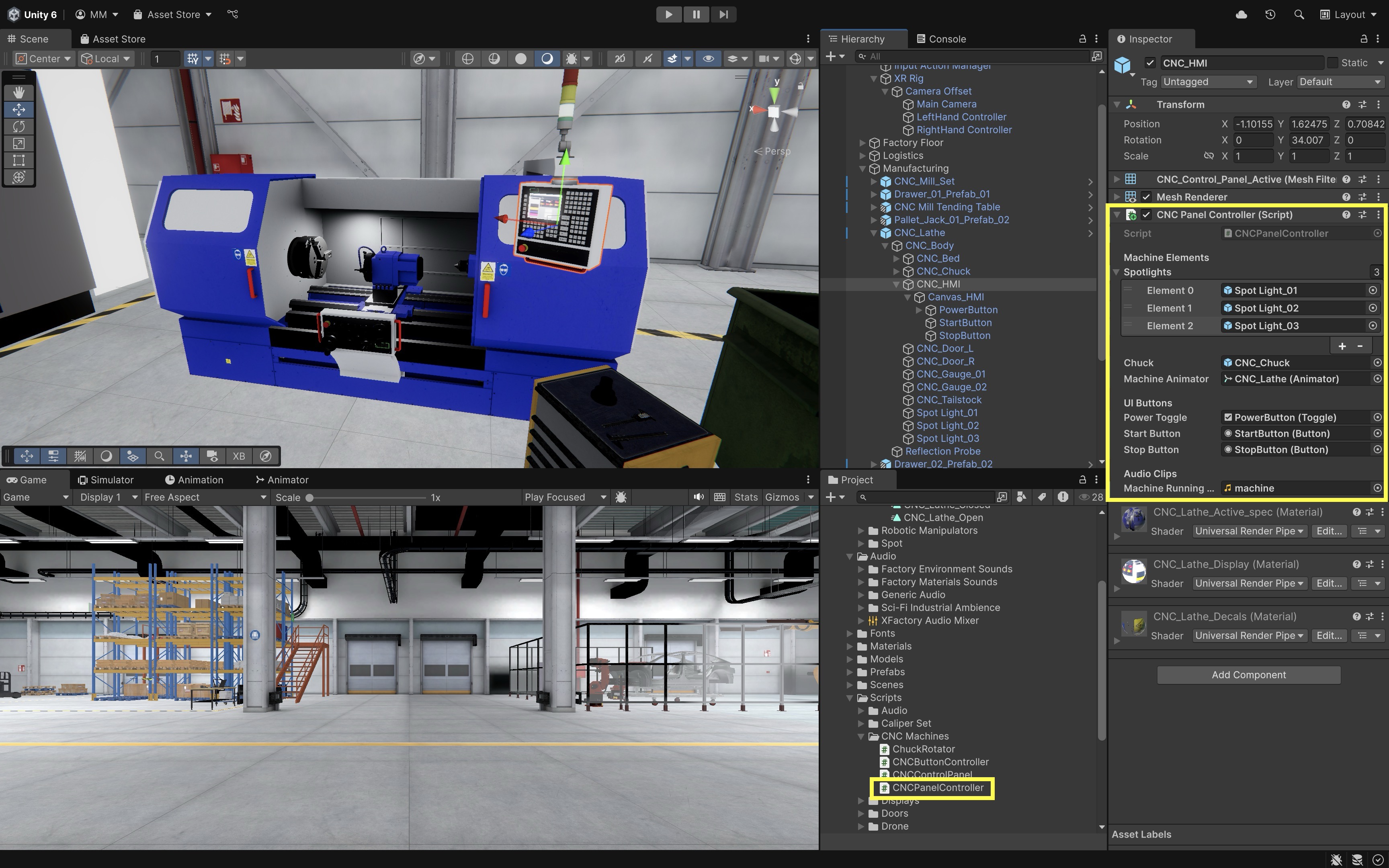

- Script the HMI Behavior:

  - Attach the following script to `Canvas_HMI`.
  - This script handles input events from buttons and triggers machine animation and chuck control based on user interaction.

  ```csharp
  using UnityEngine;
  using UnityEngine.UI;

  public class CNCPanelController : MonoBehaviour
  {
      [Header("Machine Elements")]
      public GameObject[] spotlights;
      public GameObject chuck;
      public Animator machineAnimator; // Animator on CNC_Lathe

      [Header("UI Buttons")]
      public Toggle powerToggle;
      public Button startButton;
      public Button stopButton;

      [Header("Audio Clips")]
      public AudioClip machineRunningClip;

      private AudioSource audioSource;
      private bool isMachineOn = false;
      private bool isRunning = false;

      void Awake()
      {
          // Use the AudioSource on this GameObject, or add one if it is missing.
          audioSource = GetComponent<AudioSource>();
          if (audioSource == null)
          {
              audioSource = gameObject.AddComponent<AudioSource>();
          }
          audioSource.playOnAwake = false;
          audioSource.loop = true;
      }

      void Start()
      {
          // Subscribe to the UI events raised by the overlay controls.
          if (powerToggle != null) powerToggle.onValueChanged.AddListener(OnPowerToggle);
          if (startButton != null) startButton.onClick.AddListener(OnStart);
          if (stopButton != null) stopButton.onClick.AddListener(OnStop);
      }

      void OnPowerToggle(bool isOn)
      {
          isMachineOn = isOn;

          // Machine sound follows the power state.
          if (isOn && machineRunningClip != null)
          {
              audioSource.clip = machineRunningClip;
              audioSource.Play();
          }
          else
          {
              audioSource.Stop();
          }

          // Machine lights follow the power state.
          foreach (var light in spotlights)
              if (light != null) light.SetActive(isOn);

          if (!isOn)
          {
              isRunning = false;
              SetChuckRotation(false);
              // Doors are not affected by power toggle
          }
      }

      void OnStart()
      {
          if (!isMachineOn) return; // ignore Start while powered off
          TriggerDoorAnimation("Close_Doors");
          SetChuckRotation(true);
          isRunning = true;
      }

      void OnStop()
      {
          if (!isMachineOn) return; // ignore Stop while powered off
          SetChuckRotation(false);
          TriggerDoorAnimation("Open_Doors");
          isRunning = false;
      }

      void SetChuckRotation(bool rotate)
      {
          if (chuck == null) return;
          var rotator = chuck.GetComponent<ChuckRotator>();
          if (rotator != null) rotator.isRotating = rotate;
      }

      void TriggerDoorAnimation(string triggerName)
      {
          if (machineAnimator != null) machineAnimator.SetTrigger(triggerName);
      }
  }
  ```

- Configure the Script:

  - Machine Elements:
    - `Spotlights`: Add all 3 spotlight GameObjects to the array.
    - `Chuck`: Drag the `CNC_Chuck` GameObject (must have the `ChuckRotator.cs` script).
    - `Machine Animator`: Drag the `CNC_Lathe` GameObject that has the Animator with the `Open_Doors` and `Close_Doors` triggers.
  - Buttons:
    - `Power Toggle`: Drag the `Toggle` UI element for the Power button.
    - `Start Button`: Drag the `Button` UI element for Start.
    - `Stop Button`: Drag the `Button` UI element for Stop.
  - Audio Clips:
    - `Machine Running Clip`: Drag the looping audio file to play while the machine is powered on.

- Play and Test in VR:

- Enter Play Mode in Unity with your VR headset connected.

- Point at the invisible UI areas over the HMI buttons and use your VR controller or hand tracking to interact.

- Toggle Power and watch the spotlights turn on/off and machine sound start/stop.

- Press Start to see the chuck begin rotating and doors close automatically.

- Press Stop to halt rotation and open the doors.

- Check that all actions feel natural in VR, with no accidental button presses or alignment issues.

- Optional Extensions:

- You can extend this system with UI as output by adding status text (e.g., “Machine Running” or “Doors Closed”), visual button feedback (e.g., color change or glow on press), or haptic or audio cues to confirm each action.

- To further improve realism, consider animating the door movement instead of snapping the doors between positions, or fading the spotlights in and out smoothly rather than toggling them instantly.

This entire system demonstrates UI as input—the user presses buttons that cause state changes in the virtual environment, such as turning the machine on/off, starting/stopping the chuck, or opening/closing doors.
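The status-text extension mentioned above could be sketched as a small companion component that listens to the same controls and writes a message to a label. This is a hedged illustration: `CNCStatusLabel`, `statusText`, and the "Machine Idle", "Machine Off", and "Machine Stopped" strings are assumptions, and a TextMeshPro label is assumed to exist on the HMI canvas.

```csharp
using TMPro;
using UnityEngine;
using UnityEngine.UI;

// Hypothetical companion to CNCPanelController: mirrors the UI events as status text.
public class CNCStatusLabel : MonoBehaviour
{
    [SerializeField] private TextMeshProUGUI statusText; // assumed TMP label on the HMI canvas
    [SerializeField] private Toggle powerToggle;
    [SerializeField] private Button startButton;
    [SerializeField] private Button stopButton;

    private bool isMachineOn;

    private void Start()
    {
        if (powerToggle != null)
        {
            isMachineOn = powerToggle.isOn;
            powerToggle.onValueChanged.AddListener(OnPowerChanged);
        }
        if (startButton != null) startButton.onClick.AddListener(OnStartPressed);
        if (stopButton != null) stopButton.onClick.AddListener(OnStopPressed);

        Show(isMachineOn ? "Machine Idle" : "Machine Off");
    }

    private void OnPowerChanged(bool isOn)
    {
        isMachineOn = isOn;
        Show(isOn ? "Machine Idle" : "Machine Off");
    }

    private void OnStartPressed()
    {
        // Mirror the controller's rule: Start is ignored while powered off.
        if (isMachineOn) Show("Machine Running");
    }

    private void OnStopPressed()
    {
        if (isMachineOn) Show("Machine Stopped");
    }

    private void Show(string message)
    {
        if (statusText != null) statusText.text = message;
    }
}
```

Keeping the label in its own component leaves the input script unchanged; an alternative design would be for the controller itself to expose events that the label subscribes to.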

Spatial Keyboard

In VR, typing can be tricky since users don’t have access to a physical keyboard. A spatial keyboard solves this by letting users enter text directly inside the virtual world—for example, to name files, send chat messages, or fill in login fields. The XR Interaction Toolkit’s Spatial Keyboard provides an easy, built-in solution that works with TextMesh Pro (TMP) input fields and responds naturally to XR ray or direct interactors.

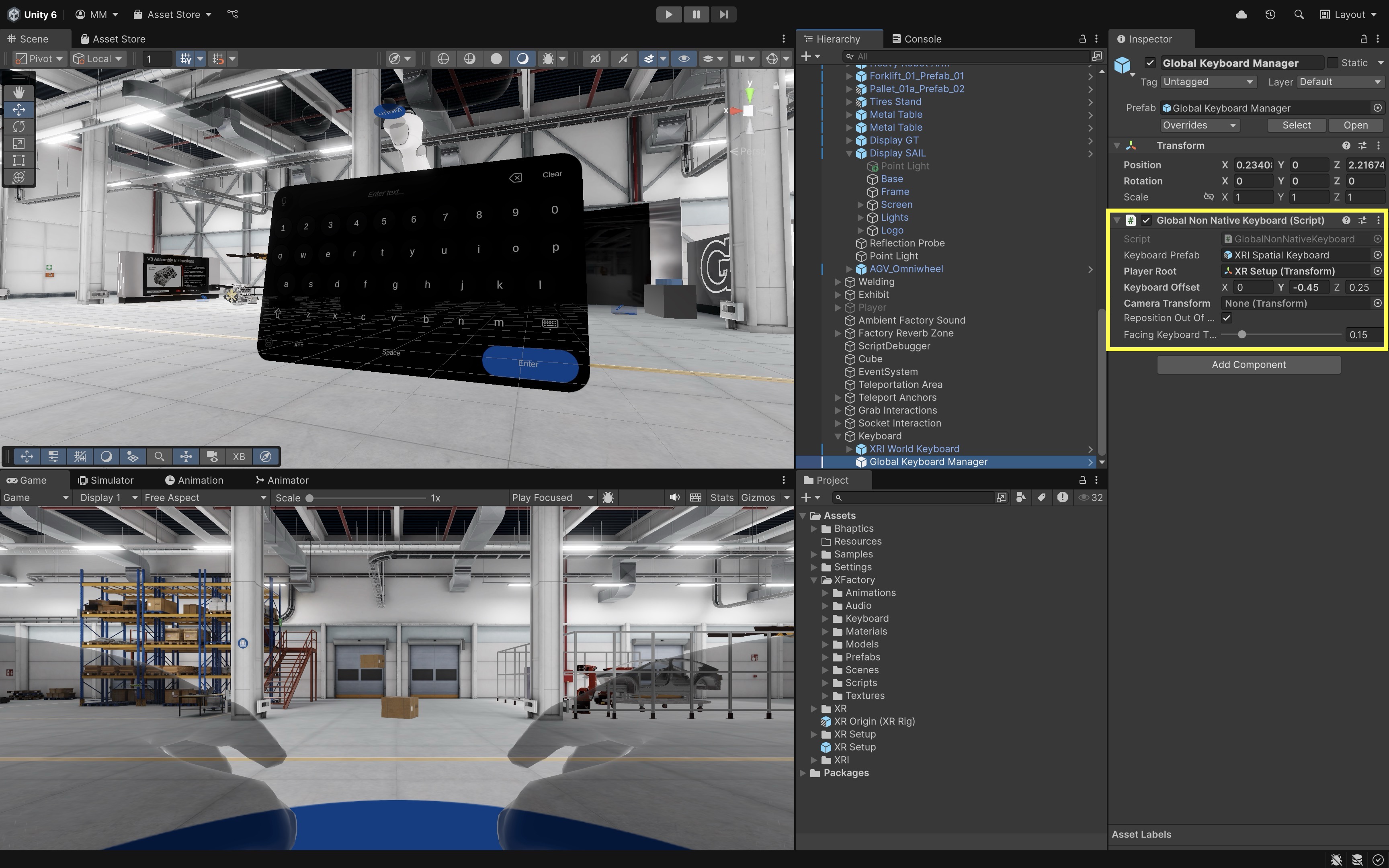

Follow the steps below to quickly add the Spatial Keyboard to your own VR scene:

- Import the Sample:

  - Go to `Window > Package Management > Package Manager > XR Interaction Toolkit > Samples`.
  - Import `Spatial Keyboard`.

- Make Prefabs:

  - In the sample scene, drag `XRI World Keyboard`, `Global Keyboard Manager`, and an `Input Field` into your `Project` window.
  - Save them as prefab variants.

- Add to Your Scene:

  - Open your own VR scene and drag these prefabs in.
  - Position `XRI World Keyboard` and `Global Keyboard Manager` somewhere at the scene root.
  - In the `Global Keyboard Manager`’s `Inspector` window, assign your `XR Setup` to `Player Root`.
  - Position the `TMP Input Field` inside a world space UI (e.g., the large display in the assembly station).

- Link Keyboard to Input Field:

- In the XRI World Keyboard inspector, assign your TMP_InputField as the Target Field (or “Linked Input Field”).

- When the user selects the field, the keyboard will automatically appear.

- Deploy and Test:

- Deploy the app.

- Click or select the text field in VR, and the keyboard will pop up automatically.

- Use the keyboard icon button on the keyboard to close it when finished typing.

UI as Output

In VR, UI elements serve as critical output channels, displaying information from the environment back to the user. These outputs help users understand system status, receive confirmation of actions, monitor sensor readings, or respond to changing conditions. UI as output mimics real-world feedback systems, like indicator lights, gauges, or control displays, that reflect the internal state of a machine or process. In VR, this concept is extended through dynamic text updates, color changes, flashing indicators, and sound or haptic responses that mirror real-world cues. In Unity, these outputs are typically driven through scripts that update UI elements based on runtime events or data.

UI Output Elements

UI output components visually communicate real-time or event-based data from the virtual environment. These elements often work in tandem with system states or user input to present contextual feedback.

- Dynamic Text Fields: Display numerical or string values (e.g., machine status, object weight, task completion messages) updated via scripts. These fields can reflect constantly changing variables like temperature, load, or runtime conditions in real-world equipment simulations.

- Image Components and Icons: Change sprite, color, or visibility based on system state (e.g., warnings, indicators, error lights). They serve as intuitive visual cues, replacing or enhancing physical LEDs or indicator panels in industrial contexts.

- Animations or Flashing Effects: Draw user attention to critical changes, such as alerts or unsafe conditions. Flashing, pulsing, or movement effects increase visibility and urgency, especially in safety-critical applications.

- Audio or Haptic Feedback: While technically sensory, these often accompany UI output to reinforce state transitions or warnings. Sounds like beeps or buzzers and controller vibrations strengthen immersion and ensure important events are noticed even if not directly seen.

- UI Color States: Color changes (e.g., green = safe, red = warning) are widely used in engineering contexts to indicate output status. These visual conventions provide instant recognition and align with industry-standard signage for hazards and system conditions.

- Graphs, Gauges, and Meters: More complex UIs may visualize data trends or magnitudes, though simplified for VR usability. These elements help users monitor performance or diagnostics over time, useful in training dashboards or factory analytics displays.

UI Display Elements

Let’s create a fully functional HMI display for the Industrial_Scale_01a located in the logistics station. We will simulate a real industrial weighing scale that reads the weight (i.e., Rigidbody.mass) of any GameObject placed on it and outputs that weight to a display. If the object exceeds a configurable maximum weight threshold, the display turns red and begins flashing to indicate overload. Here is how to implement this behavior.

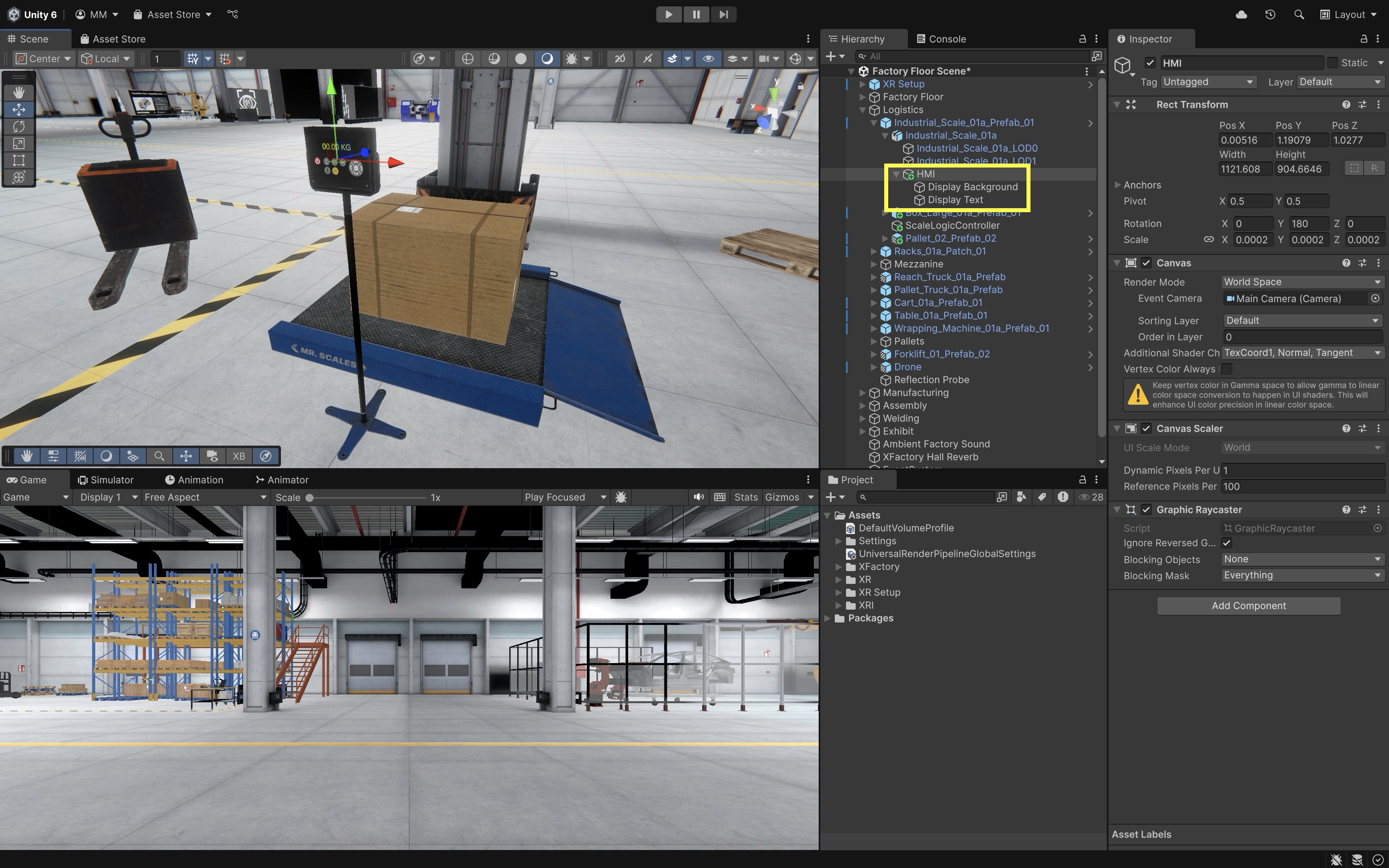

- Identify and Prepare UI Elements:

  - In the `Hierarchy`, locate `Industrial_Scale_01a > HMI`.
  - Confirm the HMI contains a `Display Background` (an `Image` component for visual styling) and a `Display Text` (a `TextMeshProUGUI` component used to show the numeric weight).

  These elements are pre-designed but currently static. We will drive them through a new script that monitors object placement and updates the text dynamically.

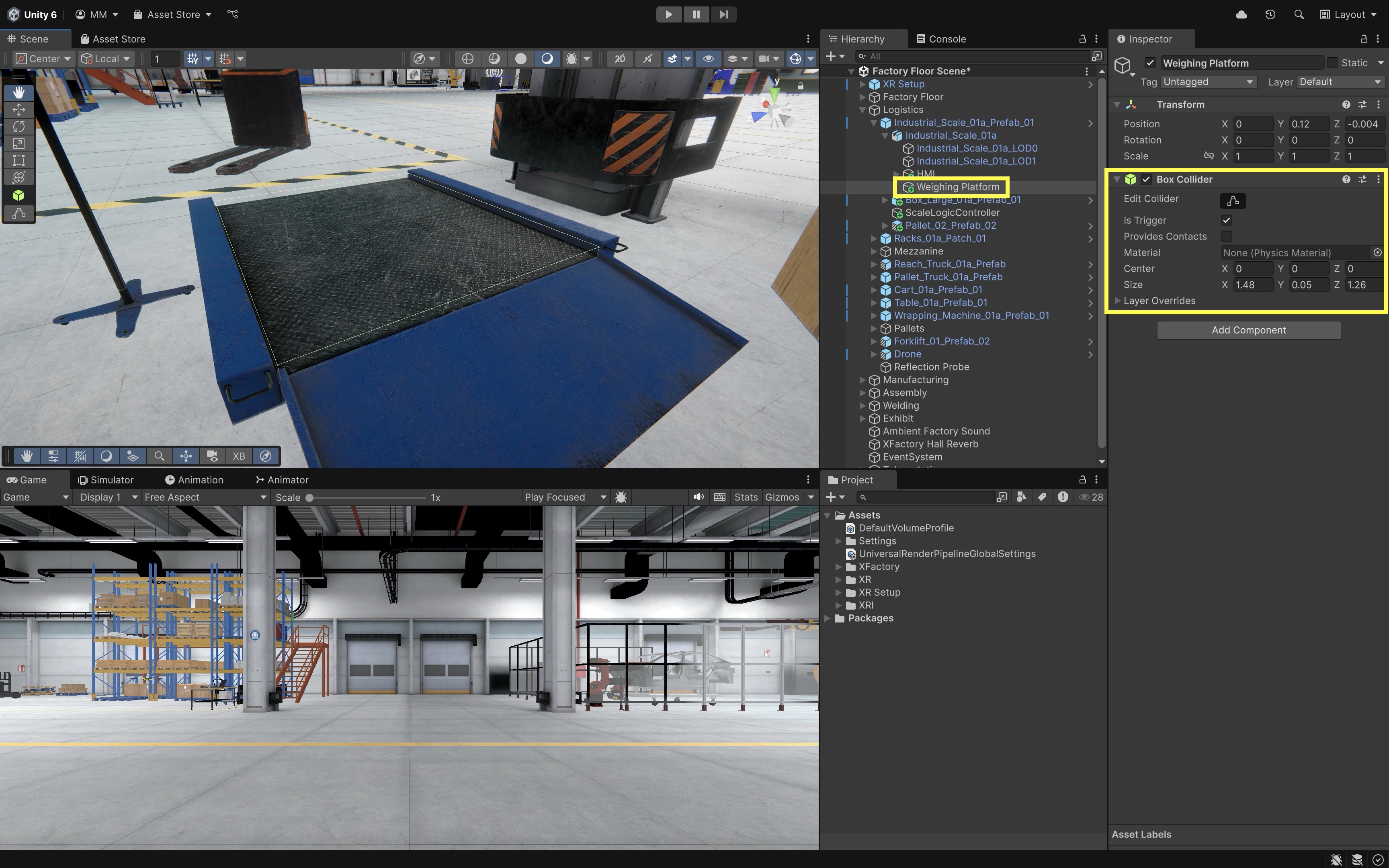

- Add a Trigger Zone for Weighing:

  - In the `Hierarchy`, right-click on `Industrial_Scale_01a` and select `Create Empty`. Rename the new GameObject to `Weighing Platform`.
  - Set its `Position` to `0, 0, 0`, then adjust its local position (via the `Transform`) so it sits slightly above the actual platform surface, right where the user would place a box or object for weighing.
  - With `Weighing Platform` selected, go to `Add Component` and add a `Box Collider`.
  - In the `Box Collider` component, check the `Is Trigger` box.
  - Use the `Box Collider`’s `Center` and `Size` values to define a tight bounding box that covers only the weighable surface area of the scale.

  This method avoids modifying the original `Mesh Collider` used for physical collisions, while still enabling trigger-based logic for detecting when objects are placed on the scale.

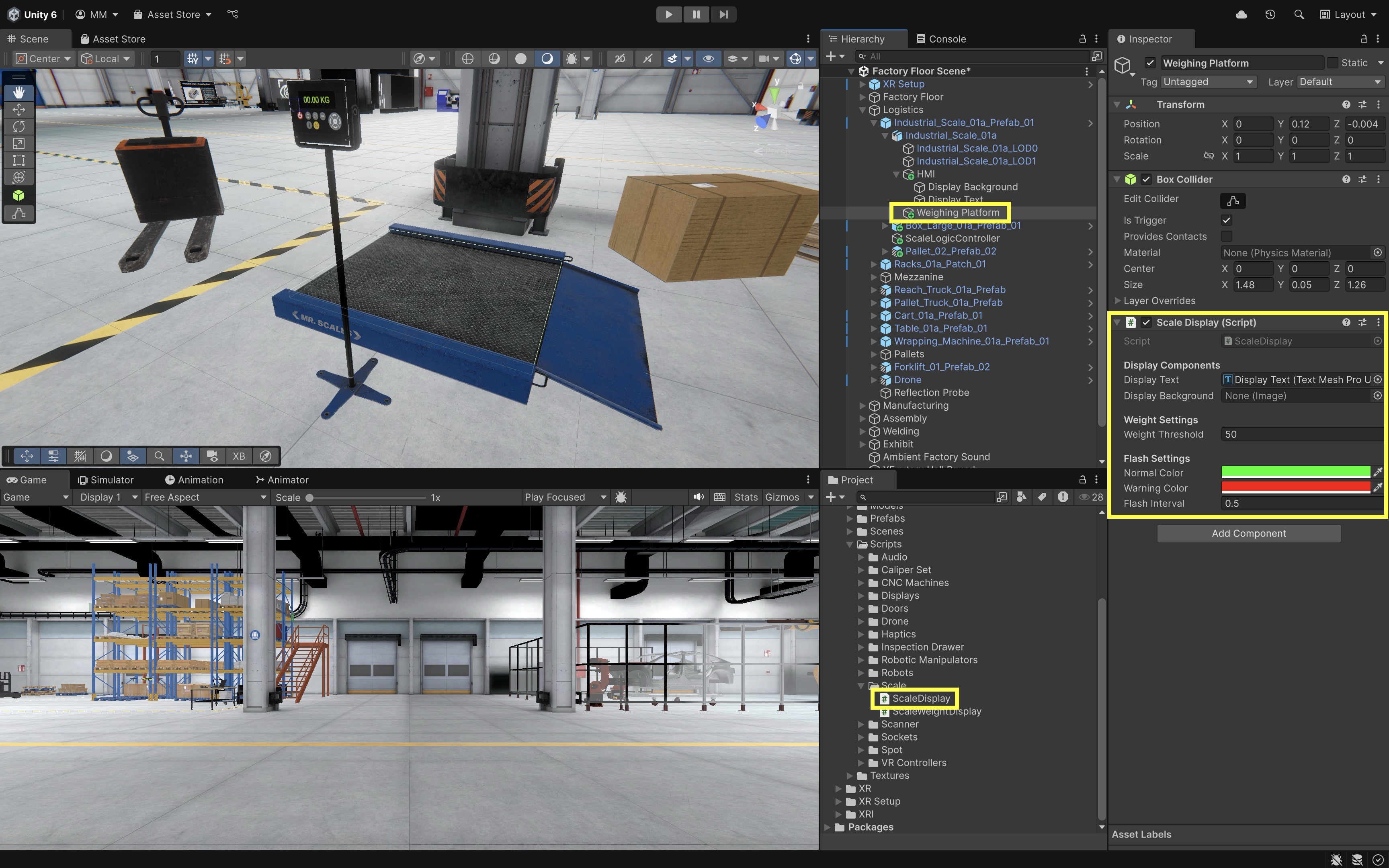

- Create and Configure the Scale Display Script:

  - Create a script called `ScaleDisplay.cs`.
  - Attach it to the `Weighing Platform` GameObject.

  ```csharp
  using UnityEngine;
  using TMPro;
  using UnityEngine.UI;

  public class ScaleDisplay : MonoBehaviour
  {
      [Header("Display Components")]
      public TextMeshProUGUI displayText;
      public Image displayBackground; // not driven here; available for the background extension

      [Header("Weight Settings")]
      public float weightThreshold = 50f; // Max allowed weight in kg

      private Rigidbody currentObject;
      private float currentWeight;

      [Header("Flash Settings")]
      public Color normalColor = Color.green;
      public Color warningColor = Color.red;
      public float flashInterval = 0.5f;

      private bool isOverloaded = false;
      private float flashTimer = 0f;
      private bool flashState = false;

      void Update()
      {
          if (currentObject != null)
          {
              // Read the mass of the object currently on the platform.
              currentWeight = currentObject.mass;
              displayText.text = $"{currentWeight:F1} kg";

              if (currentWeight > weightThreshold)
              {
                  if (!isOverloaded)
                  {
                      // Entering overload: show the warning color immediately.
                      isOverloaded = true;
                      flashTimer = 0f;
                      flashState = true;
                      displayText.color = warningColor;
                  }
                  HandleFlashing();
              }
              else
              {
                  isOverloaded = false;
                  ResetDisplayColor();
              }
          }
          else
          {
              // Nothing on the scale: clear the reading and the overload state.
              currentWeight = 0f;
              isOverloaded = false;
              displayText.text = "---";
              ResetDisplayColor();
          }
      }

      // Alternates the text between warning and normal color every flashInterval seconds.
      private void HandleFlashing()
      {
          flashTimer += Time.deltaTime;
          if (flashTimer >= flashInterval)
          {
              flashTimer = 0f;
              flashState = !flashState;
              displayText.color = flashState ? warningColor : normalColor;
          }
      }

      private void ResetDisplayColor()
      {
          displayText.color = normalColor;
      }

      void OnTriggerEnter(Collider other)
      {
          // Track the Rigidbody of whatever enters the weighing zone.
          var rb = other.attachedRigidbody;
          if (rb != null)
          {
              currentObject = rb;
          }
      }

      void OnTriggerExit(Collider other)
      {
          if (currentObject != null && other.attachedRigidbody == currentObject)
          {
              currentObject = null;
          }
      }
  }
  ```

- Configure the Script:

  - Drag the `Display Text` object from `Industrial_Scale_01a > HMI > Display Text` into the `Display Text` field.
  - Drag the `Display Background` object from `Industrial_Scale_01a > HMI > Display Background` into the `Display Background` field.
  - Set `Weight Threshold` to `50` kg (or another appropriate limit).
  - Set `Normal Color` to green (e.g., `#00FF00`).
  - Set `Warning Color` to red (e.g., `#FF0000`).
  - Set `Flash Interval` to `0.5` seconds for a visible flashing rhythm.

  This setup links the virtual scale’s display to the underlying system logic so that UI elements are updated automatically in real time based on physical object interaction.

- Test the Output Behavior:

  - Place several objects with different `Rigidbody.mass` values in the scene.
  - During play mode, place an object onto the scale surface.
  - If the object has a mass below the threshold, the display shows the weight in green.
  - If the object exceeds the threshold, the weight text begins flashing red to signal overload.
  - Remove the object to reset the display, which returns to showing `---` and green text.

  This is a pure UI as output example. The user does not directly manipulate the interface; the UI passively displays dynamic system feedback, visually communicating the environment’s state.

- Optional Extensions:

  - Audio Alert: Add an `AudioSource` to the scale and play an alert sound when overload is detected. You can trigger this from `OnTriggerEnter` or from the overload branch that calls `HandleFlashing()`, taking care to start the sound only once per overload rather than every frame.
  - Animated Background: Animate the `Display Background` to pulse or fade during overload using a coroutine or material animation.
  - Unit Toggle (kg/lb): Extend the display to allow unit switching by integrating a small UI as Input toggle nearby.
  - Weight History Log: Add a small scrollable log UI that records the last few weight readings for QA or training purposes.

These enhancements further bridge UI output with additional sensory modalities (audio, motion) and can introduce secondary UI inputs to control display behavior, creating a more complete I/O interaction model.
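For the unit toggle extension, the formatting logic can live in a small helper that has no Unity dependency. The sketch below is one possible approach; `WeightFormatter`, `Format`, and `usePounds` are hypothetical names, and the conversion uses 1 kg ≈ 2.20462 lb.

```csharp
using System.Globalization;

// Hypothetical helper: formats a mass given in kilograms as a kg or lb string.
public static class WeightFormatter
{
    public const float PoundsPerKilogram = 2.20462f;

    public static string Format(float kilograms, bool usePounds)
    {
        float value = usePounds ? kilograms * PoundsPerKilogram : kilograms;
        string unit = usePounds ? "lb" : "kg";

        // Invariant culture keeps the decimal point consistent across headset locales.
        return value.ToString("F1", CultureInfo.InvariantCulture) + " " + unit;
    }
}
```

With this helper, a display script could set its text with `WeightFormatter.Format(currentWeight, usePounds)`, where `usePounds` is a boolean field updated from a `Toggle`'s `onValueChanged` event. For example, `Format(50f, false)` returns `"50.0 kg"` and `Format(10f, true)` returns `"22.0 lb"`.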

Best Practices

Designing effective VR user interfaces requires attention to clarity, spatial usability, and immersive interaction. Below are key best practices—grounded in both design theory and real-world implementation using Unity’s XR Interaction Toolkit.

- Clarity & Simplicity: Use large, legible `TextMeshPro` text with strong contrast (e.g., white on dark panels), and reduce UI clutter by showing only contextually relevant information. High-contrast color schemes and minimal visible elements help focus user attention. Clear and simple UI reduces cognitive load, speeds up decision-making, and prevents user error, especially in time-sensitive or technical tasks. In the CNC HMI panel, use bold text to indicate spindle state, and hide G-code settings when the spindle is running.

- Spatial Layout Consistency: Arrange UI elements in realistic, logical groupings that mirror real-world controls, with consistent padding, spacing, and alignment across panels. Use left-aligned or center-aligned layouts consistently within the same interaction context. Consistent layouts make interfaces more predictable and easier to learn, which is crucial for users switching between different stations or tools in a complex VR environment. In XFactory, all large wall displays follow a consistent top-to-bottom flow: title → message → action buttons → secondary links.

- Performance Optimization: Use lightweight materials, throttle frequent updates (e.g., limit chart refreshes to once per second), and avoid excessive animations or high-resolution textures. Rely on event-based scripting instead of polling to help hold the headset’s target frame rate (e.g., 90 FPS). Optimized UIs ensure smooth, responsive interaction, which is especially important in VR, where dropped frames or stutters can cause discomfort, motion sickness, or loss of immersion. On the curved analytics dashboard in the exhibit station, dynamic charts should update at a throttled rate (e.g., every 1 second) rather than every frame.

- Robust Interaction Feedback: Provide immediate visual (e.g., button glow), audio (click sounds), and haptic (controller vibration) feedback on interactions. Make this feedback consistent across all UI to build trust and improve usability. Immediate feedback reassures users that their actions have registered and helps confirm successful input, reducing frustration and accidental repetitions. Teach pendant buttons highlight and play a subtle tone when pressed, confirming joint jog commands.

- Cross-Device Compatibility: Since different headsets vary in field of view, resolution, and input style, use Unity’s `XR Device Simulator` and test on multiple devices (e.g., Quest, Vive, Rift). Provide adjustable UI scaling and repositioning for user comfort and accessibility. Designing for device variability ensures a consistent and inclusive experience across different hardware, which is especially important for courses, deployments, or teams using mixed equipment. In the factory-wide reset display, allow users to reposition or scale the panel using VR controllers to accommodate height and reach differences.

- Design for Ergonomics: Position UI panels in a comfortable reach and viewing zone (typically 0.5 m to 2 m away and about 15° below eye level), and use angled or floating panels for wall-mounted interfaces. Buttons should be at least about 2 cm across in world space for reliable interaction. Ergonomic design prevents fatigue, improves precision, and allows users to maintain a natural posture, which is essential for longer sessions or physically demanding tasks. The robot teach pendant is angled slightly toward the user and positioned at waist height to reduce arm fatigue.

- Error Recovery: Include undo, cancel, and reset options for actions that change system state, and confirm critical tasks with dialog prompts. Allow users to safely back out of flows or retry without restarting the scene. Supporting graceful recovery from mistakes builds user confidence and prevents irreversible errors, which is key for high-stakes or training-based environments like virtual factories. In the manufacturing station, the CNC HMI includes a “Reset System” button that first opens a confirmation panel with `Confirm` and `Cancel` options. If `Confirm` is pressed, a second dialog asks the user to validate the current machine state (e.g., “Is the spindle stopped?”) before finalizing the reset. This prevents unsafe interruptions and gives users a chance to back out safely. If no response is given within 10 seconds, the UI defaults to canceling the operation.
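The confirm-or-cancel pattern with a timeout described under Error Recovery could be sketched with a coroutine. This is an illustrative sketch, not the XFactory implementation: `ConfirmDialog`, `Ask`, and the field names are hypothetical, and the component is assumed to sit on an always-active object separate from the `panel` it shows and hides.

```csharp
using System;
using System.Collections;
using UnityEngine;
using UnityEngine.UI;

// Hypothetical sketch: a confirmation panel that cancels itself after a timeout.
// Place this on an always-active object, not on the panel it toggles.
public class ConfirmDialog : MonoBehaviour
{
    [SerializeField] private GameObject panel;           // dialog root to show/hide
    [SerializeField] private Button confirmButton;
    [SerializeField] private Button cancelButton;
    [SerializeField] private float timeoutSeconds = 10f; // default to cancel after this long

    private Action onConfirmed;
    private Coroutine timeoutRoutine;

    private void Awake()
    {
        confirmButton.onClick.AddListener(Confirm);
        cancelButton.onClick.AddListener(Close);
        panel.SetActive(false);
    }

    // Opens the dialog; the callback runs only if the user presses Confirm in time.
    public void Ask(Action confirmedCallback)
    {
        if (timeoutRoutine != null) StopCoroutine(timeoutRoutine);
        onConfirmed = confirmedCallback;
        panel.SetActive(true);
        timeoutRoutine = StartCoroutine(CancelAfterTimeout());
    }

    private IEnumerator CancelAfterTimeout()
    {
        yield return new WaitForSeconds(timeoutSeconds);
        timeoutRoutine = null;
        Close(); // no response: treat as cancel
    }

    private void Confirm()
    {
        Action callback = onConfirmed;
        Close();
        callback?.Invoke();
    }

    private void Close()
    {
        if (timeoutRoutine != null)
        {
            StopCoroutine(timeoutRoutine);
            timeoutRoutine = null;
        }
        onConfirmed = null;
        panel.SetActive(false);
    }
}
```

A two-step confirmation like the one described above could chain two such dialogs, with the first dialog's callback calling `Ask` on the second.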

Key Takeaways

Effective VR user interfaces bridge the gap between the user and immersive environments by serving as both input mechanisms—enabling precise control through buttons, sliders, toggles, and ray-based interactions—and output channels that provide timely feedback via visual, audio, or haptic cues. In engineering contexts like the XFactory environment, spatial UIs replicate real-world industrial controls while leveraging VR’s 3D capabilities for intuitive placement, ergonomic design, and rich feedback loops. Well-designed VR UIs balance clarity, consistency, and performance with accessibility and adaptability, ensuring they remain comfortable, responsive, and trustworthy across diverse hardware setups. By integrating robust feedback, maintaining immersive presence, and following ergonomic and usability best practices, these interfaces support confident decision-making, reduce user error, and create seamless, high-fidelity interaction experiences.