D6. Touch and Sound in VR

Learning Outcomes

- Explain and apply multimodal feedback principles in VR. Before class, review how coordinated audio–haptic cues (consistency, proportional intensity, tight synchronization, localization, and comfort controls) improve realism, situational awareness, and safety in engineering scenarios like assembly, teleoperation, and diagnostics.

- Implement controller and glove haptics with Unity XRI. In preparation, configure `HapticImpulsePlayer` and `SimpleHapticFeedback` for hover/select/attach events, and verify your `XR Rig` interactors. Skim the per-object patterns (`CustomHoverHaptics`, `CustomSelectHaptics`) and, if available, note the setup for hand tracking and bHaptics gloves (colliders + `SimpleFingerHaptics`) to deliver object-specific fingertip feedback.

- Configure spatial, event-driven, and proximity-triggered audio. Ahead of the session, attach and tune an `AudioSource` (set `Spatial Blend`, `Min/Max Distance`, loop/3D), prepare clips for event-based playback (e.g., surface-tag switcher), and review optional `Audio Reverb Zone` and proximity scripts so sounds activate and attenuate naturally as users move through the environment.

Multimodal VR Experiences

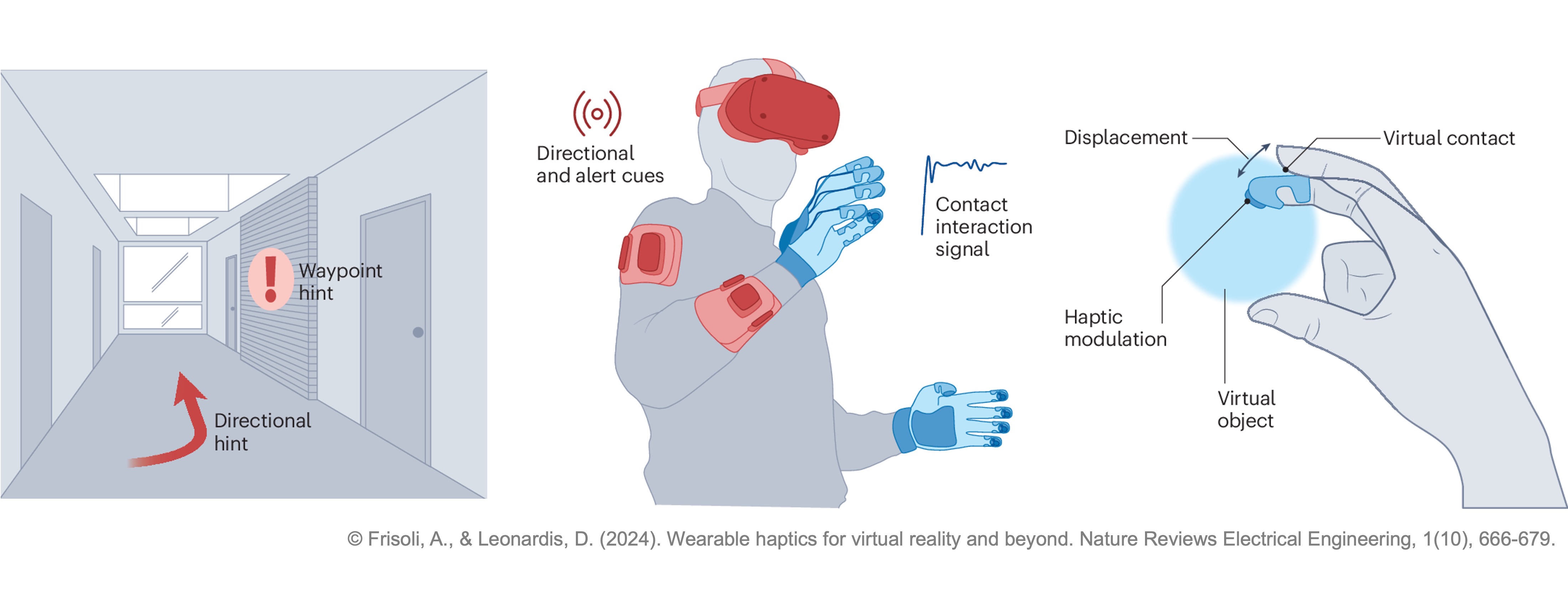

In VR, creating a truly immersive experience goes beyond realistic visuals. The senses of hearing and touch play pivotal roles in bridging the gap between the virtual and the physical world. In engineering applications—such as industrial simulations, training environments, and control systems—accurate auditory cues and haptic feedback are critical for:

- Enhanced Situational Awareness: Operators in control rooms or simulation environments can quickly gauge system status and spatial relationships without relying solely on visual displays.

- Improved Safety and Efficiency: Timely and clear sensory feedback helps users confirm actions (such as engaging emergency controls) and reduces the risk of errors.

- Realistic Simulation of Real-World Systems: Sound cues and tactile sensations, such as the click of a button or the vibration of a control panel, closely mimic physical interactions, making simulations more believable.

- Increased User Engagement: A rich, multimodal experience keeps users immersed in the virtual environment, essential for effective training and remote operations.

Engineering Use Cases

- Industrial Training Simulators: Teach operators to handle machinery, control panels, or robotic arms by providing immediate sensory feedback. In the assembly station of XFactory, users can be trained to work alongside a robotic arm to assemble a complex part like the V8 engine. As they correctly (or incorrectly) assemble each component, the system gives a confirmation beep and a short vibration pulse to the controller.

- Remote Monitoring and Teleoperation: Allow operators to “feel” and “hear” system statuses and anomalies when controlling equipment remotely. In the logistics station of XFactory, the drone is operated remotely. If the path becomes cluttered or a system error is detected (e.g., battery low or sensor failure), a warning tone is played, and a longer vibration indicates urgency.

- Design and Prototyping: Enable engineers to evaluate new control interfaces or equipment designs by simulating the sensory experience of interacting with physical components. In the exhibit station of XFactory, users can import and evaluate a prototype touch-sensitive control panel. Each button provides a different audio-haptic profile to simulate physical differences (e.g., soft rubbery button vs. hard metallic switch).

- Maintenance and Troubleshooting: Help diagnose issues by associating specific haptic and audio cues with malfunctioning systems, such as abnormal vibrations or unexpected sounds. In the welding station of XFactory, a robot’s welding head alignment is faulty. When diagnostics are run, the VR system plays a distorted buzzing sound and sends a repetitive low-pulse vibration to indicate a hardware malfunction.

Design Principles

Effective haptic design enhances realism, reinforces user actions, and maintains comfort across devices. The following principles ensure feedback feels intentional, synchronized, and adaptable in any VR experience.

- Consistency and Predictability: Each user action should always produce the same, recognizable response. A light tap might yield a quick, soft pulse and click sound, while a firm press delivers a stronger, deeper vibration. Predictable feedback builds user trust and supports muscle memory.

- Proportional and Context-Aware Feedback: Scale haptic strength and duration to match the significance of an interaction. Routine actions (like opening drawers or pressing buttons) should feel subtle and brief, while critical events (such as warnings or heavy impacts) should deliver stronger, layered feedback patterns that capture attention.

- Multimodal Synchronization: Align haptic, audio, and visual feedback to occur simultaneously, ideally within a few milliseconds. When a button is pressed, the user should feel a pulse, hear a click, and see a corresponding animation at the same moment. Tight timing reinforces realism and immersion.

- Frequency and Texture Pairing: Use different vibration frequencies to convey material qualities or intensity. High-frequency vibrations can simulate texture or fine detail, while low-frequency rumbles suggest weight or impact. Pair these with complementary audio tones so that both sensations enhance, rather than compete with, each other.

- Localization and Device Awareness: Deliver haptic impulses only to the controller or device involved in the interaction. Pressing a control lever with the left hand should cause only the left controller to vibrate. Because vibration strength and frequency vary between devices, calibrate haptics per hardware profile and degrade gracefully when certain effects aren’t supported.

- Comfort and Adaptability: Avoid excessive or continuous vibrations that cause fatigue. Provide user settings to adjust intensity or switch between “audio-only,” “haptic-only,” or combined feedback modes. Flexible control ensures accessibility and comfort during extended sessions.
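The consistency, proportionality, and comfort principles above can be sketched as a small lookup table in plain C#. This is only an illustration: the `FeedbackEvent`, `FeedbackMode`, `HapticPulse`, and `FeedbackProfiles` names are hypothetical and not part of XRI or XFactory, and the `Warning` values are assumed. The hover, select, and attach values mirror the ones used later in this session.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical event categories (not part of XRI or the XFactory project).
public enum FeedbackEvent { Hover, Select, Attach, Warning }

// Hypothetical user preference for the "Comfort and Adaptability" principle.
public enum FeedbackMode { Combined, AudioOnly, HapticOnly }

// One haptic pulse: amplitude in [0, 1], duration in seconds.
public readonly struct HapticPulse
{
    public readonly float Amplitude;
    public readonly float Duration;

    public HapticPulse(float amplitude, float duration)
    {
        Amplitude = amplitude;
        Duration = duration;
    }
}

public static class FeedbackProfiles
{
    // Consistency: each event always maps to the same pulse.
    // Proportionality: more significant events get stronger, longer pulses.
    private static readonly Dictionary<FeedbackEvent, HapticPulse> Pulses =
        new Dictionary<FeedbackEvent, HapticPulse>
        {
            { FeedbackEvent.Hover,   new HapticPulse(0.25f, 0.10f) },
            { FeedbackEvent.Select,  new HapticPulse(0.50f, 0.25f) },
            { FeedbackEvent.Attach,  new HapticPulse(0.70f, 0.35f) },
            { FeedbackEvent.Warning, new HapticPulse(1.00f, 0.60f) }, // assumed values
        };

    // Returns the pulse to play, scaled by a user intensity setting in [0, 1].
    // In audio-only mode the amplitude is zero, so no vibration is felt.
    public static HapticPulse Resolve(FeedbackEvent evt, FeedbackMode mode, float userIntensity)
    {
        HapticPulse basePulse = Pulses[evt];
        if (mode == FeedbackMode.AudioOnly)
            return new HapticPulse(0f, basePulse.Duration);

        float scale = Math.Min(1f, Math.Max(0f, userIntensity));
        return new HapticPulse(basePulse.Amplitude * scale, basePulse.Duration);
    }

    // True when a sound should accompany the pulse.
    public static bool ShouldPlayAudio(FeedbackMode mode) => mode != FeedbackMode.HapticOnly;
}
```

Keeping the table in one place means every script that needs a pulse asks for it by event name, so the same action cannot drift into feeling different in different stations.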

Controller Haptics

In VR, haptics refers to the use of technology that simulates the sense of touch, allowing users to feel physical sensations in virtual environments. This is achieved through devices like gloves, vests, or controllers that provide feedback such as vibrations, pressure, or force, enhancing immersion and realism in virtual experiences. In XFactory, haptics bring machinery and tools to life. From operating a robot in the assembly station to pushing a button on a forklift dashboard in the logistics station, carefully designed haptic responses reinforce the user’s sense of presence and interaction. Note that good haptic design should balance consistency, context, and comfort, synchronizing multiple sensory cues while adapting gracefully to different users and hardware.

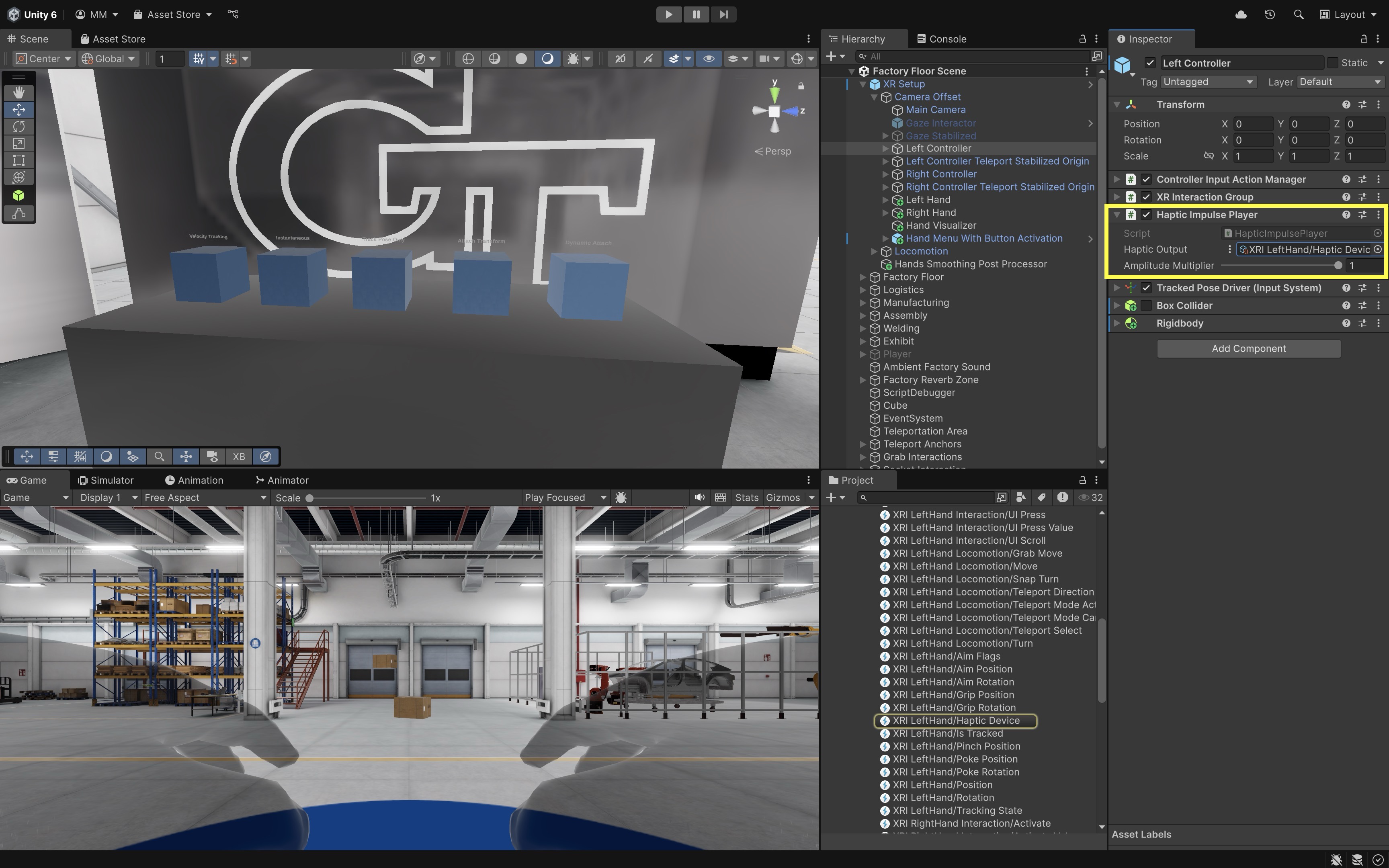

Haptic Impulse Player

The HapticImpulsePlayer component attached to each controller is the core output driver in Unity’s XR Interaction Toolkit haptic system. It is responsible for sending vibration impulses to the controller’s haptic motor. Rather than deciding when vibrations should play, the HapticImpulsePlayer defines how they are delivered once triggered. It provides a unified interface for sending impulses from other components, such as SimpleHapticFeedback, directly to a specific XR controller. Typically, you attach one HapticImpulsePlayer to each controller in your XR Rig (for example, the left and right hands). Other components then reference it to trigger haptics during interactions. Key properties include:

- `Haptic Output`: Specifies the controller or haptic control that impulses are sent to.
- `Amplitude Multiplier`: A global scaling factor that amplifies or dampens all outgoing haptic impulses from this player.

In essence, the `HapticImpulsePlayer` functions like an amplifier: it doesn’t initiate haptics on its own but ensures that all incoming impulses are properly routed and adjusted before reaching the hardware device.
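As a minimal sketch of "other components reference it to trigger haptics", the script below sends one pulse through a referenced `HapticImpulsePlayer`. It assumes XRI 3.x, where the component lives in the `UnityEngine.XR.Interaction.Toolkit.Inputs.Haptics` namespace; the `WarningPulse` class and its default values are hypothetical.

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit.Inputs.Haptics;

// Hypothetical helper: plays a warning pulse through a controller's HapticImpulsePlayer.
public class WarningPulse : MonoBehaviour
{
    [Tooltip("HapticImpulsePlayer on the controller that should vibrate.")]
    public HapticImpulsePlayer impulsePlayer;

    [Range(0f, 1f)]
    public float amplitude = 0.8f;   // assumed value

    [Tooltip("Pulse length in seconds.")]
    public float duration = 0.5f;    // assumed value

    // Call this from a UnityEvent or another script when a warning is raised.
    public void Play()
    {
        if (impulsePlayer == null) return;

        // The player applies its Amplitude Multiplier before the impulse reaches the device.
        impulsePlayer.SendHapticImpulse(amplitude, duration);
    }
}
```

Referencing the left or right controller's player in the `Inspector` keeps the pulse localized to the hand involved.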

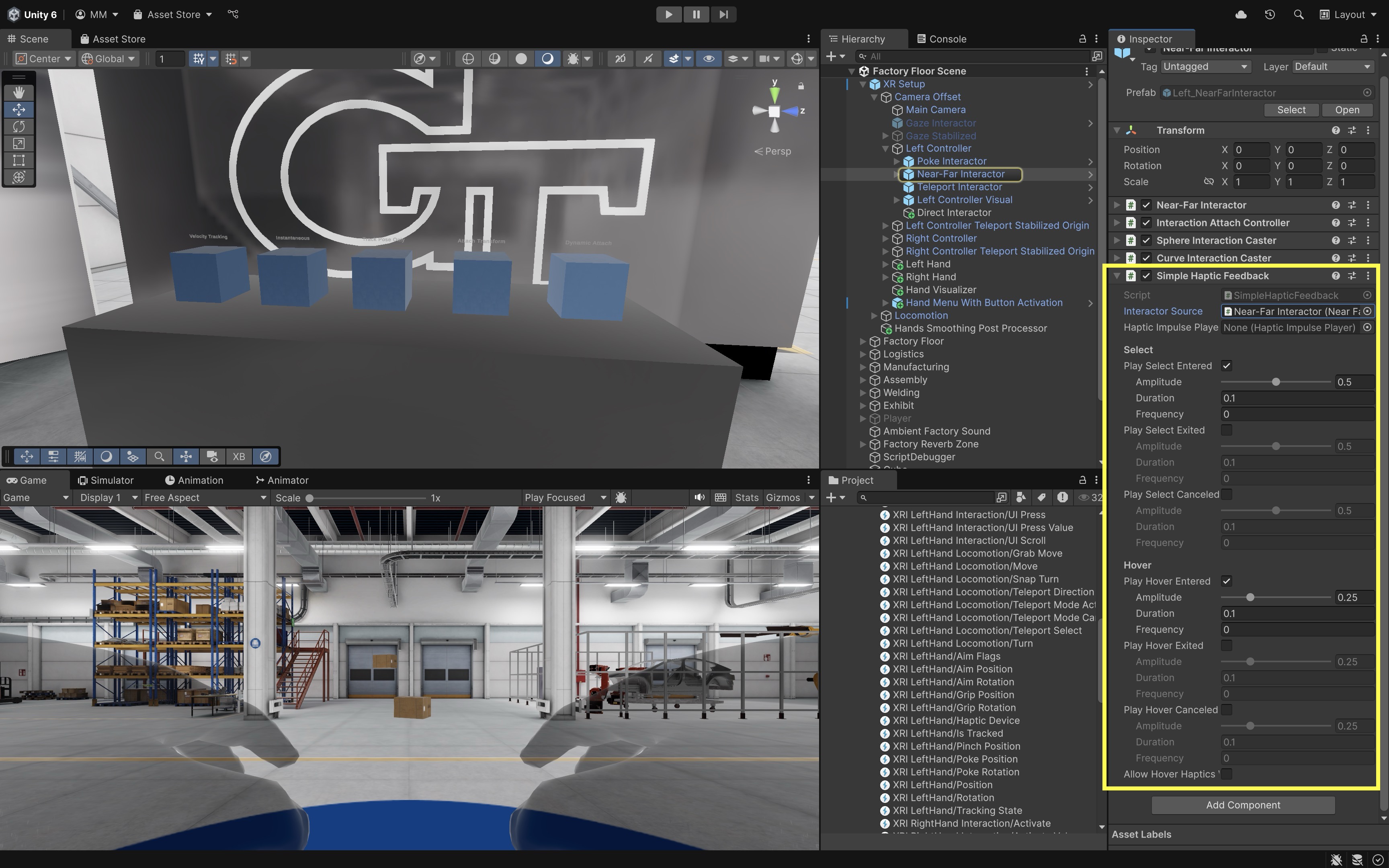

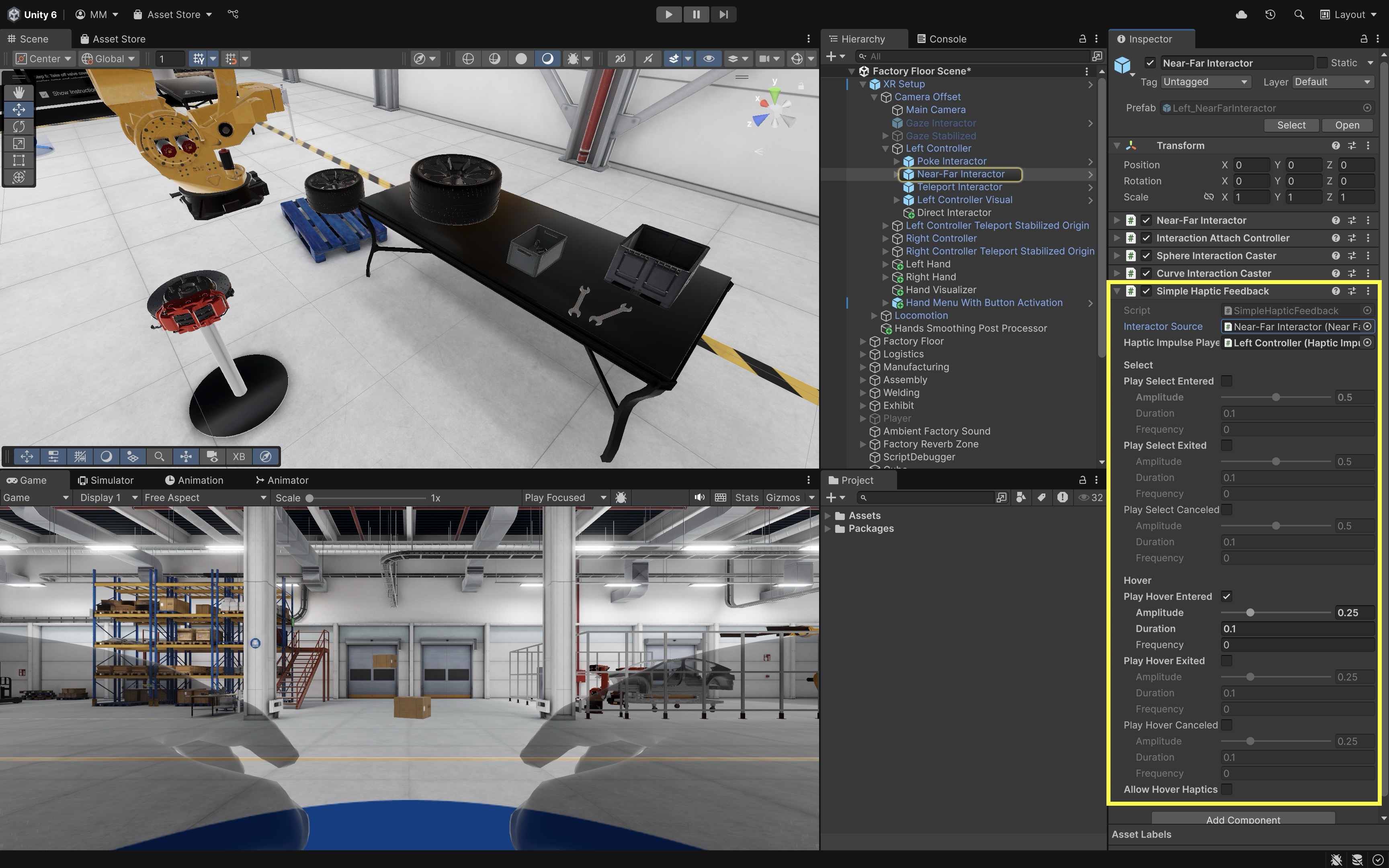

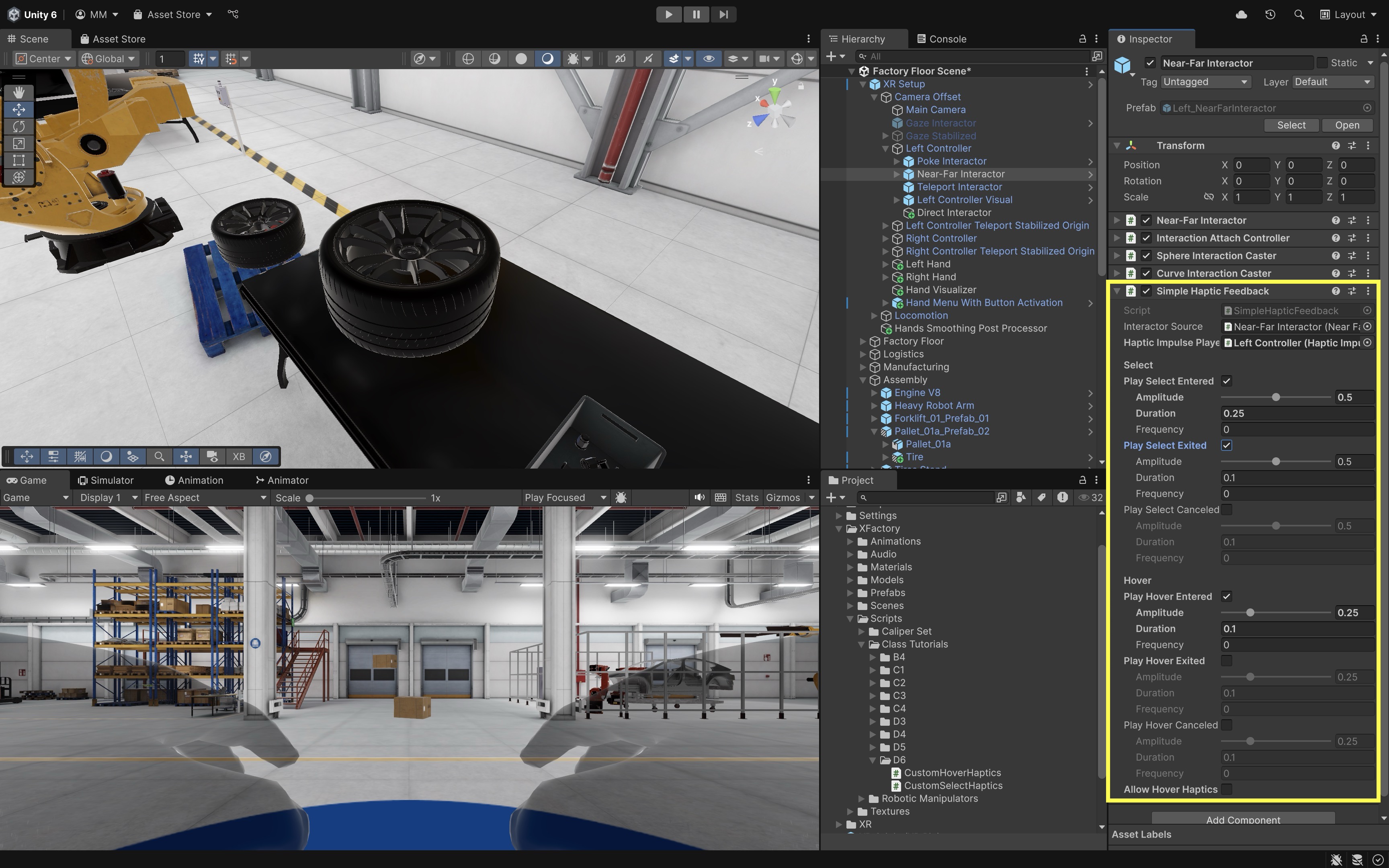

Simple Haptic Feedback

The SimpleHapticFeedback component serves as the event-driven controller for haptics, determining when and what haptic sensations to play for each interaction. It listens to interaction events from an interactor (e.g., hovering over or selecting an interactable) and uses a linked HapticImpulsePlayer to send the corresponding vibration to the hardware. Attach SimpleHapticFeedback to interaction components like Near-Far Interactor, Poke Interactor, or Teleport Interactor. This setup allows the player’s controller to automatically vibrate when specific events occur, such as when an object is grabbed, released, or approached. Key properties include:

- `Interactor Source`: Defines which interactor this component listens to for hover and select events (e.g., `Near-Far Interactor`).
- `Haptic Impulse Player`: References the `HapticImpulsePlayer` that actually sends the vibration signal. If left empty, Unity will automatically assign a suitable `HapticImpulsePlayer` from the controller’s components at runtime.
- Toggleable event options such as `Play Select Entered`, `Play Hover Entered`, or `Play Select Exited` determine which interactions trigger haptics.
- Each event can define its own parameters, customizing the feel of the feedback. For instance, a short, sharp vibration with high amplitude might simulate a click or button press, while a longer, softer pulse could represent a gentle touch or hover cue:
  - `Amplitude`: How strong the vibration is (range `0.0 – 1.0`).
  - `Duration`: How long the vibration lasts (in seconds).
  - `Frequency`: The pitch or “buzziness” of the vibration (in Hz, optional and device-dependent).
- `Allow Hover Haptics While Selecting`: Enables hover-related vibrations even while an object is being held or interacted with.

Developers can also call controller-level methods like `SendHapticImpulse(float amplitude, float duration)` to manually trigger vibrations, but using `SimpleHapticFeedback` provides a more structured, event-based workflow that aligns with the XR Interaction Toolkit’s interaction system.

Configure Hover Feedback

The `Simple Haptic Feedback` component in the XR Interaction Toolkit provides a flexible way to configure vibration feedback during common interaction events like hovering, selecting, or canceling. Let’s use it to create a light pulse when the user’s controller hovers near the tire in the assembly station.

- Add the `Simple Haptic Feedback` Component:
  - In the `Hierarchy`, select the `Left Controller > Near-Far Interactor` (repeat for `Right Controller` if needed).
  - In the `Inspector`, click `Add Component`.
  - Search for and add `Simple Haptic Feedback`.

- Enable Hover Enter Feedback:
  - In the `Simple Haptic Feedback` component, scroll to the `Hover` section.
  - Check the box next to `Play Hover Entered`.

- Set Hover Feedback Parameters:
  - Set `Amplitude` to `0.25` for light vibration.
  - Set `Duration` to `0.1` to create a short pulse.
  - Set `Frequency` to `0.0` (use default frequency or leave at 0 if unsure).
  - These values create a gentle, noticeable cue as users move close to the tire, ideal for subtle prompts.

- Test Hover Feedback in Scene:
  - Press `Play` (if using the Meta Link app) or deploy the app to your headset.
  - Move your controller near the tire positioned on the table.
  - You should feel a soft pulse as the interactor enters hover range. This hover interaction feels intuitive and non-intrusive, perfect for signaling interactivity across the factory, such as near tools, robot controls, or digital screens.

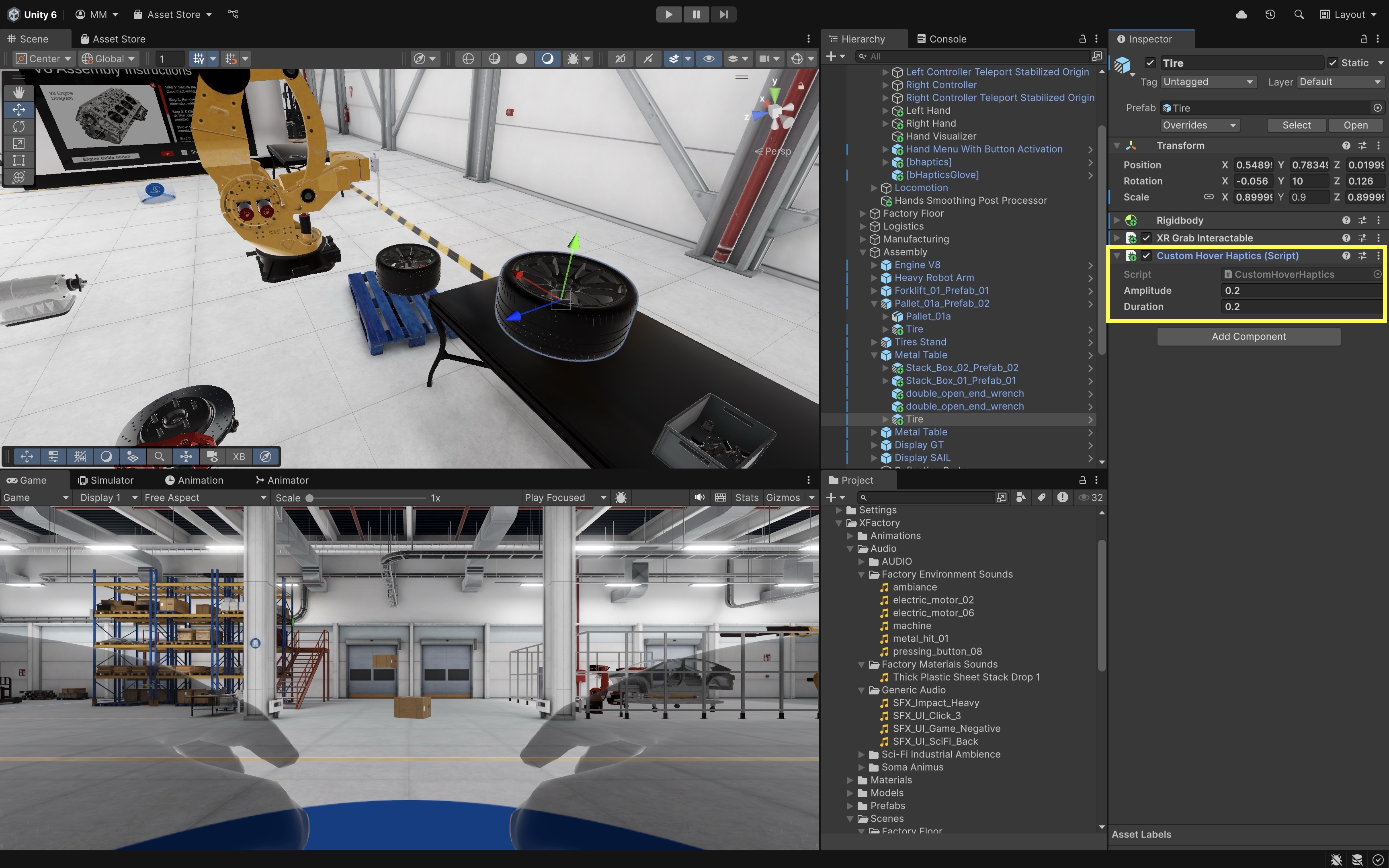

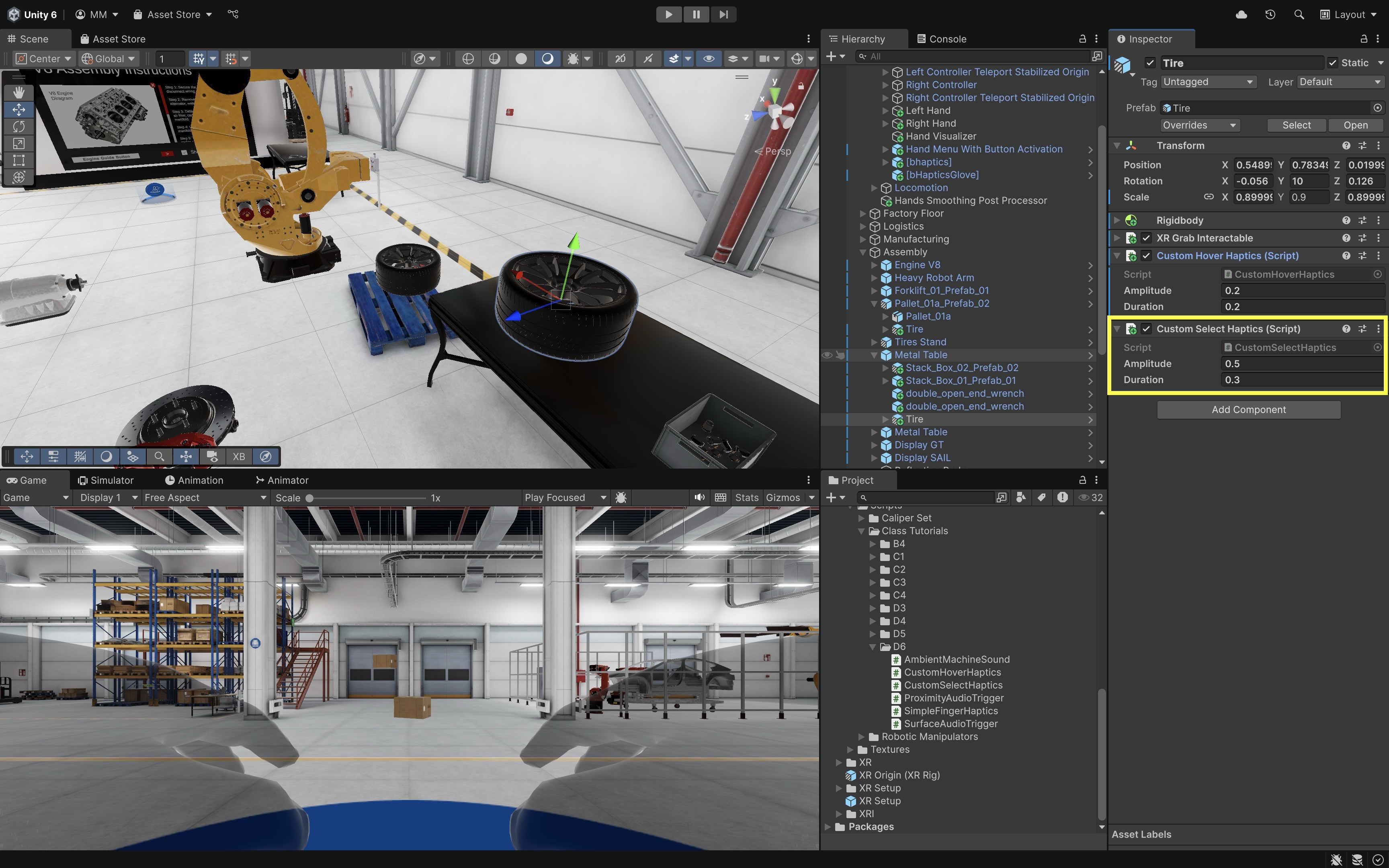

- Add Object-Specific Hover Haptics:
  - Optionally, write a script to enable more granular control (e.g., different objects trigger different vibrations) and define feedback per object, rather than globally on the controller.

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;
using UnityEngine.XR.Interaction.Toolkit.Interactables;
using UnityEngine.XR.Interaction.Toolkit.Interactors;

// Ensures the GameObject has an XRBaseInteractable component
[RequireComponent(typeof(XRBaseInteractable))]
public class CustomHoverHaptics : MonoBehaviour
{
    [Tooltip("Strength of the haptic impulse (0.0 – 1.0).")]
    public float amplitude = 0.2f; // vibration intensity

    [Tooltip("Duration of the haptic impulse in seconds.")]
    public float duration = 0.1f; // how long it vibrates

    private void OnEnable()
    {
        // Get the interactable and subscribe to the hoverEntered event
        var interactable = GetComponent<XRBaseInteractable>();
        interactable.hoverEntered.AddListener(OnHoverEntered);
    }

    private void OnDisable()
    {
        // Unsubscribe when disabled so no stale listener remains
        var interactable = GetComponent<XRBaseInteractable>();
        interactable.hoverEntered.RemoveListener(OnHoverEntered);
    }

    private void OnHoverEntered(HoverEnterEventArgs args)
    {
        // If the hovering interactor is input-driven (e.g., a controller), send a haptic pulse.
        // XRI 3.x uses XRBaseInputInteractor, matching the select script in this session.
        if (args.interactorObject is XRBaseInputInteractor controllerInteractor)
        {
            controllerInteractor.SendHapticImpulse(amplitude, duration);
        }
    }
}
```

`XRBaseInteractable` is the base class for all interactable objects in Unity’s XR Interaction Toolkit, providing the core events and logic for interactions such as hovering, selecting, and activating. Classes like `XRGrabInteractable` and `XRSimpleInteractable` inherit from it to implement specific interaction behaviors. (`XRSocketInteractor`, by contrast, is an interactor, not an interactable.)

- Configure the Script:
  - Select the `Tire` GameObject in the `Hierarchy`.
  - Click `Add Component`, then add `CustomHoverHaptics.cs`.
  - Tweak `Amplitude` and `Duration` in the `Inspector` for a different sensation unique to this object.

Use the `Simple Haptic Feedback` component for controller-based interaction logic. Use the custom script when you want different objects (e.g., torque wrench vs. tire) to feel distinct when hovered.

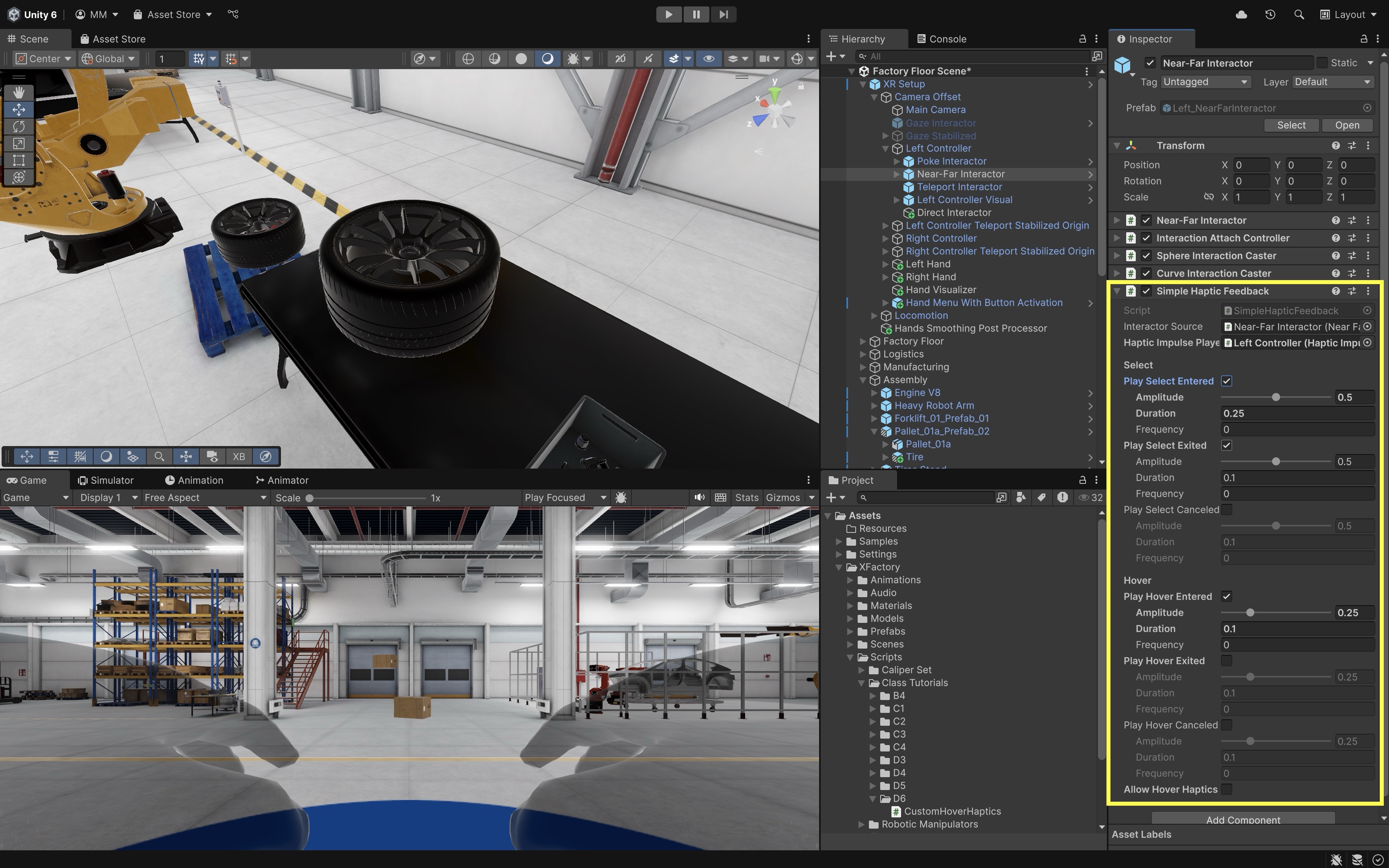

Configure Select Feedback

Now we will configure stronger haptic feedback to reinforce intentional actions, like grabbing a tire, pressing a button, or toggling a switch. In this case, the pulse confirms the user has successfully selected (grabbed) the tire in the assembly station.

- Add the `Simple Haptic Feedback` Component (if not already added):
  - In the `Hierarchy`, select the `Left Controller > Near-Far Interactor` (repeat for `Right Controller`, if needed).
  - In the `Inspector`, click `Add Component`.
  - Search for and add `Simple Haptic Feedback`.

- Enable Select Enter Feedback:
  - Locate the `Simple Haptic Feedback` component.
  - Scroll to the `Select` section.
  - Check the box for `Play Select Entered`.

- Set Select Feedback Parameters:
  - Set `Amplitude` to `0.5` to make it more pronounced than hover.
  - Set `Duration` to `0.25` to vibrate longer to signify a “confirmed” action.
  - Set `Frequency` to `0.0` unless using a custom frequency pattern.
  - This pulse acts like a tactile confirmation, perfect for grabbing the tire, flipping a safety switch, or initiating a machine cycle.

- Test Select Feedback in Scene:
  - Press `Play` (if using the Meta Link app) or deploy the app to your headset.
  - Aim at the tire, and grab it using the interactor (direct or ray).
  - You should feel a firm pulse when the `Play Select Entered` event fires, confirming successful selection.

- Add Custom Select Feedback Per Object (Optional):
  - Similar to the custom hover haptics example, you can configure different objects to give unique haptic responses on selection. Here’s a custom script you can attach directly to the object.

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;
using UnityEngine.XR.Interaction.Toolkit.Interactables;
using UnityEngine.XR.Interaction.Toolkit.Interactors;

[RequireComponent(typeof(XRBaseInteractable))]
public class CustomSelectHaptics : MonoBehaviour
{
    [Tooltip("Strength of the haptic impulse (0.0 – 1.0).")]
    public float amplitude = 0.5f;

    [Tooltip("Duration of the haptic impulse in seconds.")]
    public float duration = 0.25f;

    private void OnEnable()
    {
        // Subscribe to the select entered event when the object becomes active
        var interactable = GetComponent<XRBaseInteractable>();
        interactable.selectEntered.AddListener(OnSelectEntered);
    }

    private void OnDisable()
    {
        // Unsubscribe from the event when the object is disabled
        var interactable = GetComponent<XRBaseInteractable>();
        interactable.selectEntered.RemoveListener(OnSelectEntered);
    }

    private void OnSelectEntered(SelectEnterEventArgs args)
    {
        // Check if the interactor is an input-driven interactor (e.g., hand controller)
        if (args.interactorObject is XRBaseInputInteractor controllerInteractor)
        {
            // Trigger haptic feedback on the controller using amplitude and duration
            controllerInteractor.SendHapticImpulse(amplitude, duration);
        }
    }
}
```

- Configure the Script:
  - Select the `Tire` GameObject in the `Hierarchy`.
  - Click `Add Component`, and choose `CustomSelectHaptics.cs`.
  - Modify the `Amplitude` and `Duration` values in the `Inspector` for desired feedback.

Configure Attach Feedback

When the user successfully attaches the tire onto its platform (i.e., socket) at the tire stand in the assembly station, we want to simulate a clear tactile “click” — like snapping something firmly into place. When the socket detects the tire has been correctly placed, the controller delivers a firm, long vibration. This feedback adds a strong sense of completion to the interaction. Here is how to configure this behavior:

- Add the `Simple Haptic Feedback` Component (if not already added):
  - In the `Hierarchy`, select the `Left Controller > Near-Far Interactor` (and `Right Controller`, if applicable).
  - In the `Inspector`, click `Add Component`.
  - Add `Simple Haptic Feedback`.

- Set Attach Feedback Parameters:
  - Scroll to the `Select` section.
  - Check the box labeled `Play Select Exited`.
  - Set `Amplitude` to `0.7` for a strong, satisfying pulse.
  - Set `Duration` to `0.35`, longer to simulate the weight and resistance of snapping the tire in.
  - Set `Frequency` to `0.0` (leave at 0 unless using a specific pulse frequency).

Why this event? In socket-based interactions, the grabbable is often released (select exited) when it snaps into the socket. This makes `Select Exited` a good trigger for “attachment complete” feedback. This feedback makes it feel like the tire is firmly and correctly mounted on the stand, just like snapping a car wheel into place. Note that `Select Exited` on the controller fires on every release, including drops outside the socket.

- Test Attach Feedback in Scene:
  - Enter `Play` mode (with Link app) or deploy the app.
  - Grab the tire and place it into the socket on the tire stand.
  - Once inserted and released, the `Play Select Exited` event fires, triggering a deep, confident pulse on the controller.
  - This sensation pairs well with visual alignment cues and a click sound for multisensory feedback.
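To pair the attach pulse with a click sound, one option is to listen on the socket itself, whose `selectEntered` event fires only when an object actually seats in it. The sketch below assumes XRI 3.x namespaces and that the tire stand's socket uses an `XRSocketInteractor`; the `SocketClickSound` class and `clickClip` field are hypothetical names.

```csharp
using UnityEngine;
using UnityEngine.XR.Interaction.Toolkit;
using UnityEngine.XR.Interaction.Toolkit.Interactors;

// Hypothetical helper: plays a click when an object snaps into this socket.
[RequireComponent(typeof(XRSocketInteractor))]
[RequireComponent(typeof(AudioSource))]
public class SocketClickSound : MonoBehaviour
{
    [Tooltip("Short click or clunk clip played when the socket is filled.")]
    public AudioClip clickClip;

    private XRSocketInteractor socket;
    private AudioSource audioSource;

    private void Awake()
    {
        socket = GetComponent<XRSocketInteractor>();
        audioSource = GetComponent<AudioSource>();
    }

    private void OnEnable()
    {
        // The socket "selects" an interactable when it snaps into place.
        socket.selectEntered.AddListener(OnSocketFilled);
    }

    private void OnDisable()
    {
        socket.selectEntered.RemoveListener(OnSocketFilled);
    }

    private void OnSocketFilled(SelectEnterEventArgs args)
    {
        if (clickClip != null)
        {
            audioSource.PlayOneShot(clickClip);
        }
    }
}
```

Because the sound is tied to the socket rather than to the controller's release, it does not play when the tire is simply dropped elsewhere.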

Hand Tracking & Haptic Gloves

Hand tracking and haptic gloves in VR bring natural, intuitive interaction to virtual environments by letting users see and feel their own hands. Instead of relying solely on controllers, hand tracking captures finger and wrist motion directly, enabling gestures like grabbing, pressing, and pointing. When paired with haptic gloves such as the bHaptics TactGlove DK2, these interactions gain a tactile layer — vibrations in the fingertips and wrists simulate the sensation of touching, pressing, or holding virtual objects. Together, they transform simple gestures into convincing physical experiences, enhancing realism and user immersion in VR. This section walks through setting up hand tracking using Unity’s XR Interaction Toolkit and integrating bHaptics TactGlove DK2 for fingertip haptic feedback.

Set Up Hand Tracking

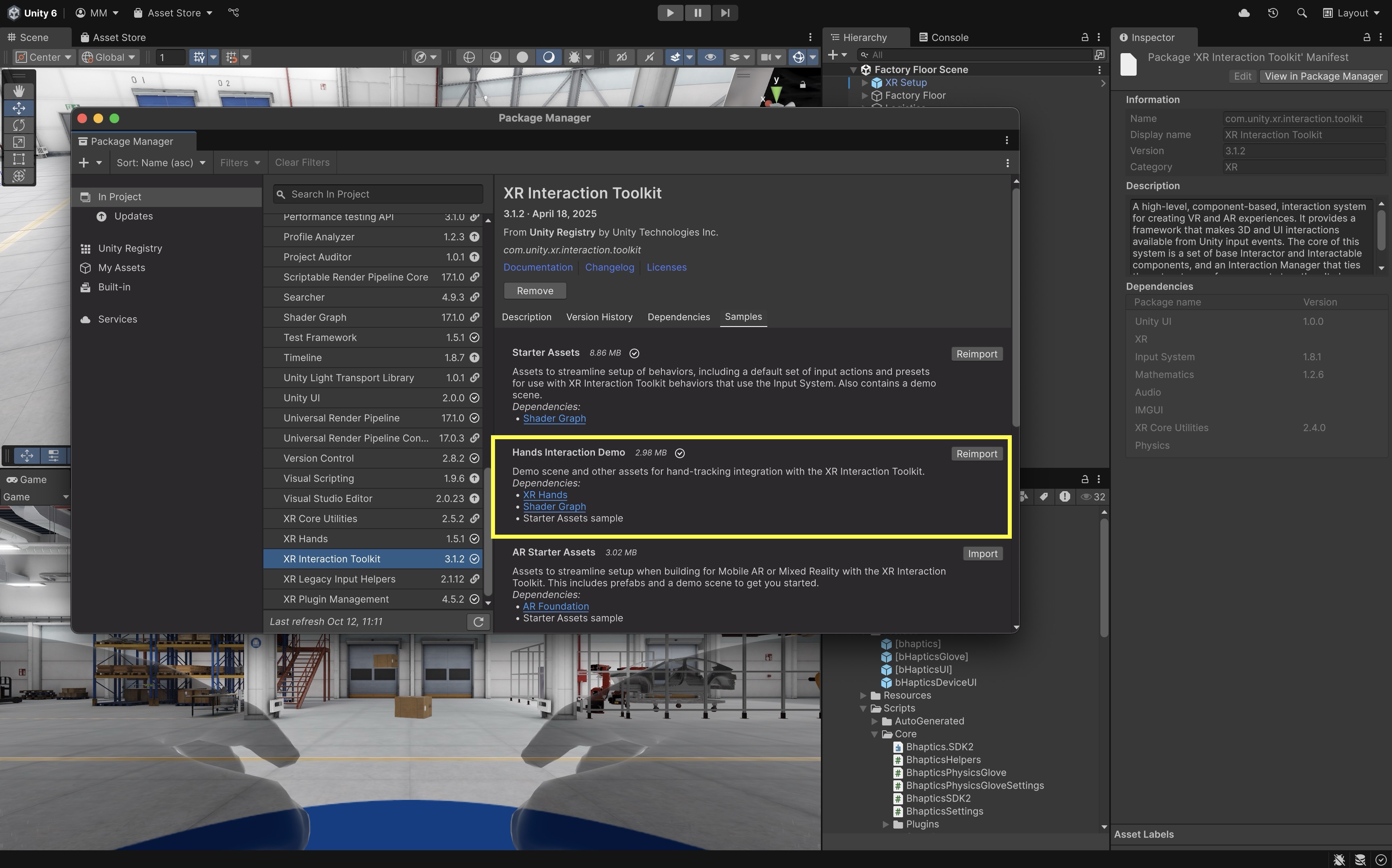

- Install Required Packages for Hand Tracking:
  - Click `Window > Package Management > Package Manager`.
  - Select `XR Interaction Toolkit` (v3.x or newer). Install if not installed already.
  - Click the `Samples` tab. Import `Hand Interaction Demo`.
  - Ensure `Input System` is installed and active (`Project Settings > Player > Active Input Handling > Both`).

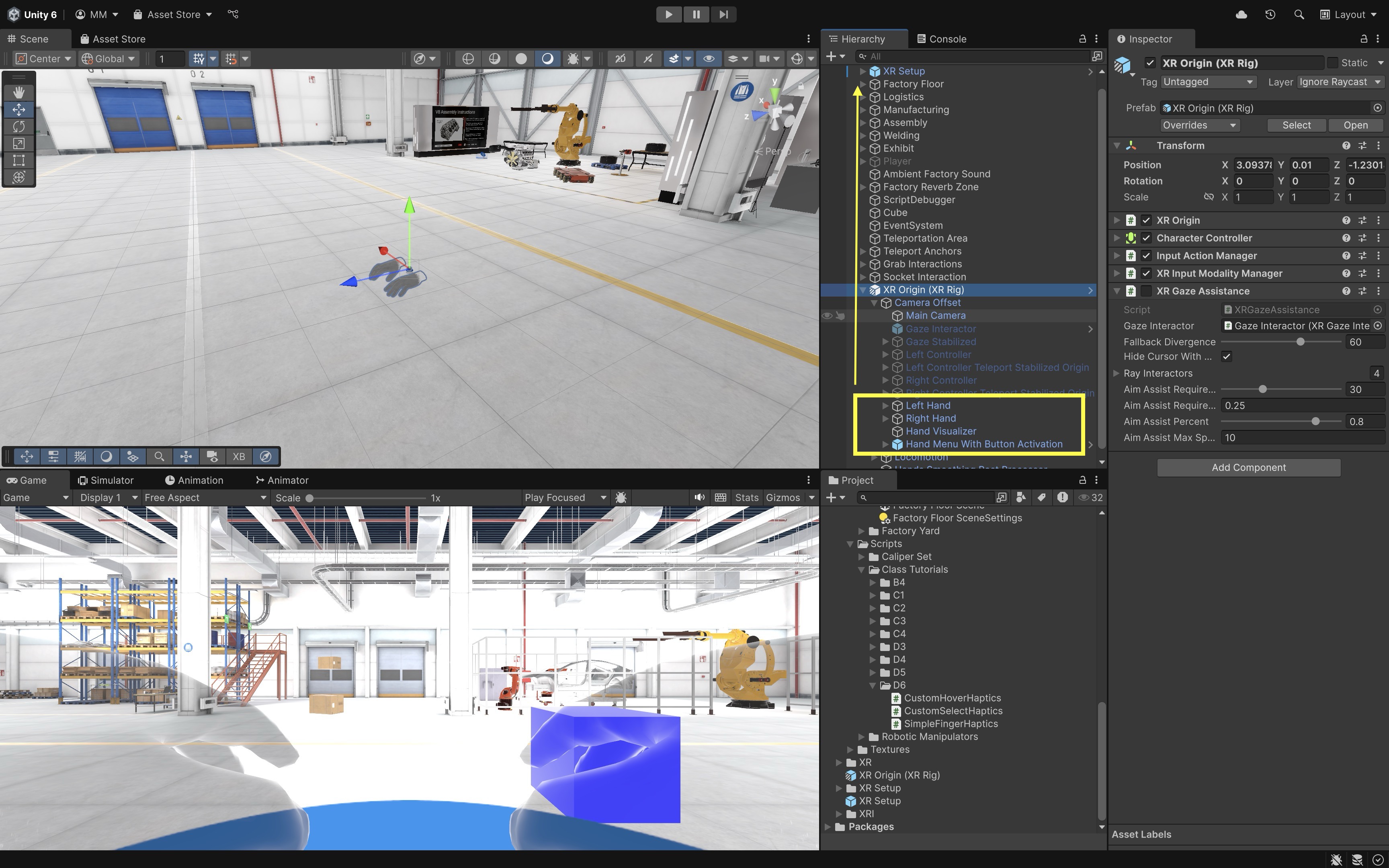

- Set Up Hand Tracking in Scene:
  - Open the demo scene from the imported `Hand Interaction Demo` sample.
  - Copy these objects from the demo rig:
    - `Left Hand`
    - `Right Hand`
    - `Hand Visualizer`
    - `Hand Menu With Button Activation`
  - Paste them under your own `XR Setup` in your scene.
  - These prefabs already include tracked-hand logic and colliders for interaction.

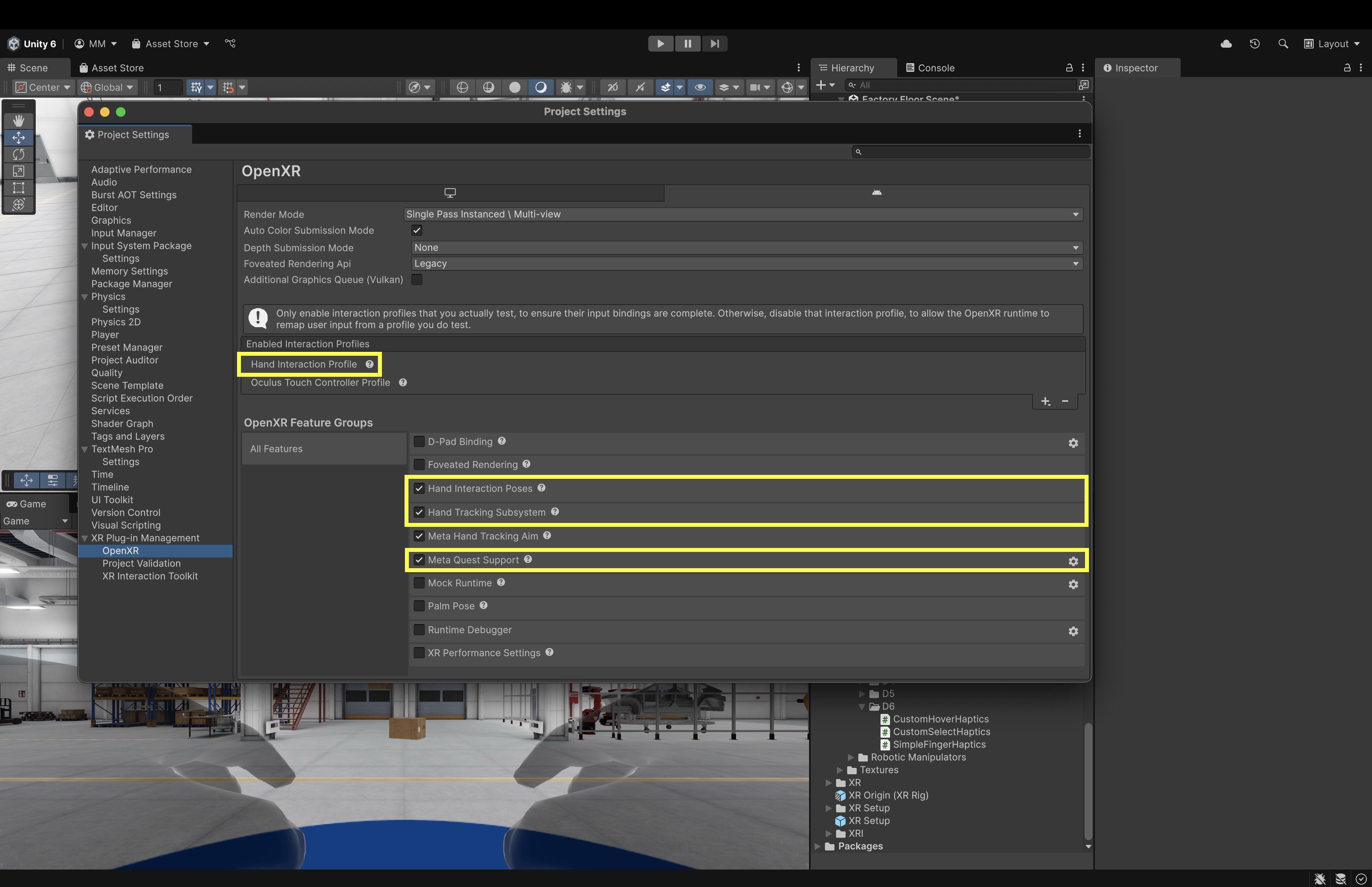

- Enable OpenXR Features:
  - Go to `Edit > Project Settings > XR Plug-in Management > OpenXR`.
  - Select your target platform (e.g., `Android` for Quest).
  - Under `Features`, enable:
    - `Hand Tracking Subsystem`
    - `Meta Quest Support` (if building for Quest)
    - `Hand Interaction Profile`
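After enabling these features, a quick runtime check can confirm that hand tracking is actually available. The sketch below assumes the XR Hands package (`com.unity.xr.hands`) is installed, which provides `XRHandSubsystem`; the `HandTrackingCheck` class is a hypothetical diagnostic, and the subsystem may not yet be running on the very first frame on some devices.

```csharp
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.XR.Hands;

// Hypothetical diagnostic: logs whether a hand-tracking subsystem is running.
public class HandTrackingCheck : MonoBehaviour
{
    private static readonly List<XRHandSubsystem> Subsystems = new List<XRHandSubsystem>();

    private void Start()
    {
        // Collect every hand subsystem the active XR loader has created.
        SubsystemManager.GetSubsystems(Subsystems);

        foreach (XRHandSubsystem subsystem in Subsystems)
        {
            if (subsystem.running)
            {
                Debug.Log("Hand tracking subsystem is running.");
                return;
            }
        }

        Debug.LogWarning("No running hand tracking subsystem found. " +
                         "Check the OpenXR feature settings for the target platform.");
    }
}
```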

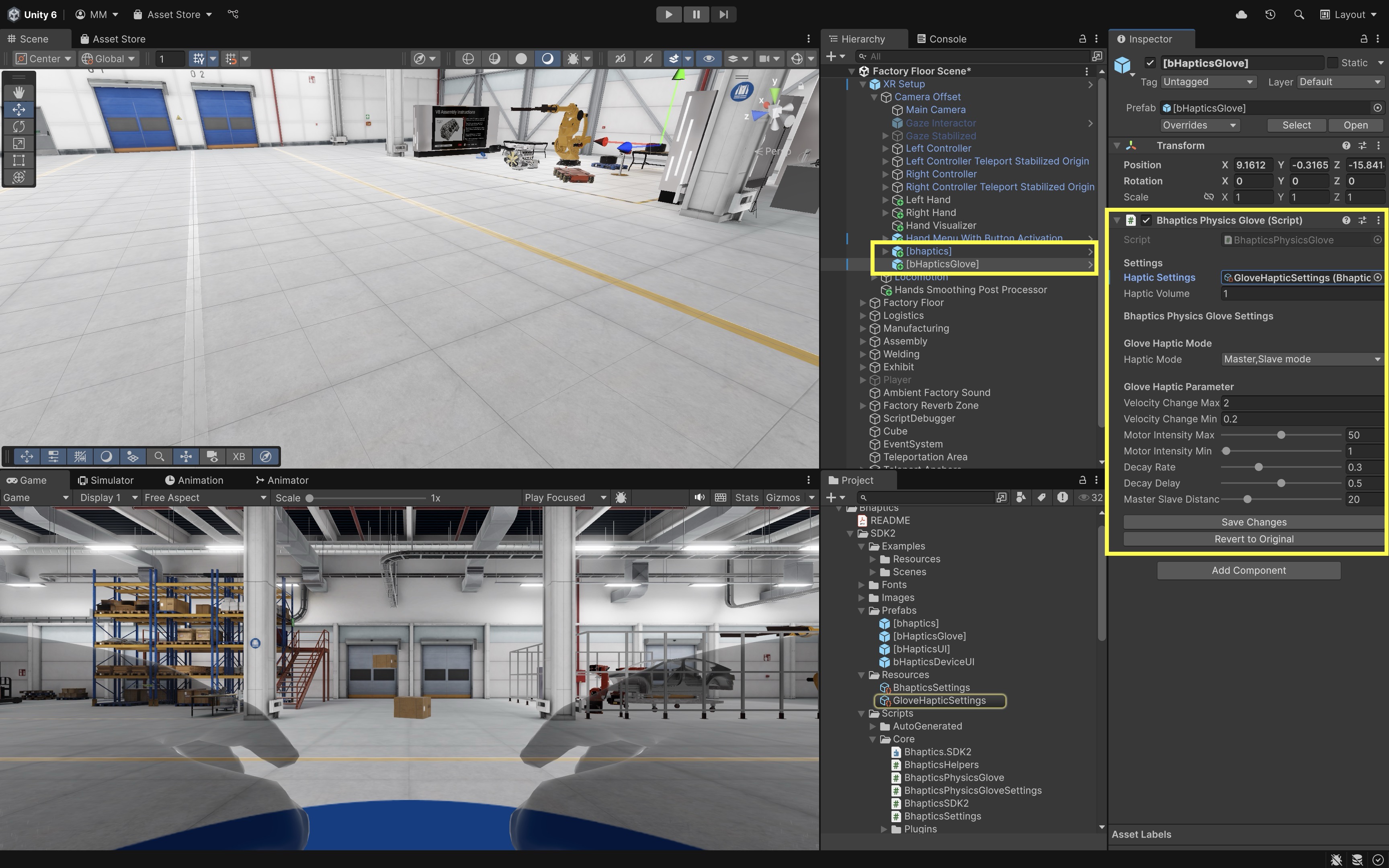

Configure bHaptics SDK

- Import the bHaptics Haptic Plugin:
  - Get the bHaptics Haptic Plugin from the Unity Asset Store.
  - Go to `Window > Package Management > Package Manager > My Assets`.
  - Import the `bHaptics Unity SDK` (v2.3.0 or newer).
  - You should now have: `Assets/Bhaptics/SDK2`.

- Set up the Gloves Haptic Settings:
  - In the `Project` window, open `Assets/Bhaptics/SDK2/Prefabs/`.
  - Drag the following prefabs into your scene:
    - `[bhaptics]`
    - `[bHapticsGlove]`
  - Select `[bHapticsGlove]` in the `Hierarchy`.
  - Click `Create New GloveHapticSettings` to auto-generate settings.
  - Keep the default parameters for now; they work well for most setups.

Review the bHaptics documentation to learn more about the bHaptics Physics Glove SDK.

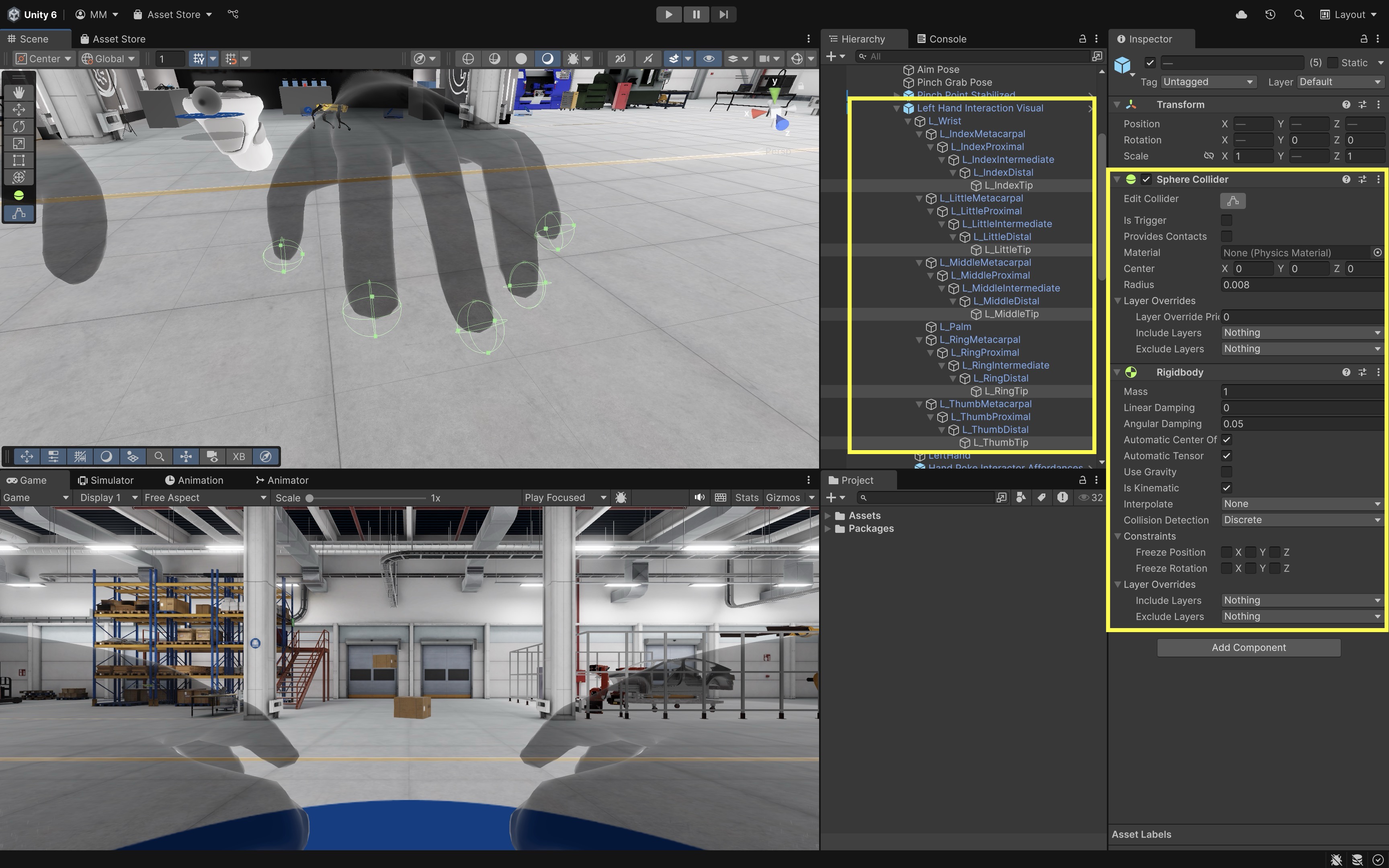

Add Physics to Fingertips

- Locate Fingertip Transforms:
  - Inside your hand hierarchy (i.e., `RightHand Interaction Visual` and `LeftHand Interaction Visual`), find:
    - `R_ThumbTip`, `R_IndexTip`, `R_MiddleTip`, `R_RingTip`, `R_LittleTip`
    - `L_ThumbTip`, `L_IndexTip`, `L_MiddleTip`, `L_RingTip`, `L_LittleTip`

- Add Collider and Rigidbody to Each Fingertip:
  - Select the fingertip GameObjects.
  - Click `Add Component > Sphere Collider`.
  - Set `Radius` to `0.005 – 0.01`.
  - Check `Is Trigger`. The trigger-based `SimpleFingerHaptics` script in the next step relies on `OnTriggerEnter`, `OnTriggerStay`, and `OnTriggerExit`, which fire only when at least one collider in the pair is a trigger.
  - Click `Add Component > Rigidbody`.
  - Uncheck `Use Gravity`.
  - Check `Is Kinematic`.
  - Set `Collision Detection` to `Continuous`.

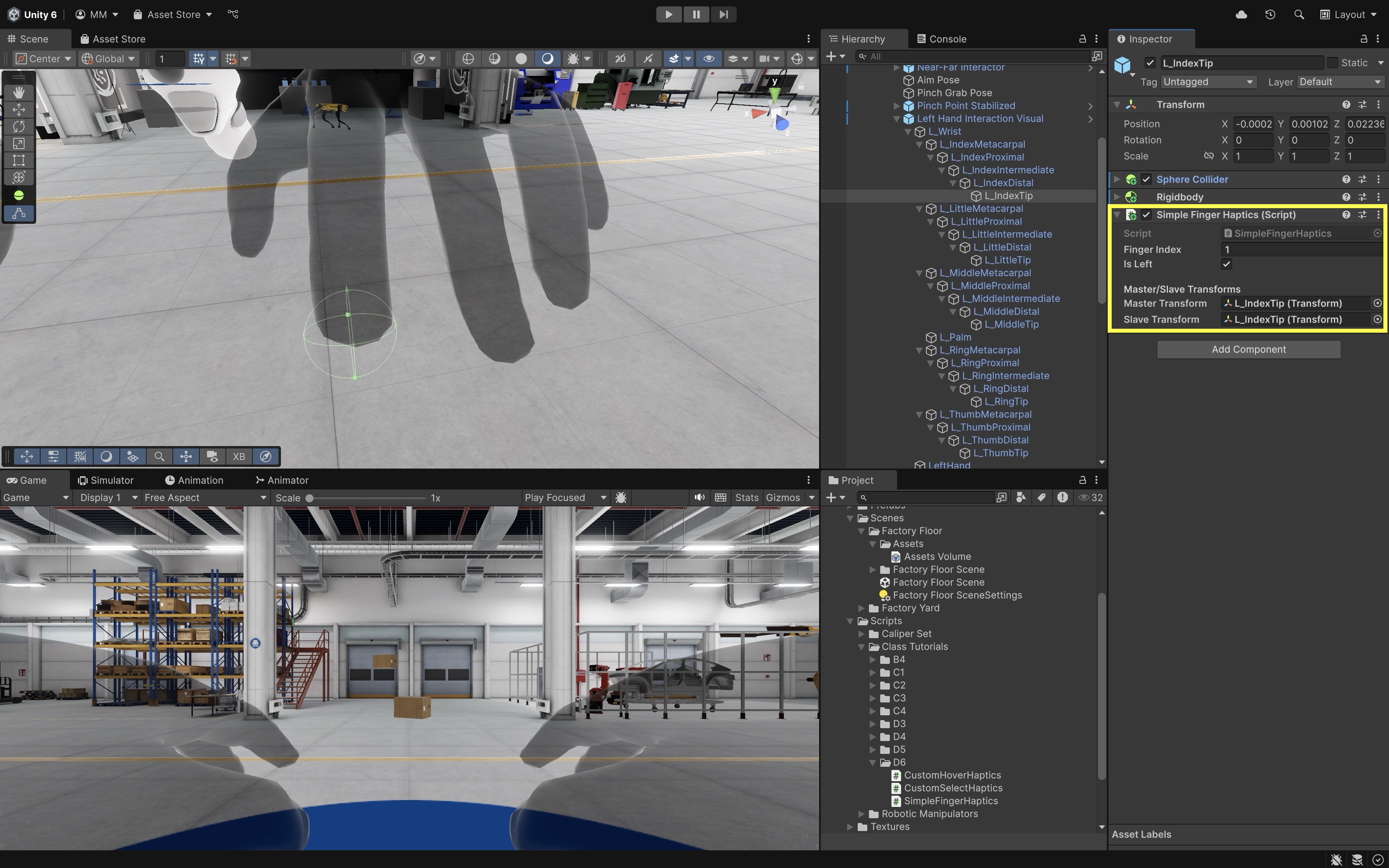

- Add the Haptic Script:
  - Create a new script named `SimpleFingerHaptics.cs` in your project.
  - Paste this code:

```csharp
// SimpleFingerHaptics.cs (Trigger-based)
using UnityEngine;
using Bhaptics.SDK2;        // for PositionType
using Bhaptics.SDK2.Glove;  // for BhapticsPhysicsGlove

/// <summary>
/// Trigger-based fingertip haptics for bHaptics TactGlove.
/// Set fingertip Sphere Colliders to IsTrigger = true.
/// At least one collider in the pair must have a Rigidbody.
/// </summary>
public class SimpleFingerHaptics : MonoBehaviour
{
    [Tooltip("0=Thumb, 1=Index, 2=Middle, 3=Ring, 4=Little, 5=Wrist")]
    public int fingerIndex;

    [Tooltip("Enable for left glove; disable for right.")]
    public bool isLeft;

    [Header("Master/Slave Transforms")]
    [Tooltip("Tracked fingertip (visual).")]
    public Transform masterTransform;

    [Tooltip("Physics fingertip (trigger collider).")]
    public Transform slaveTransform;

    [Header("Optional")]
    [Tooltip("Default impact vector for enter haptic. " +
             "Increase for stronger tap.")]
    public Vector3 defaultEnterVector = new Vector3(0f, 0f, 1f);

    // Determines left/right glove side for SDK calls.
    // => is a C# expression-bodied property.
    private PositionType Side =>
        isLeft ? PositionType.GloveL : PositionType.GloveR;

    private void OnTriggerEnter(Collider other)
    {
        if (BhapticsPhysicsGlove.Instance == null) return;

        // Triggers lack velocity; use default vector for intensity.
        BhapticsPhysicsGlove.Instance.SendEnterHaptic(
            Side, fingerIndex, defaultEnterVector);
    }

    private void OnTriggerStay(Collider other)
    {
        if (BhapticsPhysicsGlove.Instance == null) return;
        if (!masterTransform || !slaveTransform) return;

        // While overlapping, sustain haptic based on offset.
        BhapticsPhysicsGlove.Instance.SendStayHaptic(
            Side, fingerIndex, slaveTransform, masterTransform);
    }

    private void OnTriggerExit(Collider other)
    {
        if (BhapticsPhysicsGlove.Instance == null) return;

        // End of contact → exit haptic.
        BhapticsPhysicsGlove.Instance.SendExitHaptic(
            Side, fingerIndex);
    }
}
```

- Configure Each Fingertip in the Inspector:
  - `fingerIndex`: Assign `0 = Thumb`, `1 = Index`, `2 = Middle`, `3 = Ring`, `4 = Little`, `5 = Wrist` (optional).
  - `isLeft`: Check for left-hand fingertips. Uncheck for right-hand fingertips.
  - `masterTransform`: Drag in the tracked fingertip transform (the one following hand tracking). Usually the same tip under your `Hand Interaction Visual`.
  - `slaveTransform`: Drag in the fingertip GameObject that has this script and collider attached (often itself).
  - In most XRI Hand Interaction setups, you can assign the same GameObject for both `masterTransform` and `slaveTransform` if you’re not using a physics proxy hand.

- Deploy and Test:
  - Build and deploy your project to the headset.
  - On the headset, make sure your TactGlove DK2 devices are paired and connected through the bHaptics Player app or system Bluetooth settings.
  - Once the gloves are paired, launch your Unity app to test fingertip collisions and haptic feedback in VR.

- Fine-Tuning Haptic Sensation:
  - Open your `GloveHapticSettings` asset (attached to `[bHapticsGlove]`).
  - Adjust parameters as needed.
    - `motorIntensityMin/Max`: Controls vibration strength.
    - `velocityChangeMin/Max`: Tunes how sensitive it is to impact speed.
    - `decayRate` and `decayDelay`: Control how long sustained contact persists.
  - Resize fingertip colliders (e.g., radius `0.006–0.01`) to optimize detection.

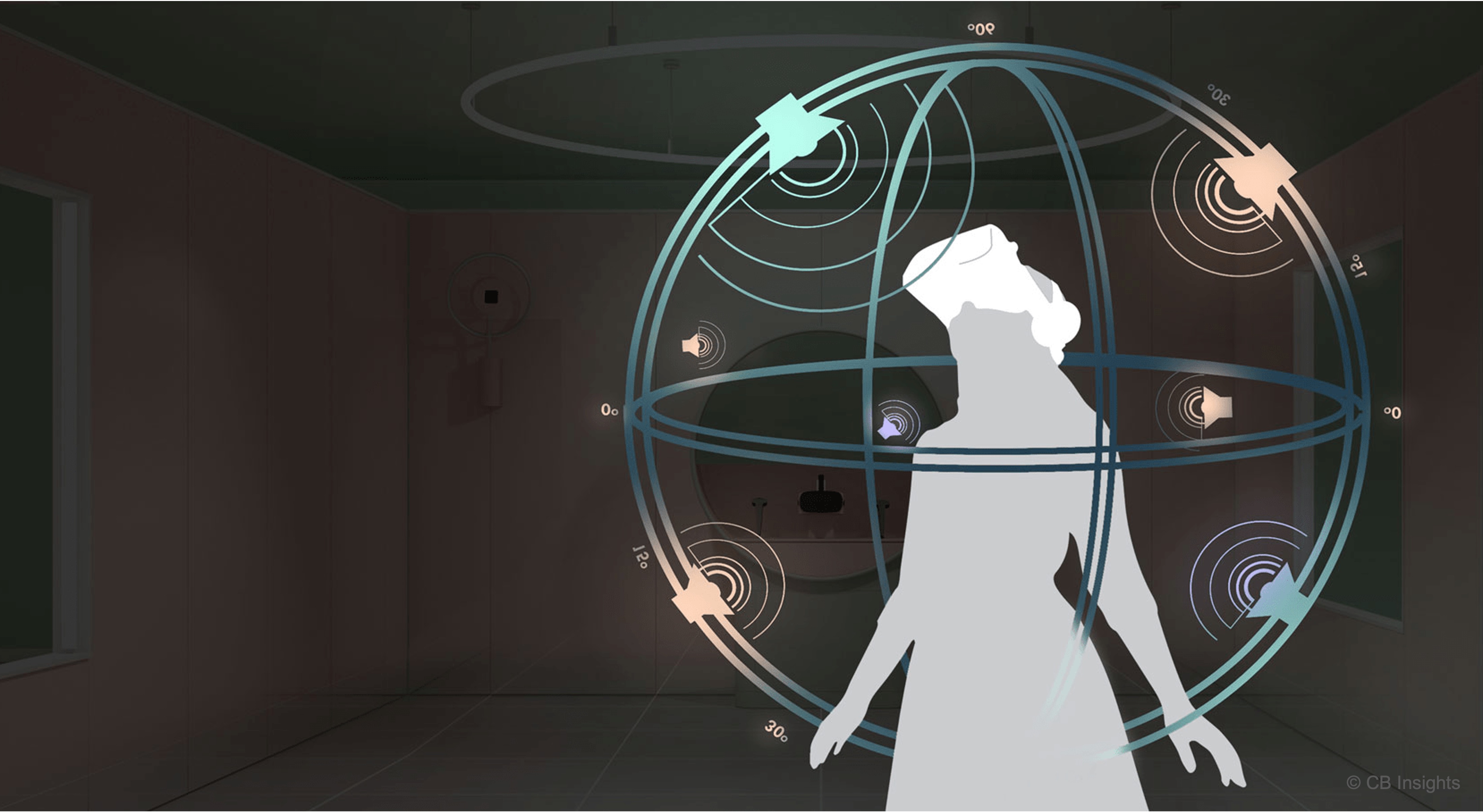

Audio in VR

Audio in VR plays a crucial role in creating immersive and realistic experiences by simulating 3D spatial sound that corresponds to the user’s environment and physical movements. It enhances presence, emotional engagement, and user understanding by allowing users to perceive direction, distance, and material characteristics of sound sources, just as they would in a real-world engineering environment. Key concepts include:

- Audio Feedback: Audio feedback uses sound cues to indicate interactions, state changes, or successful task completions. These auditory signals can be subtle (e.g., soft beeps for UI interaction) or mechanical (e.g., metallic clunks when machinery operates). In XFactory, placing a box into a rack at the logistics station can trigger a satisfying “clunk” sound, reinforcing task completion.

- Spatial Audio: This technique adjusts sound characteristics (such as stereo balance, volume, and filtering) based on the user’s position and orientation. It provides directional awareness, helping users interpret where sounds are coming from and how far away they are. In the manufacturing station of XFactory, the CNC machines should sound louder as users walk toward them and quieter as they move away.

- Audio Events: Audio events are specific triggers that play sound effects during interactions. These include object grabs, button presses, or environmental triggers (like a door opening). In the assembly station, interacting with the large display screen should trigger a crisp UI confirmation tone.

Principles

- Immersion & Presence: Craft audio that aligns seamlessly with the visual and interactive world. Every machine, tool, or environment element should emit appropriate sounds, whether it’s the steady, low-pitched hum of a CNC lathe or the distant echo of footsteps in a factory hall. Even subtle layers of ambient noise (airflow, distant machinery, electrical buzz) reinforce spatial context and keep users “in” the scene.

- Spatial & Directional Cues: Use accurate 3D audio positioning so users can quickly pinpoint sound sources. In a multi-station workshop, they should hear a welding arc crackle to their left or a conveyor belt whirring behind them. Consistent volume attenuation and HRTF-based panning help operators quickly locate equipment or hazards without breaking visual focus.

- Clarity & Consistency: Define a clear “audio vocabulary” for key actions and system states. Reuse the same short beep for every successful control-panel selection or the same alert tone for safety warnings across all modules. Predictable, unambiguous sounds build muscle memory and reduce cognitive load, so users instantly recognize outcomes and system responses.

- Layered Soundscapes: Build depth by stacking multiple audio layers:
  - Ambient Base: Continuous environmental loops (air handling units, distant machinery).
  - Functional Mid-Layer: Operational sounds tied to active components (motors, hydraulics).
  - Interactive Top-Layer: Event-driven cues (button clicks, error tones).

  By blending these layers thoughtfully (mixing quieter ambient tracks under louder interactive cues), you create a rich, believable soundscape that enhances situational awareness without masking critical feedback.
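One way to set up the three layers is to give each its own `AudioSource` and keep the ambient layer quieter than the interactive one. The sketch below is only illustrative: the `LayeredSoundscape` class, its field names, and the volume values are assumptions to tune by ear.

```csharp
using UnityEngine;

// Hypothetical mixer for the ambient, functional, and interactive layers.
public class LayeredSoundscape : MonoBehaviour
{
    [Header("One AudioSource per layer")]
    public AudioSource ambientBase;      // e.g., air handling loop
    public AudioSource functionalMid;    // e.g., motor or hydraulics loop
    public AudioSource interactiveTop;   // one-shot cues such as clicks

    [Range(0f, 1f)] public float ambientVolume = 0.3f;      // assumed value
    [Range(0f, 1f)] public float functionalVolume = 0.6f;   // assumed value
    [Range(0f, 1f)] public float interactiveVolume = 1.0f;  // assumed value

    private void Start()
    {
        StartLoop(ambientBase, ambientVolume);
        StartLoop(functionalMid, functionalVolume);

        if (interactiveTop != null)
        {
            interactiveTop.volume = interactiveVolume;
        }
    }

    // Plays an event-driven cue on top of the looping layers.
    public void PlayCue(AudioClip cue)
    {
        if (interactiveTop != null && cue != null)
        {
            interactiveTop.PlayOneShot(cue);
        }
    }

    // Sets the volume, enables looping, and starts the source if it is idle.
    private static void StartLoop(AudioSource source, float volume)
    {
        if (source == null) return;

        source.volume = volume;
        source.loop = true;
        if (!source.isPlaying)
        {
            source.Play();
        }
    }
}
```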

Event-Driven Audio

In VR, event-driven audio feedback is triggered in response to specific game or application events, such as footsteps, machinery actions, UI interaction, or object manipulation. Because the sound responds to user behavior in real time, the environment feels dynamic and interactive. Let’s demonstrate how to trigger different sounds when a large box is dropped onto various surfaces inside the logistics station of XFactory. We will use XR Interaction Toolkit for grabbing and dropping, `AudioSource` for playing sounds, and physics-based collision detection for triggering different clips.

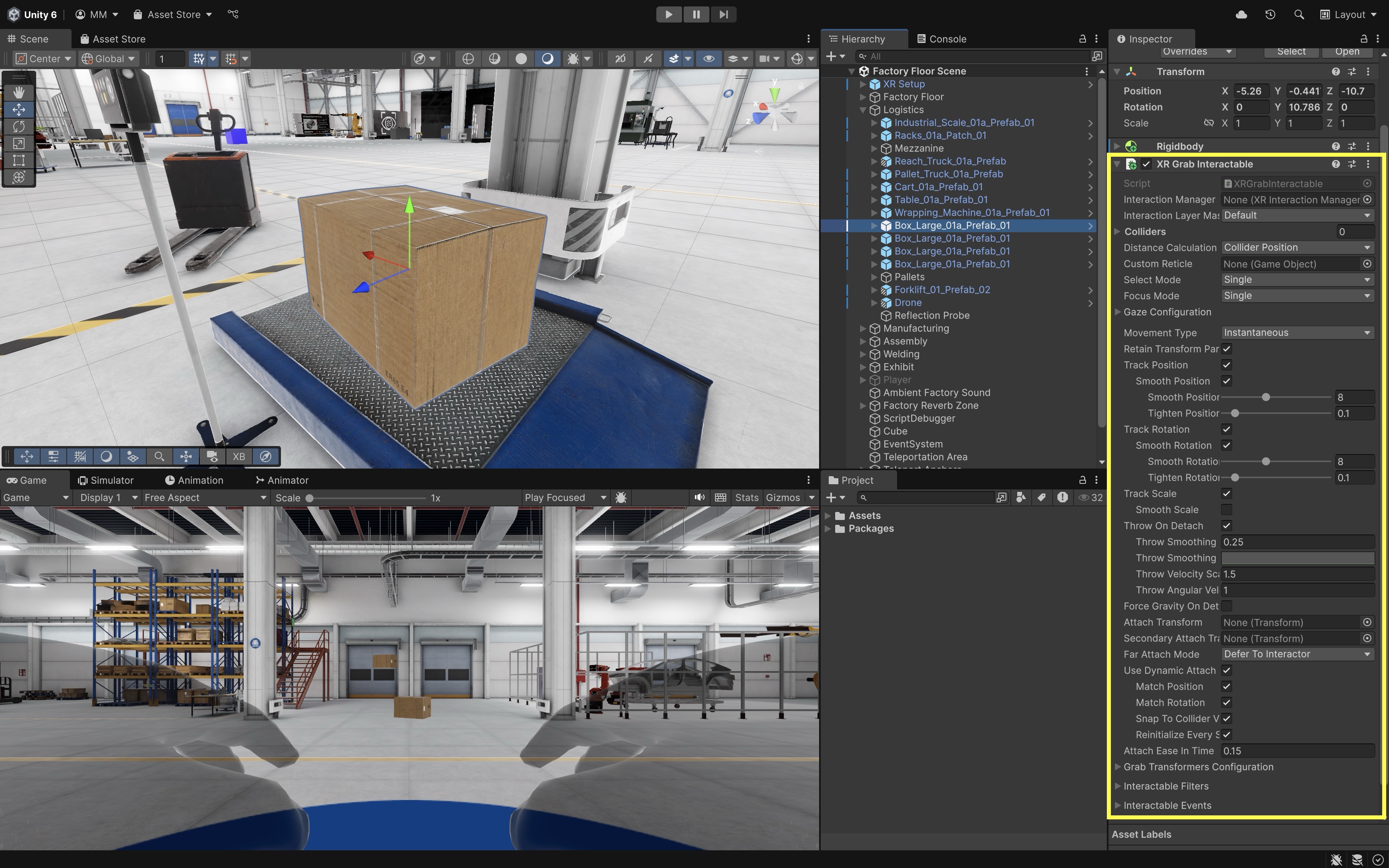

- Prepare Your Environment:
  - Inside the logistics station, create or place a grabbable box (e.g., `Box_Large_01a_Prefab_01`).
  - Make sure the box has a `Rigidbody`, a `Collider`, and an `XR Grab Interactable`.
  - Position the box next to the scale (`Industrial_Scale_01a`) and a wooden pallet (e.g., `Pallet_02_Prefab_02`).
  - Confirm the scale, pallet, and concrete floor have appropriate colliders.

- Create and Configure an Audio Trigger Script:

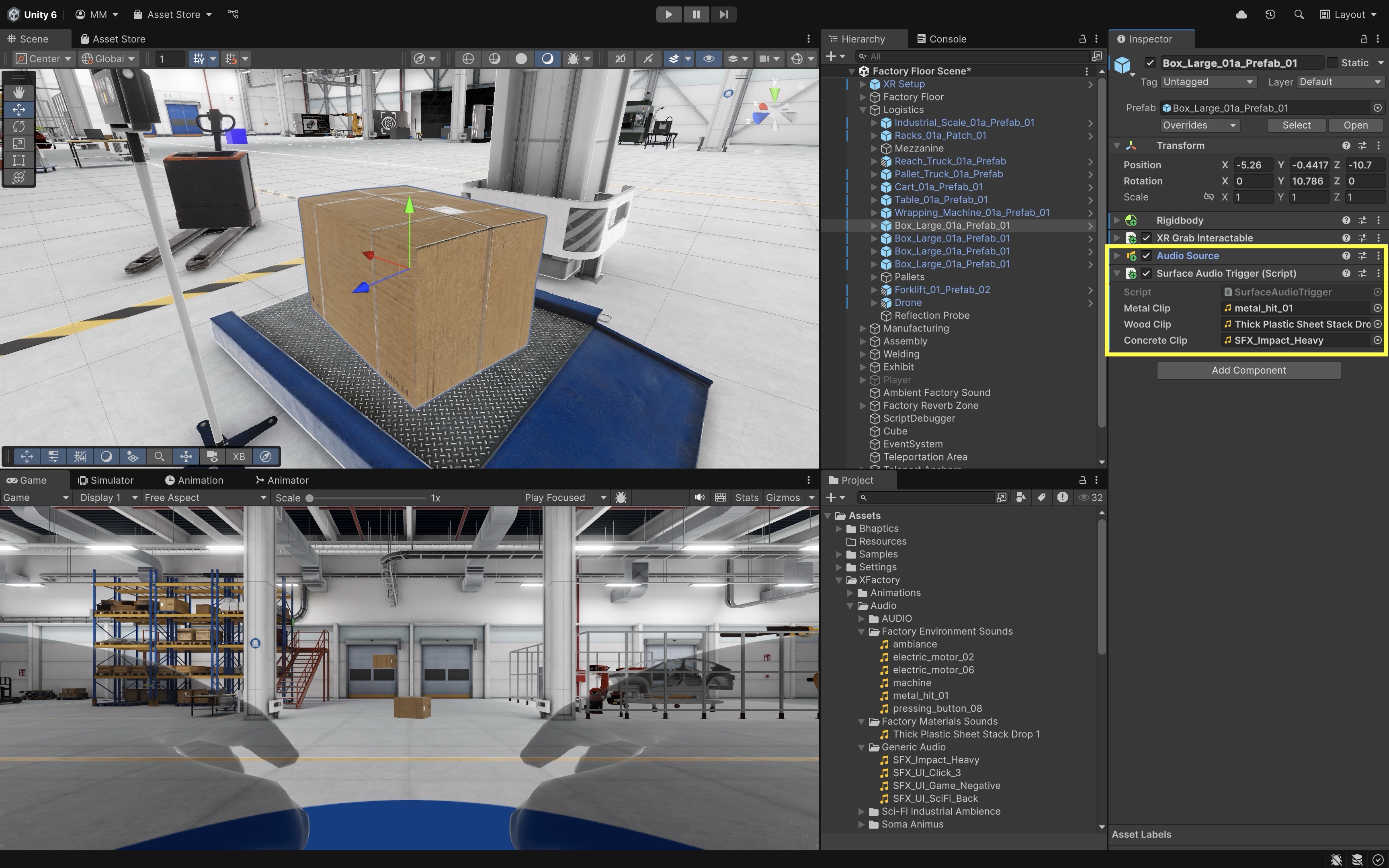

- Make sure your box prefab (

Box_Large_01a_Prefab_01) has anAudioSourcecomponent. If not, the script will automatically add one at runtime, but it’s good practice to add and configure it in advance if you want to control volume, spatial blend, or other settings. - Create and attach the following script to your box prefab:

using UnityEngine; public class SurfaceAudioTrigger : MonoBehaviour { public AudioClip metalClip; public AudioClip woodClip; public AudioClip concreteClip; private AudioSource audioSource; void Start() { audioSource = GetComponent<AudioSource>(); if (audioSource == null) { audioSource = gameObject.AddComponent<AudioSource>(); } } private void OnCollisionEnter(Collision collision) { string tag = collision.gameObject.tag; switch (tag) { case "Metal": PlaySound(metalClip); break; case "Wood": PlaySound(woodClip); break; case "Concrete": PlaySound(concreteClip); break; } } void PlaySound(AudioClip clip) { if (clip != null) { audioSource.PlayOneShot(clip); } } } - Make sure your box prefab (

- Configure the Script:
  - Select the box GameObject in the `Hierarchy`.
  - In the `Inspector`, find the `Surface Audio Trigger` component.
  - Drag appropriate audio clips into the three fields: `Metal Clip`, `Wood Clip`, and `Concrete Clip`.

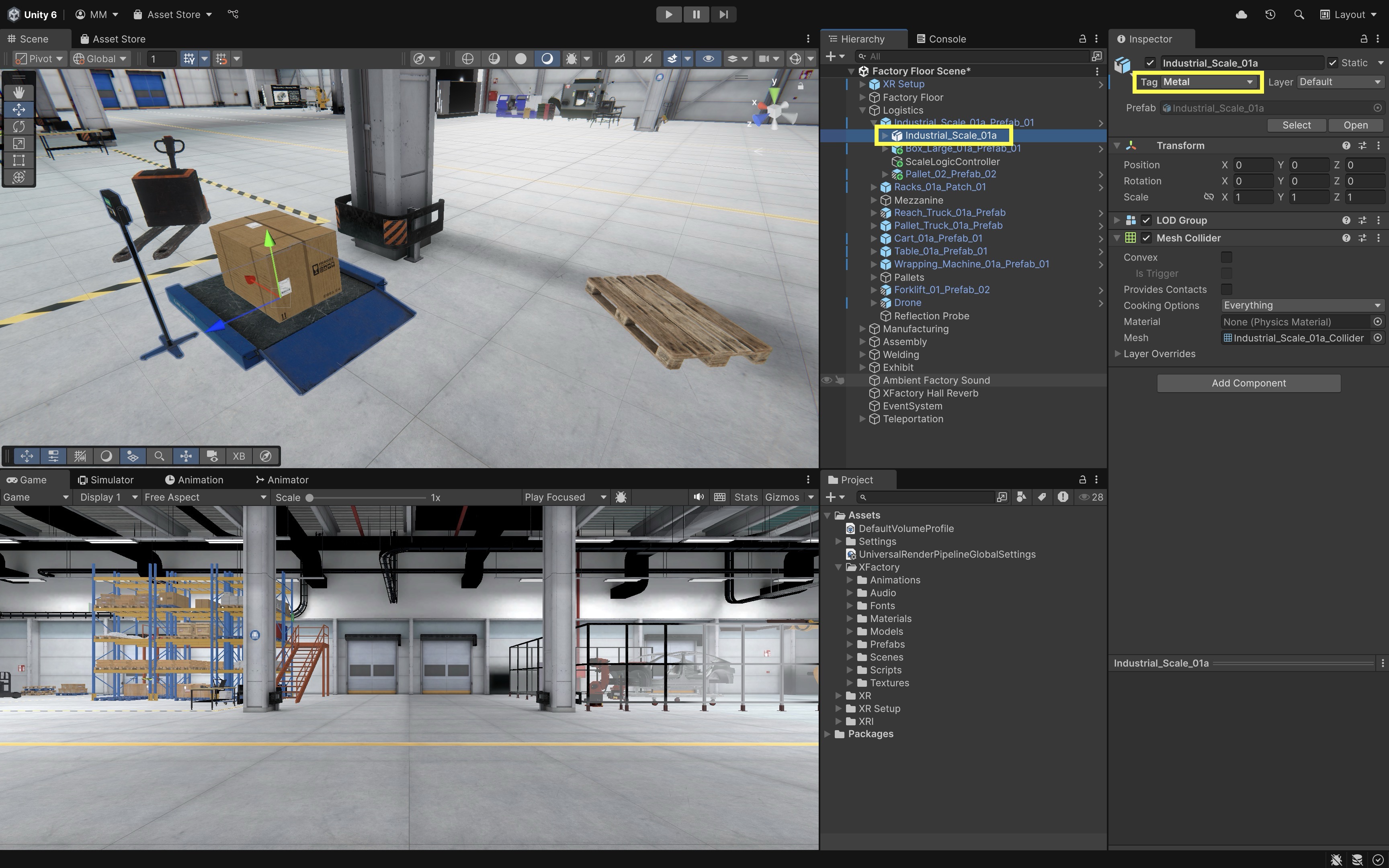

- Tag the Surfaces:
  - Go to `Tags & Layers > Add Tag` and add `Metal`, `Wood`, and `Concrete`.
  - Tag the scale `Metal`.
  - Tag the pallet `Wood`.
  - Tag the floor `Concrete`.

- Test the Behavior:

- Play the scene (using the Link app) or deploy it to your VR headset.

- Pick up the box using the controller.

- Drop it on each surface.

- Listen for context-aware impact audio feedback.

This setup showcases dynamic feedback based on user action and environment context, tightly integrating VR interaction and audio design. You can expand this system to other factory objects, such as different drop sounds for metal parts in the production station, or warning buzzers if items are dropped in restricted zones.
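One possible extension, in the spirit of proportional feedback, is to scale the impact volume by how hard the object lands. The sketch below uses `Collision.relativeVelocity` and the volume-scale overload of `AudioSource.PlayOneShot`; the `ImpactVolumeAudio` class and its threshold values are hypothetical and would need tuning for each object.

```csharp
using UnityEngine;

// Hypothetical variant: louder impact sounds for harder drops.
[RequireComponent(typeof(AudioSource))]
public class ImpactVolumeAudio : MonoBehaviour
{
    public AudioClip impactClip;

    [Tooltip("Impact speed (m/s) at which the clip plays at full volume.")]
    public float fullVolumeSpeed = 5f;   // assumed value

    [Tooltip("Ignore impacts slower than this (m/s), such as resting contact.")]
    public float minSpeed = 0.2f;        // assumed value

    private AudioSource audioSource;

    private void Awake()
    {
        audioSource = GetComponent<AudioSource>();
    }

    private void OnCollisionEnter(Collision collision)
    {
        // Relative speed of the two bodies at the moment of impact.
        float speed = collision.relativeVelocity.magnitude;
        if (impactClip == null || speed < minSpeed) return;

        // Map speed linearly to a 0..1 volume scale, capped at full volume.
        float volume = Mathf.Clamp01(speed / fullVolumeSpeed);
        audioSource.PlayOneShot(impactClip, volume);
    }
}
```

A gentle placement then produces a quiet tap, while a drop from shoulder height produces a full-volume thud.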

3D Spatial Audio

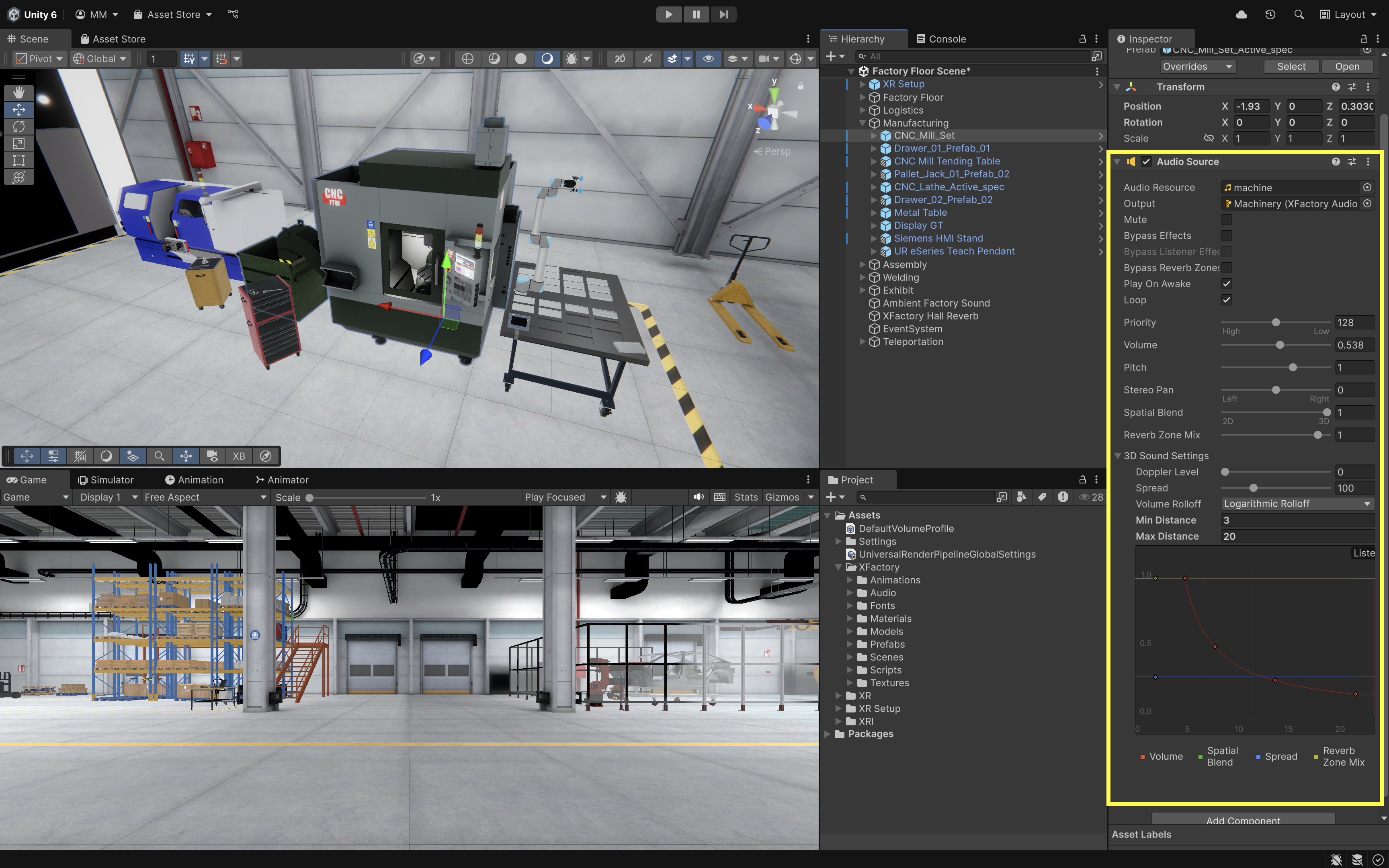

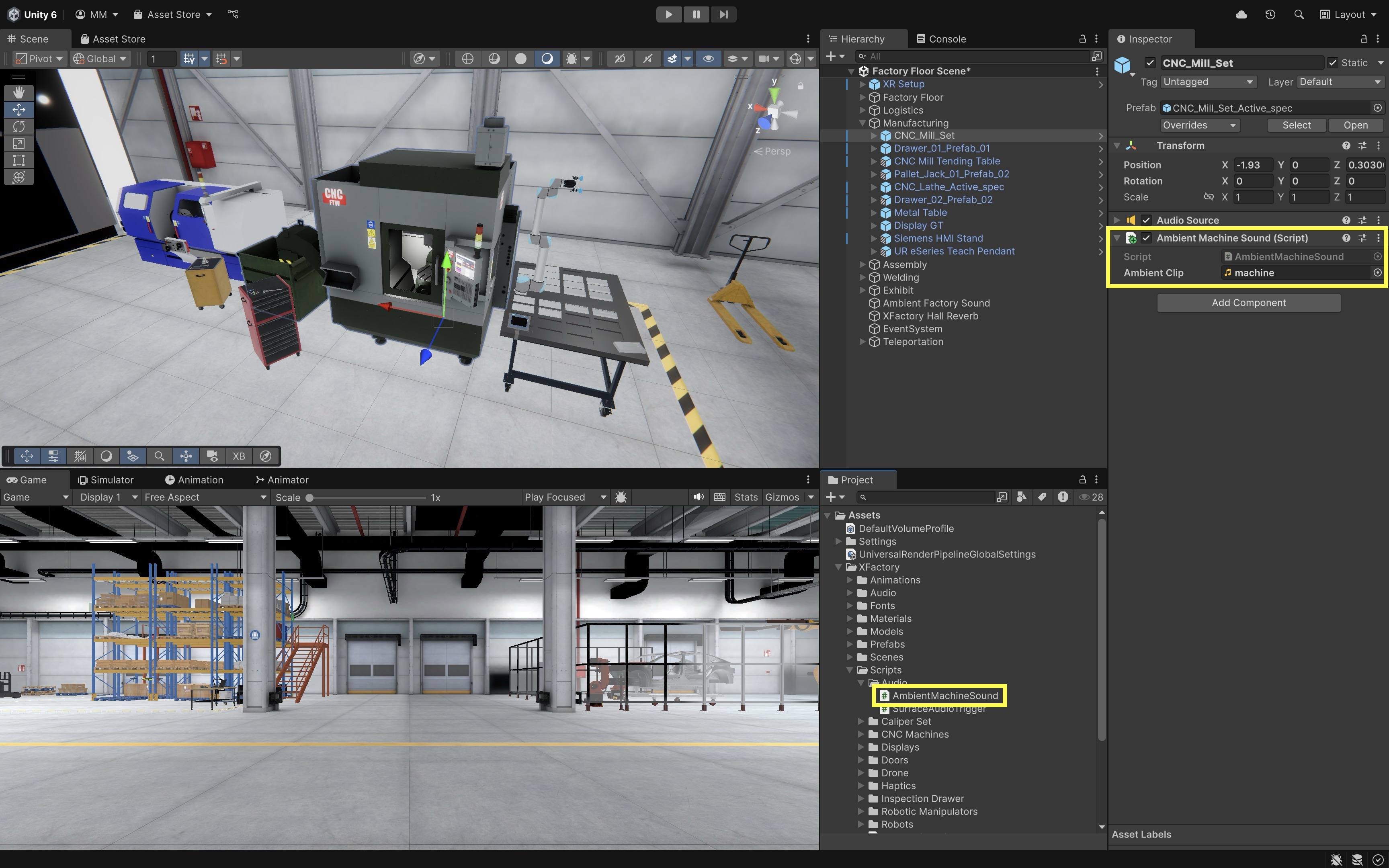

3D spatial audio simulates sound sources in a three-dimensional space, allowing users to perceive direction, distance, and environmental effects. This is especially useful in immersive VR environments like your XFactory, where spatial context enhances realism and user awareness. Let’s enable ambient machine sound for the CNC_Mill_Set GameObject in the manufacturing station using Unity’s 3D audio features.

- Prepare the CNC Machine:

- In the `Hierarchy`, locate `CNC_Mill_Set`.

- Confirm it already has an `Audio Source` component (or add one if not).

- Configure the `Audio Source`:

- Go to the `Audio Source` component.

- `Audio Resource`: Assign a looping ambient sound (e.g., `machine.ogg`) that represents the machine hum.

- `Play On Awake`: Enable this so the sound starts automatically when the scene loads.

- `Loop`: Enable this to keep the sound playing continuously.

- `Spatial Blend`: Set to `1.0` to make the sound fully 3D and spatially aware.

- `Min Distance`: Set to `3` meters. The sound will be at full volume within this range.

- `Max Distance`: Set to `20` meters. The sound attenuates between the min and max distances; how it behaves at and beyond the max distance depends on the `Volume Rolloff` mode.

- Optionally, adjust `Doppler Level` to simulate pitch shift when the user or object moves, or `Spread` to control stereo width for large machines.

- Automate Playback and 3D Settings (Optional):

- Attach the script below to the `CNC_Mill_Set` GameObject.

- Assign an ambient clip such as `machine.ogg` in the `Inspector`.

```csharp
using UnityEngine;

// Plays a looping ambient sound (e.g., CNC machine hum) in the environment.
[RequireComponent(typeof(AudioSource))]
public class AmbientMachineSound : MonoBehaviour
{
    [Tooltip("The looping ambient sound clip to play (e.g., CNC hum)")]
    public AudioClip ambientClip;

    private AudioSource audioSource;

    void Start()
    {
        // RequireComponent guarantees an AudioSource is present.
        audioSource = GetComponent<AudioSource>();

        // Configure the AudioSource for spatial audio playback.
        audioSource.clip = ambientClip;
        audioSource.loop = true;
        audioSource.spatialBlend = 1.0f; // Fully 3D
        audioSource.minDistance = 3f;    // Full volume within 3 meters
        audioSource.maxDistance = 20f;   // Far end of the attenuation range

        // Start playback explicitly; setting playOnAwake here would be too late to matter.
        if (ambientClip != null)
        {
            audioSource.Play();
        }
    }
}
```

The script configures an `AudioSource` to loop a 3D ambient clip, sets the near and far attenuation distances, and starts playback automatically. The sound attenuates with distance, which makes the script a good fit for prefabs or multiple ambient machines: each one gets consistent, realistic audio with minimal setup.

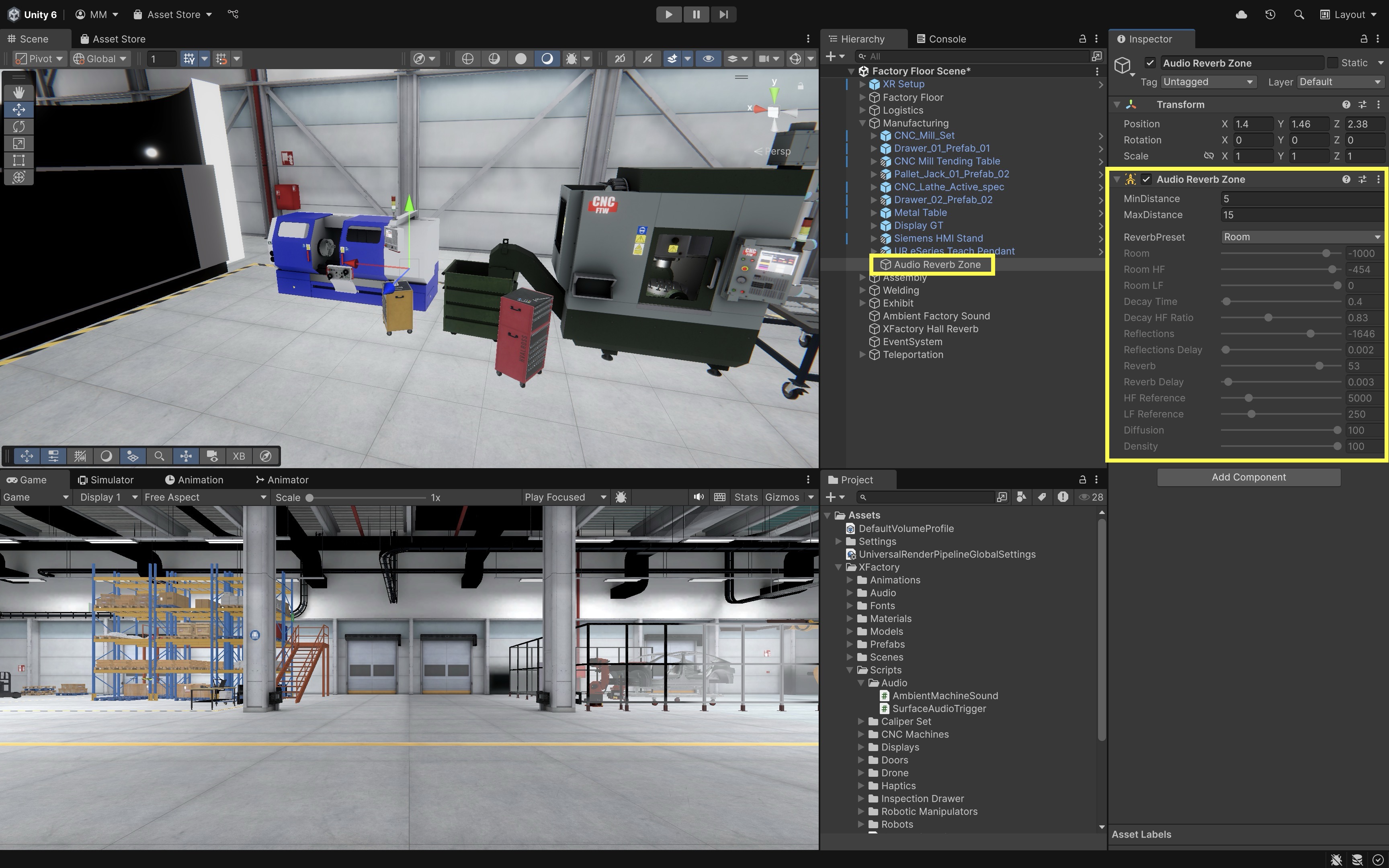
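The optional `Doppler Level` and `Spread` settings, along with the rolloff mode, can also be set from code. The following is a minimal sketch under assumptions: the class name `AmbientMachineTuning` and the default values are illustrative rather than taken from the XFactory project. The properties `dopplerLevel`, `spread`, and `rolloffMode` are part of Unity's `AudioSource` API.

```csharp
using UnityEngine;

// Sketch: applies optional 3D tuning to the AudioSource on the same GameObject.
// Class name and default values are assumptions chosen for illustration.
[RequireComponent(typeof(AudioSource))]
public class AmbientMachineTuning : MonoBehaviour
{
    [Range(0f, 5f)]
    [Tooltip("0 disables pitch shift; higher values exaggerate it")]
    public float dopplerLevel = 0f;

    [Range(0f, 360f)]
    [Tooltip("Spread angle in degrees; larger values make the source sound wider")]
    public float spread = 60f;

    [Tooltip("Linear reaches silence at Max Distance; Logarithmic falls off quickly near the source")]
    public AudioRolloffMode rolloffMode = AudioRolloffMode.Linear;

    void Start()
    {
        AudioSource source = GetComponent<AudioSource>();
        source.dopplerLevel = dopplerLevel;
        source.spread = spread;
        source.rolloffMode = rolloffMode;
    }
}
```

A `dopplerLevel` of `0` is a common comfort choice for stationary machines in VR, since head movement alone would otherwise shift the pitch. Treat the values here as starting points to tune by ear.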

- Add `Audio Reverb Zone` for Factory Acoustics (Optional):

- In the `Hierarchy`, right-click and choose `Audio > Audio Reverb Zone`.

- Position the zone to fully encompass the desired area (e.g., manufacturing station).

- Go to the `Inspector`.

- Set `Min Distance` to `5` meters. Inside this range, full reverb is applied.

- Set `Max Distance` to `15` meters. Between the min and max distances the reverb fades gradually; beyond the max distance, no reverb is applied.

- Set `Reverb Preset` to a preset such as `Hangar` or `Room` to simulate realistic industrial acoustics.

Reverb zones only affect 3D audio sources within their area. As the user moves in or out of the zone, sounds dynamically gain or lose reverb — enhancing the spatial feel of different factory zones.
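If you prefer to keep these values in a script (for example, to reuse the same acoustics across several stations), the zone can be configured in code. This is a minimal sketch: the class name `FactoryReverbSetup` is an assumption. `AudioReverbZone.minDistance`, `maxDistance`, and `reverbPreset` are Unity API members, and `AudioReverbPreset.Hangar` is one of the built-in presets.

```csharp
using UnityEngine;

// Sketch: applies the reverb settings from the steps above to an AudioReverbZone.
// The class name is hypothetical; attach it to the reverb zone GameObject.
[RequireComponent(typeof(AudioReverbZone))]
public class FactoryReverbSetup : MonoBehaviour
{
    [Tooltip("Full reverb is applied inside this radius (meters)")]
    public float minDistance = 5f;

    [Tooltip("No reverb is applied beyond this radius (meters)")]
    public float maxDistance = 15f;

    [Tooltip("Built-in preset approximating a large industrial space")]
    public AudioReverbPreset preset = AudioReverbPreset.Hangar;

    void Awake()
    {
        AudioReverbZone zone = GetComponent<AudioReverbZone>();
        zone.minDistance = minDistance;
        zone.maxDistance = maxDistance;
        zone.reverbPreset = preset;
    }
}
```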

Proximity-Triggered Audio

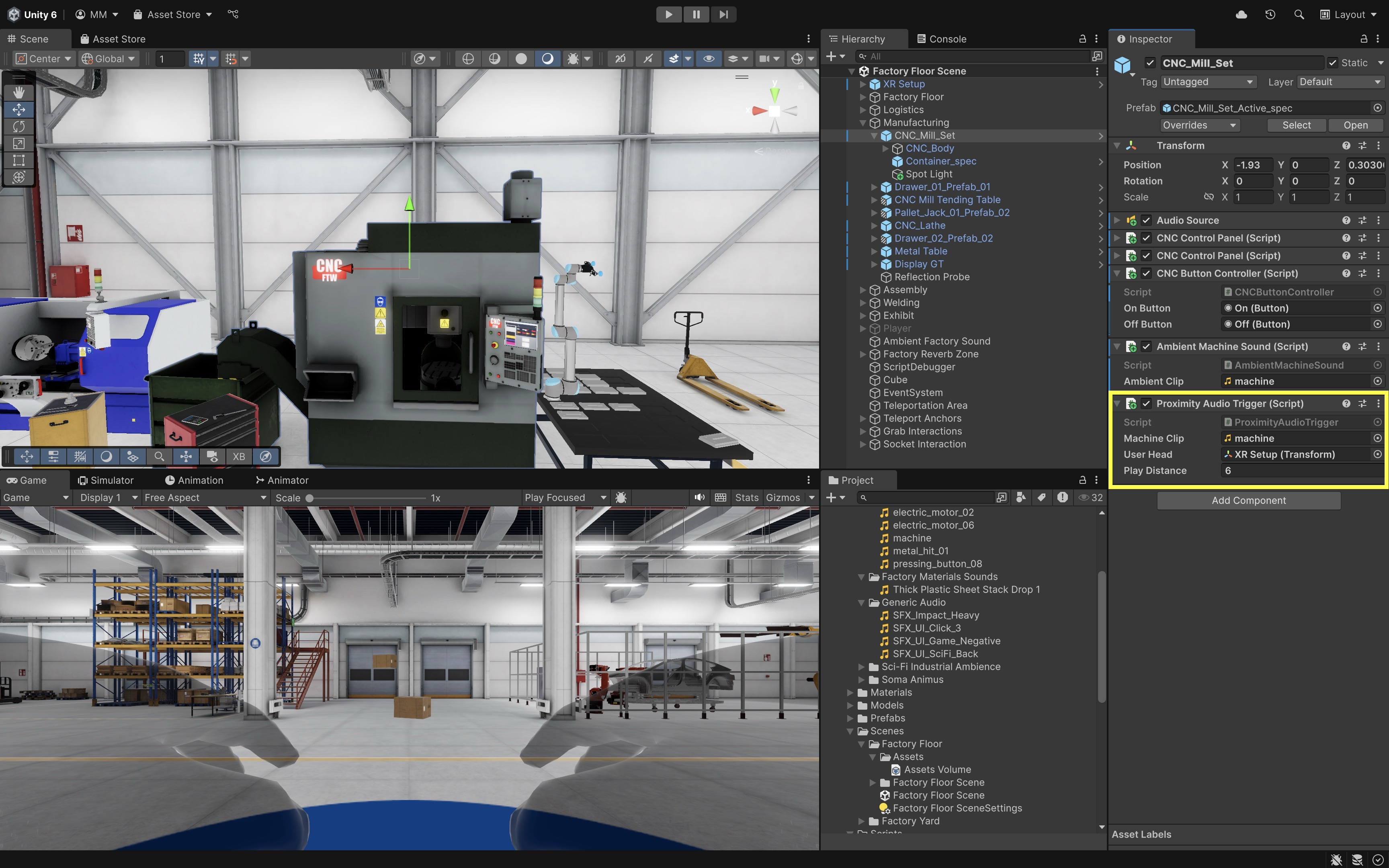

A Proximity-Triggered Audio setup plays sound only when the user is within a defined range of a sound-emitting object. This improves realism and performance by avoiding unnecessary audio playback in distant or inactive areas. Instead of always playing machine audio, let’s make the CNC_Mill_Set emit sound only when the user approaches, mimicking a real-world scenario where machine noise becomes prominent only when nearby.

- Add and Configure the Audio Source:

- Select the `CNC_Mill_Set` GameObject in the manufacturing station.

- Add an `Audio Source` component if it doesn’t already exist.

- Go to the `Inspector`.

- `Audio Clip`: Assign a mechanical loop (e.g., `machine.ogg`).

- `Loop`: Enabled.

- `Play On Awake`: Disabled (sound will be triggered via script).

- `Spatial Blend`: `1` (Fully 3D).

- `Min Distance`: `3` (full volume within 3m).

- `Max Distance`: `20` (fades out gradually).

- Add and Configure the Proximity Trigger Script:

- Prepare a script that checks the distance between the user’s headset (camera) and the CNC machine. If within a specified radius, the sound plays. If the user walks away, the sound stops.

```csharp
using UnityEngine;

/// <summary>
/// Plays machine sound when the user is near the CNC_Mill_Set object.
/// </summary>
[RequireComponent(typeof(AudioSource))]
public class ProximityAudioTrigger : MonoBehaviour
{
    public AudioClip machineClip;
    public Transform userHead;
    public float playDistance = 6f;

    private AudioSource audioSource;

    void Start()
    {
        audioSource = GetComponent<AudioSource>();
        audioSource.clip = machineClip;
        audioSource.loop = true;
        audioSource.spatialBlend = 1f;
        // Playback is controlled by proximity; keep Play On Awake disabled in the Inspector.
    }

    void Update()
    {
        if (userHead == null) return;

        // Distance from the headset (camera) to this machine.
        float distance = Vector3.Distance(userHead.position, transform.position);

        if (distance < playDistance && !audioSource.isPlaying)
        {
            audioSource.Play();
        }
        else if (distance >= playDistance && audioSource.isPlaying)
        {
            audioSource.Stop();
        }
    }
}
```

- Configure the Script:

- Attach the `ProximityAudioTrigger` script to the same `CNC_Mill_Set` GameObject.

- Go to the `Inspector`.

- Drag your audio clip (e.g., `machine.ogg`) into the `Machine Clip` field.

- Drag the `Main Camera` (`XR Rig > Camera Offset > Main Camera`) into the `User Head` field.

- Adjust the `Play Distance` value to control when the sound activates (e.g., `6` meters for a moderate range).

- Play and Test:

- Enter Play Mode in Unity with your VR headset connected.

- Walk toward the CNC machine and listen for the audio to start once you are within `Play Distance`, then grow louder as you approach.

- Move away and notice the audio getting quieter until it stops once you leave the `Play Distance` radius.

- Try different approach angles to confirm the sound spatialization feels realistic.

- Adjust `Play Distance`, `Min Distance`, and `Max Distance` in the `Inspector` if the audio triggers too soon, too late, or feels unnatural.

Proximity-triggered audio limits active audio sources in large scenes, reducing processing load. It also mimics how object sounds become audible only when the user is nearby and enhances spatial awareness by tying audio to physical proximity.
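Because the trigger script starts and stops playback at a single threshold, the sound can cut in abruptly or flicker when the user hovers near the boundary. One way to soften this, sketched below under assumptions, is to fade the volume and use a slightly larger stop radius. The class name `ProximityAudioFader` and the fields `stopDistance`, `fadeSeconds`, and `maxVolume` are illustrative and not part of the original project. The clip is assumed to be assigned on the `Audio Source` in the `Inspector`.

```csharp
using UnityEngine;

// Sketch: proximity-triggered audio with a volume fade and a hysteresis band.
// Names and default values are assumptions for illustration.
[RequireComponent(typeof(AudioSource))]
public class ProximityAudioFader : MonoBehaviour
{
    public Transform userHead;
    public float playDistance = 6f;   // start fading in inside this radius
    public float stopDistance = 7f;   // start fading out beyond this radius
    public float fadeSeconds = 0.5f;  // time for a full fade in or out
    [Range(0f, 1f)] public float maxVolume = 1f;

    private AudioSource audioSource;
    private bool userIsNear;

    void Start()
    {
        audioSource = GetComponent<AudioSource>();
        audioSource.loop = true;
        audioSource.spatialBlend = 1f;
        audioSource.volume = 0f; // begin silent; Update fades in when needed
    }

    void Update()
    {
        if (userHead == null || audioSource.clip == null) return;

        // Two thresholds prevent rapid toggling when standing on the boundary.
        float distance = Vector3.Distance(userHead.position, transform.position);
        if (distance < playDistance) userIsNear = true;
        else if (distance > stopDistance) userIsNear = false;

        // Move the volume toward its target at a constant rate.
        float target = userIsNear ? maxVolume : 0f;
        float step = maxVolume * Time.deltaTime / Mathf.Max(fadeSeconds, 0.01f);
        audioSource.volume = Mathf.MoveTowards(audioSource.volume, target, step);

        if (userIsNear && !audioSource.isPlaying)
        {
            audioSource.Play();
        }
        else if (!userIsNear && audioSource.isPlaying && audioSource.volume <= 0f)
        {
            audioSource.Stop(); // fully faded out, so release the voice
        }
    }
}
```

The scripted fade multiplies with the `Audio Source` distance attenuation, so the machine still gets louder as the user walks closer after the fade-in completes.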

Key Takeaways

Integrating touch and sound in VR transforms interaction from a visual process into a multisensory experience that strengthens realism, awareness, and precision. In engineering simulations like XFactory, synchronized haptic and audio cues—ranging from fingertip vibrations and controller pulses to spatial and proximity-based machine sounds—provide intuitive, context-rich feedback that enhances safety and engagement. Effective design balances consistency, proportional intensity, and timing across senses while allowing user comfort and adaptability. By layering event-driven haptics, spatial audio, and tactile gloves within responsive, context-aware systems, developers can create immersive VR environments that feel authentic, support efficient task performance, and mirror the dynamics of real-world engineering operations.