D2. Navigating in VR

Learning Outcomes

- Apply VR coordinate systems. Practice Global vs. Local gizmos, test Unity’s left-handed axes, and compare world vs. local transforms. Review personal space zones to trigger cues when proximity boundaries are crossed.

- Set up a VR-ready project. Install XR Plug-in Management with OpenXR, add the XR Interaction Toolkit, configure Interaction Profiles, and verify input settings.

- Implement locomotion. Configure the XR Rig with a Locomotion Mediator and XR Body Transformer; test teleportation, snap turn, continuous turn, and continuous move—ensuring only one is active at a time.

- Optimize for performance. Target 90 FPS, keep draw calls under ~175 and triangles under ~500k, batch static objects, reduce poly/texture costs, limit effects, and use MSAA/FXAA for clarity.

Spatial Awareness

Spatial awareness in VR refers to the user’s ability to perceive and understand the positions, orientations, and relationships of objects—and of themselves—within a 3D virtual environment. This awareness is driven by multisensory input, including visual, auditory, and haptic cues, and is enhanced by technologies such as head tracking, hand/controller tracking, spatialized sound, and real-time feedback. To support this immersive perception, VR environments rely on a set of coordinate systems—including world, local, and camera-relative frames of reference—that define how objects are positioned, moved, and interacted with. Understanding these coordinate systems is fundamental to designing VR experiences that feel spatially coherent, responsive, and believable.

Coordinate Systems in VR

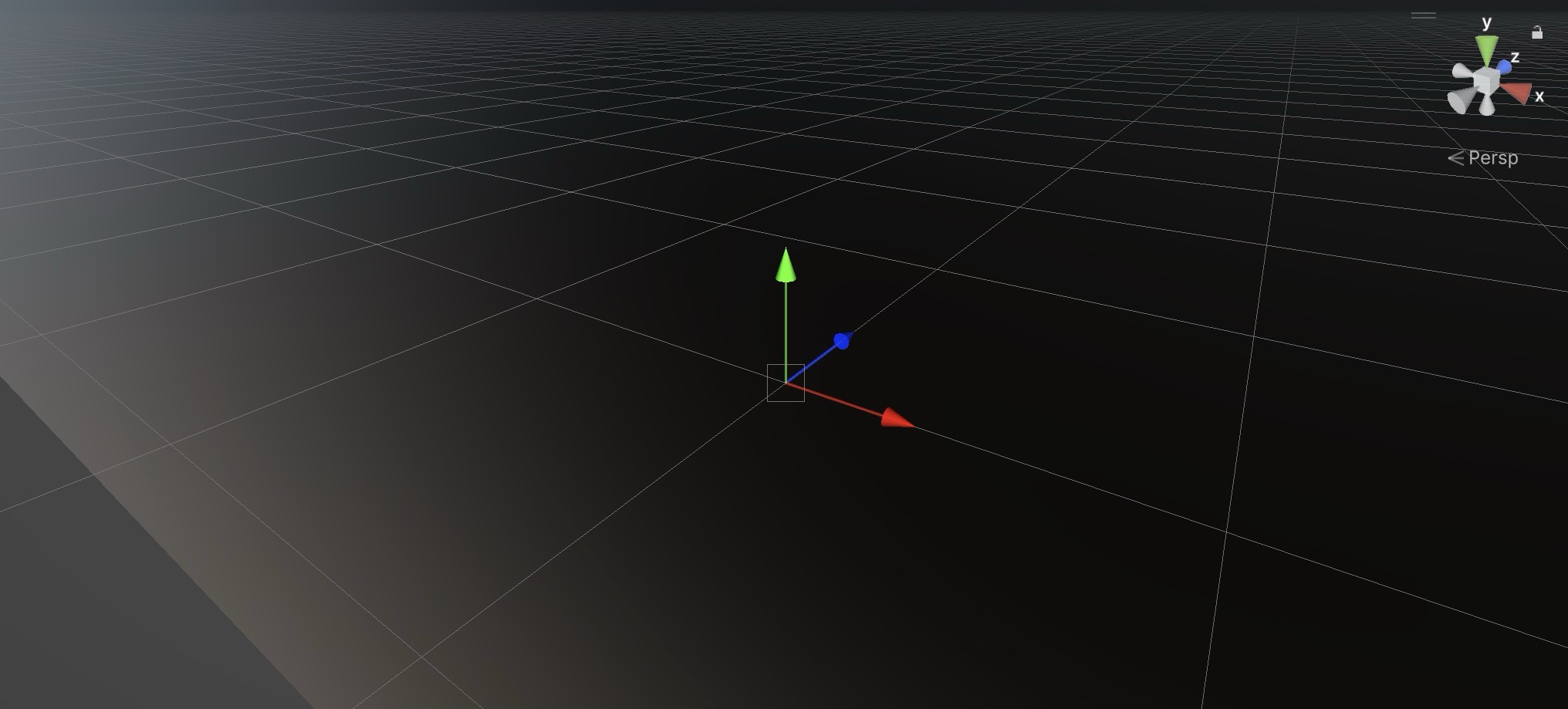

In VR development, coordinate systems provide the framework for positioning, orienting, and scaling every element in your virtual world. By mastering the interplay between world, local, and view coordinate systems, and understanding how Unity’s left-handed system operates, you can ensure that every object is placed accurately and keeps its intended spatial relationships as the user moves. Accurate positioning and orientation help the user feel grounded within a believable, interactive space, while careful management of coordinate systems minimizes errors like drift or scaling mismatches that can break immersion. Unity uses a left-handed coordinate system for its 3D space. Here’s a breakdown of how the axes are oriented:

- X-axis: Represents horizontal movement, with the positive direction pointing to the right.
- Y-axis: Represents vertical movement, with the positive direction pointing upward.
- Z-axis: Represents depth, with the positive direction pointing forward (into the screen, away from the viewer) in Unity’s left-handed coordinate system.

In a left-handed coordinate system, the axes can be modeled with the left hand: the thumb points along positive X, the index finger along positive Y, and the middle finger along positive Z. The positive Z-axis therefore points away from the viewer. In practice, with a camera at its default orientation (looking along +Z), an object’s Z value increases as it moves farther from the camera. Knowing your system’s handedness helps when importing assets (many modeling tools use right-handed conventions), adjusting lighting, and ensuring proper camera behavior.
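Handedness has a direct consequence for rotations. The following engine-independent Python sketch is an illustration, not Unity code, and its helper names are hypothetical. It applies the standard rotation about the Y-axis in a left-handed, Y-up frame. A positive yaw turns forward (+Z) toward right (+X), which matches what Quaternion.Euler(0, 90, 0) * Vector3.forward returns in Unity.

```python
import math

# Engine-independent sketch (not Unity code) of Unity's axis convention:
# +X = right, +Y = up, +Z = forward (into the screen, away from the viewer).
# rotate_yaw and rounded are hypothetical helper names for illustration.


def rotate_yaw(v, degrees):
    """Rotate vector v = (x, y, z) about the Y-axis in a left-handed, Y-up frame.

    A positive angle turns +Z (forward) toward +X (right), i.e. clockwise
    when viewed from above.
    """
    x, y, z = v
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return (x * c + z * s, y, -x * s + z * c)


def rounded(v, ndigits=6):
    """Round away floating-point residue (adding 0.0 turns -0.0 into 0.0)."""
    return tuple(round(a, ndigits) + 0.0 for a in v)


FORWARD = (0.0, 0.0, 1.0)
RIGHT = (1.0, 0.0, 0.0)

print(rounded(rotate_yaw(FORWARD, 90)))   # (1.0, 0.0, 0.0): forward becomes right
print(rounded(rotate_yaw(FORWARD, -90)))  # (-1.0, 0.0, 0.0): forward becomes left
print(rounded(rotate_yaw(RIGHT, 90)))     # (0.0, 0.0, -1.0): right becomes back
```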

World Coordinate System

The world coordinate system is the global reference frame for the entire scene. It defines a fixed, universal orientation across the entire Unity environment. The world origin (0, 0, 0) is typically set near the player’s starting point or a defined central location in the virtual factory. This origin serves as the anchor point from which all objects’ positions, directions, and distances are ultimately measured. The world coordinate system uses a left-handed axis convention in Unity, where:

- Positive X points right
- Positive Y points up
- Positive Z points forward (into the screen, away from the viewer)

These fixed world directions remain constant, regardless of an object’s orientation or parent-child relationships. This system ensures a consistent and shared spatial layout, enabling accurate placement, navigation, and alignment of objects throughout the scene.

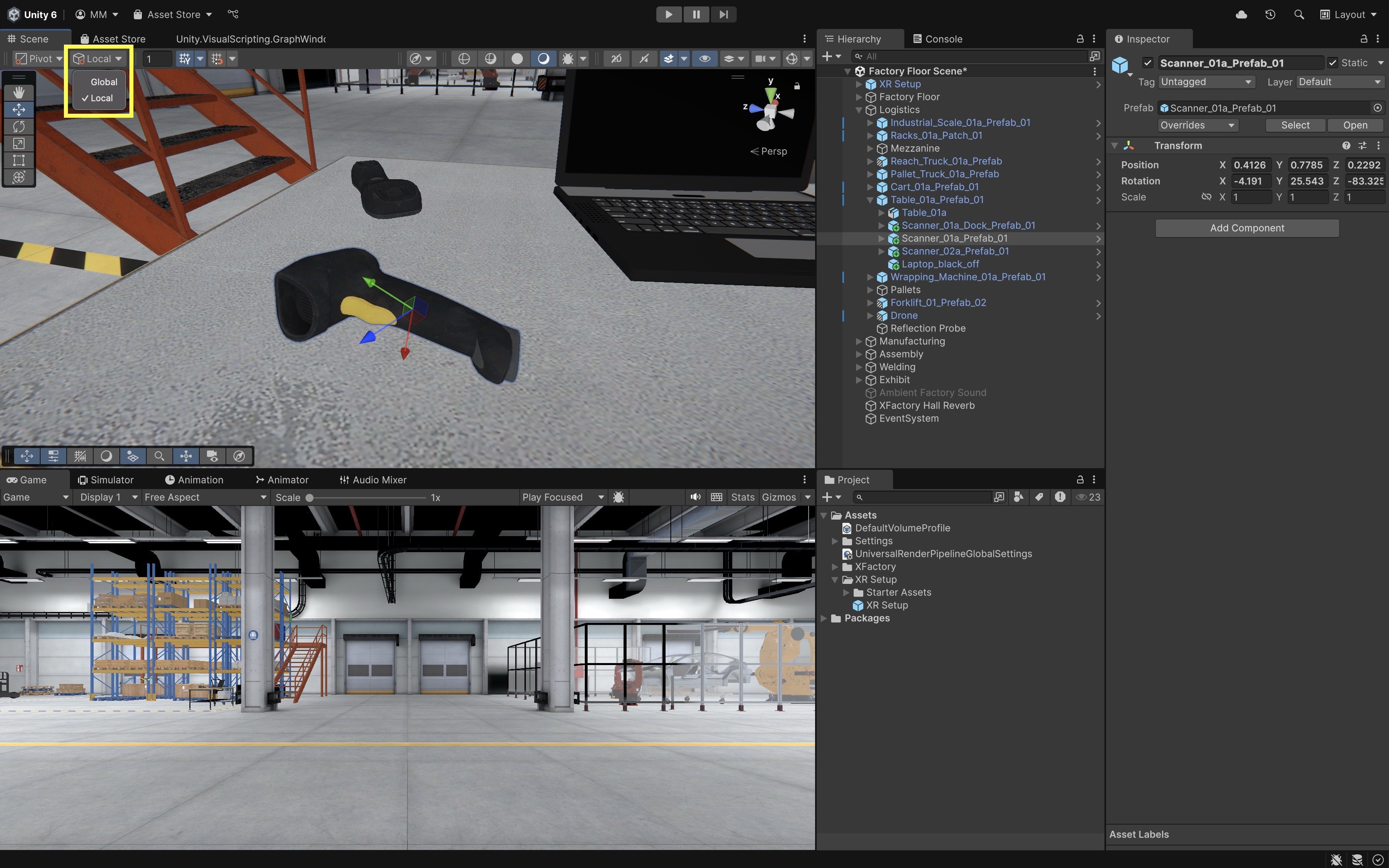

In the logistics station, select a drone or scanner. Toggle between Global and Local handle modes in the Unity toolbar, then move the object using the Move tool (W). In Global mode, the arrows follow the fixed world axes (X: red, Y: green, Z: blue), while in Local mode, they align to the object’s current rotation. This clearly shows how world and local directions differ.

Local Coordinate System

Every GameObject in Unity also has a local coordinate system—its own frame of reference that travels and rotates with it. This system defines the object’s orientation and position relative to itself, rather than the world. Local axes are especially important when dealing with hierarchical relationships, such as child objects attached to a parent, or when animating complex, articulated mechanisms. In local space:

- The X-axis represents the object’s right direction
- The Y-axis represents the object’s up direction
- The Z-axis represents the object’s forward direction

These directions are influenced by the object’s rotation and are used in calculations like local movement, local rotation, and parenting. This makes local coordinates essential for precise control of movement and orientation, particularly in robotic arms, vehicles, characters, and modular machines.
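The following simplified Python model shows how local axes map into world space. It is not Unity's Transform API. The transform has a position, a rotation about Y only, and a uniform scale. Its transform_point and transform_direction methods (hypothetical names) mimic what Unity's Transform.TransformPoint and Transform.TransformDirection do in this restricted case: scale, then rotate, then translate. Full Unity transforms use quaternions, per-axis scale, and parent chains, which this sketch deliberately leaves out.

```python
import math


class YawTransform:
    """Hypothetical, simplified transform: world position, rotation about Y only,
    and uniform scale. Illustration only, not Unity's Transform class."""

    def __init__(self, position, yaw_degrees, scale=1.0):
        self.position = position
        self.yaw = math.radians(yaw_degrees)
        self.scale = scale

    def transform_direction(self, d):
        """Local direction -> world direction (rotation only; ignores scale and position)."""
        x, y, z = d
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        return (x * c + z * s, y, -x * s + z * c)

    def transform_point(self, p):
        """Local point -> world point: scale, then rotate, then translate."""
        scaled = tuple(a * self.scale for a in p)
        rx, ry, rz = self.transform_direction(scaled)
        px, py, pz = self.position
        return (px + rx, py + ry, pz + rz)

    # The object's local axes expressed in world coordinates.
    def right(self):
        return self.transform_direction((1.0, 0.0, 0.0))

    def up(self):
        return self.transform_direction((0.0, 1.0, 0.0))

    def forward(self):
        return self.transform_direction((0.0, 0.0, 1.0))


def rounded(v, ndigits=6):
    """Round away floating-point residue (adding 0.0 turns -0.0 into 0.0)."""
    return tuple(round(a, ndigits) + 0.0 for a in v)


# A machine at (10, 0, 5), turned 90 degrees to the right.
machine = YawTransform(position=(10.0, 0.0, 5.0), yaw_degrees=90)
print(rounded(machine.forward()))                         # (1.0, 0.0, 0.0)
# A part mounted 2 units in front of the machine, in the machine's local space.
print(rounded(machine.transform_point((0.0, 0.0, 2.0))))  # (12.0, 0.0, 5.0)
```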

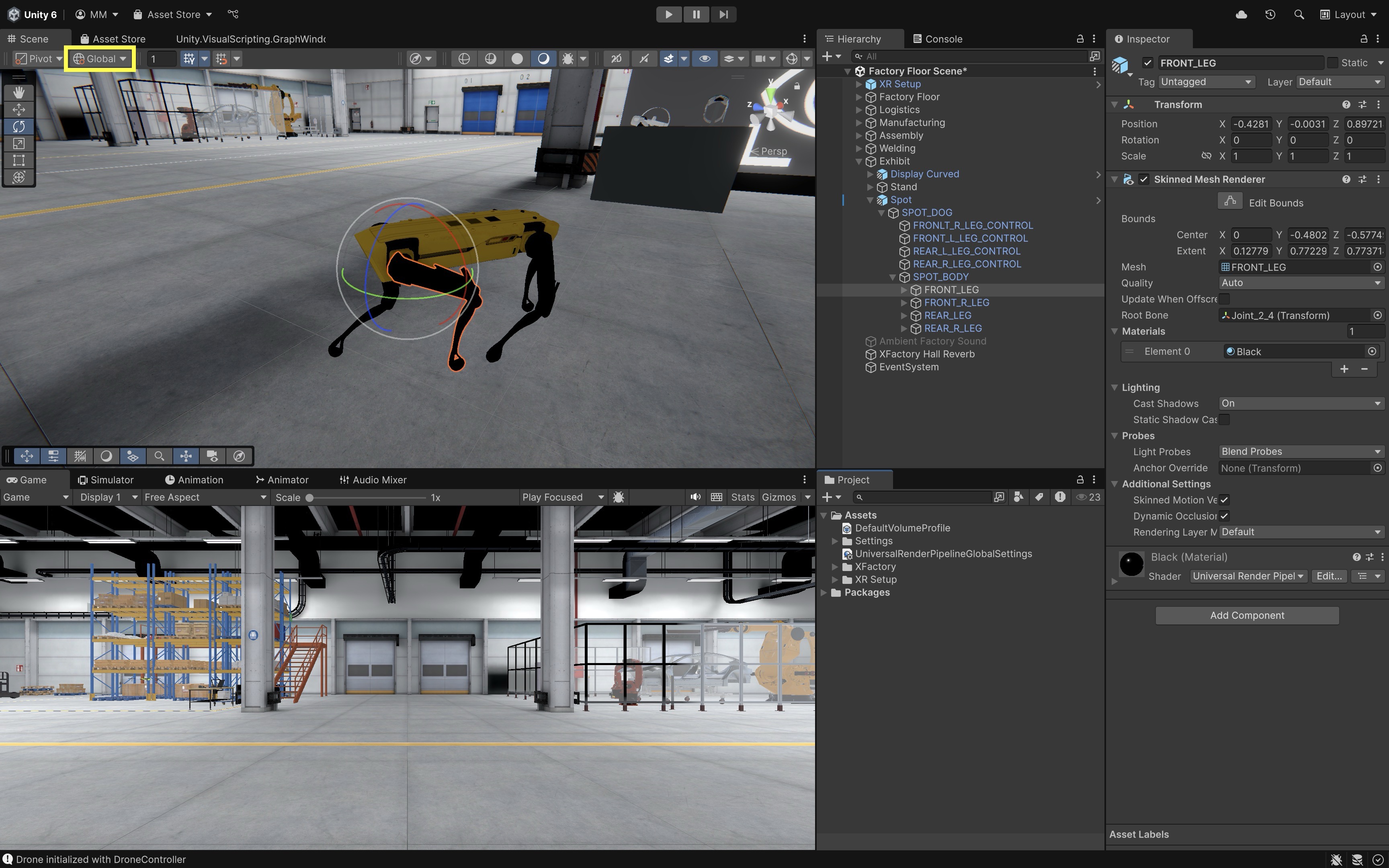

In the exhibit station, select one of the leg joints on the quadruped robot (Spot). Use the Rotate tool (E) and toggle between Local and Global modes. When you rotate the joint in Local mode, the rotation follows the natural direction of the joint, consistent with the leg’s intended movement. In Global mode, the gizmo remains aligned with the world axes, making the rotation feel off or unnatural for articulated motion.

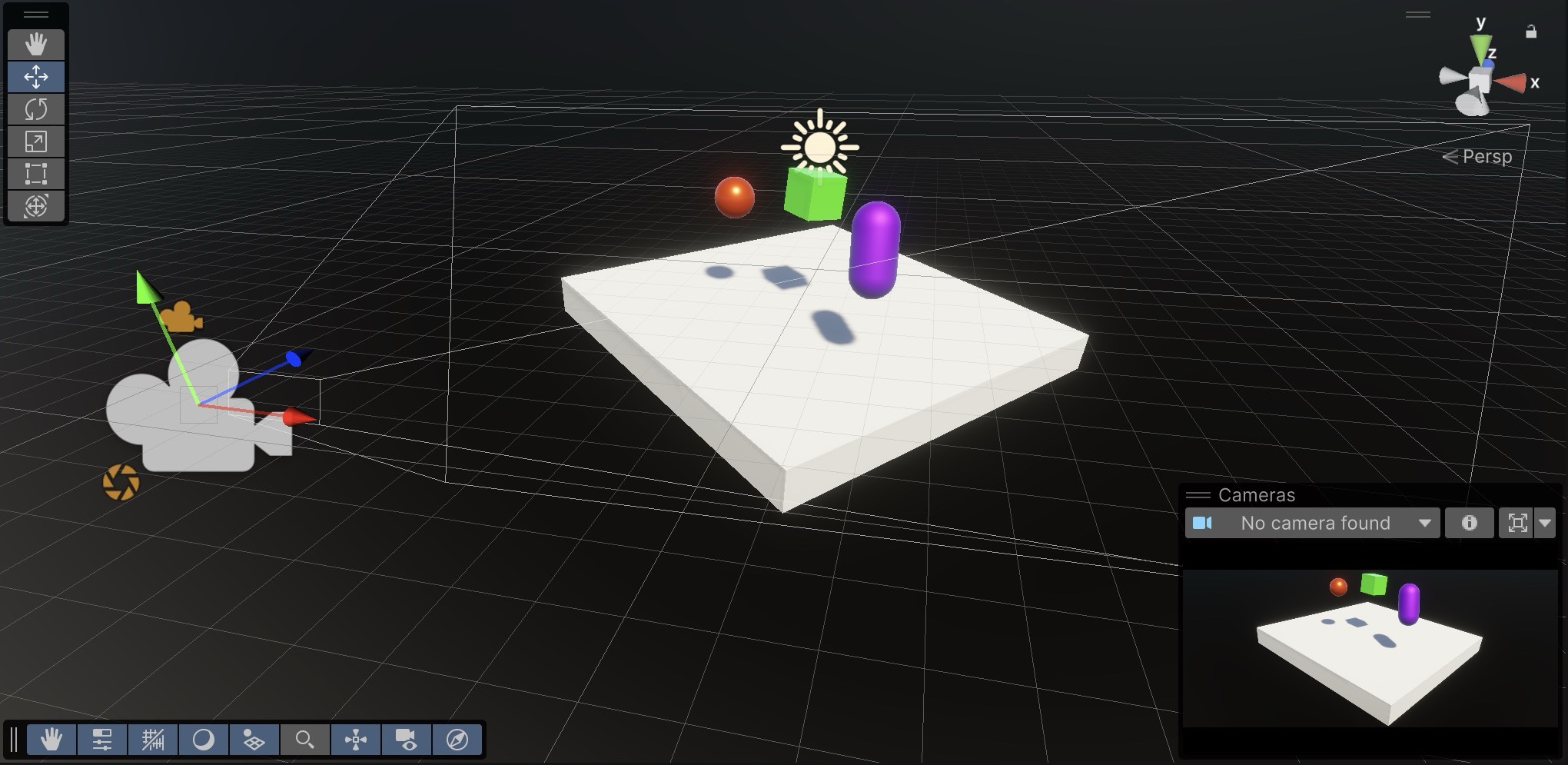

Camera Coordinate System

The camera (or headset) coordinate system is dynamic—it updates in real time with the user’s head position and orientation. In XR, the camera becomes the user’s point of view, serving as the basis for rendering the scene and positioning UI or interactive elements. Key spatial behaviors and scenarios include:

- World-Locked Content: Objects are fixed in the environment and do not follow the user (i.e., the camera). Ideal for props, machines, landmarks, or spatial UI that needs to stay consistent as the user moves around. For example, the CNC machines in the manufacturing station remain anchored regardless of user movement.

- Head-Locked (View-Fixed) Content: Objects move with the user’s head, maintaining a fixed offset in view space. Common for HUDs, notifications, or tooltips. Use sparingly to avoid motion sickness or visual overload.

- Seated Experiences: The user is expected to stay seated. The world origin is usually aligned with the user’s initial head position, making camera-relative offsets predictable in limited physical space. This is suitable for simulations or control panels where physical movement is minimal.

- Standing Experiences: The user stands and may walk or move within a physical play area. The world origin is aligned with the floor plane, and content should be positioned with physical scale and posture in mind. This is useful for interactive demos or exploring full-scale scenes like XFactory’s exhibit zone.

- Hybrid Experiences: Some setups blend seated and standing interactions; for example, a user may stand to explore content but sit to operate a virtual machine. In XFactory’s exhibit station, users may stand to explore AR/VR displays but sit for testing simulations, requiring flexible origin handling.
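The difference between world-locked and head-locked content comes down to which frame an offset is expressed in. The Python sketch below is a simplified model that assumes a yaw-only head rotation. In Unity, head-locked content is commonly achieved by parenting the object to the Main Camera, and the hypothetical head_locked_position function performs the equivalent math for this case. All positions and offsets are illustrative values.

```python
import math

# Simplified, yaw-only model; function names are hypothetical.


def rotate_yaw(v, degrees):
    """Rotate (x, y, z) about Y in Unity's left-handed, Y-up convention."""
    x, y, z = v
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return (x * c + z * s, y, -x * s + z * c)


def head_locked_position(head_pos, head_yaw, offset):
    """World position of content held at a fixed offset in the head's frame."""
    ox, oy, oz = rotate_yaw(offset, head_yaw)
    hx, hy, hz = head_pos
    return (hx + ox, hy + oy, hz + oz)


# Illustrative values only.
CNC_POSITION = (3.0, 0.0, 8.0)   # world-locked: the same no matter where the user is
HUD_OFFSET = (0.0, -0.2, 1.5)    # head-locked: 1.5 m ahead, 0.2 m below eye level

for head_pos, head_yaw in [((0.0, 1.6, 0.0), 0.0), ((2.0, 1.6, 1.0), 90.0)]:
    hud = head_locked_position(head_pos, head_yaw, HUD_OFFSET)
    print("CNC:", CNC_POSITION, "HUD:", tuple(round(a, 3) for a in hud))
```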

Drift is the gradual accumulation of small errors in the system’s estimate of position over time. Techniques such as periodic re-centering of the world origin help minimize its effects, and regular calibration helps long-duration VR sessions maintain positional accuracy and avoid disorientation.
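Geometrically, re-centering yaw and horizontal position can be pictured as a correction transform. It moves the user's current head pose back over the origin, facing +Z, while preserving height so the floor stays at the same level. The Python sketch below only illustrates that geometry under a yaw-only assumption. make_recenter is a hypothetical helper and does not reflect how any particular XR runtime implements re-centering.

```python
import math

# Geometry-only sketch; make_recenter is a hypothetical helper.


def rotate_yaw(v, degrees):
    """Rotate (x, y, z) about Y in Unity's left-handed, Y-up convention."""
    x, y, z = v
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return (x * c + z * s, y, -x * s + z * c)


def make_recenter(head_pos, head_yaw):
    """Return a mapping from tracking space into a re-centered frame.

    After the mapping, the current head position sits directly above the
    origin and the current head yaw points along +Z. Height is preserved.
    """
    hx, _, hz = head_pos

    def recenter(point):
        x, y, z = point
        # Remove the horizontal offset, then undo the head's yaw.
        return rotate_yaw((x - hx, y, z - hz), -head_yaw)

    return recenter


recenter = make_recenter(head_pos=(1.0, 1.7, 2.0), head_yaw=90.0)
print(tuple(round(a, 6) + 0.0 for a in recenter((1.0, 1.7, 2.0))))  # (0.0, 1.7, 0.0)
print(tuple(round(a, 6) + 0.0 for a in recenter((2.0, 1.7, 2.0))))  # (0.0, 1.7, 1.0)
```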

Proxemics in VR

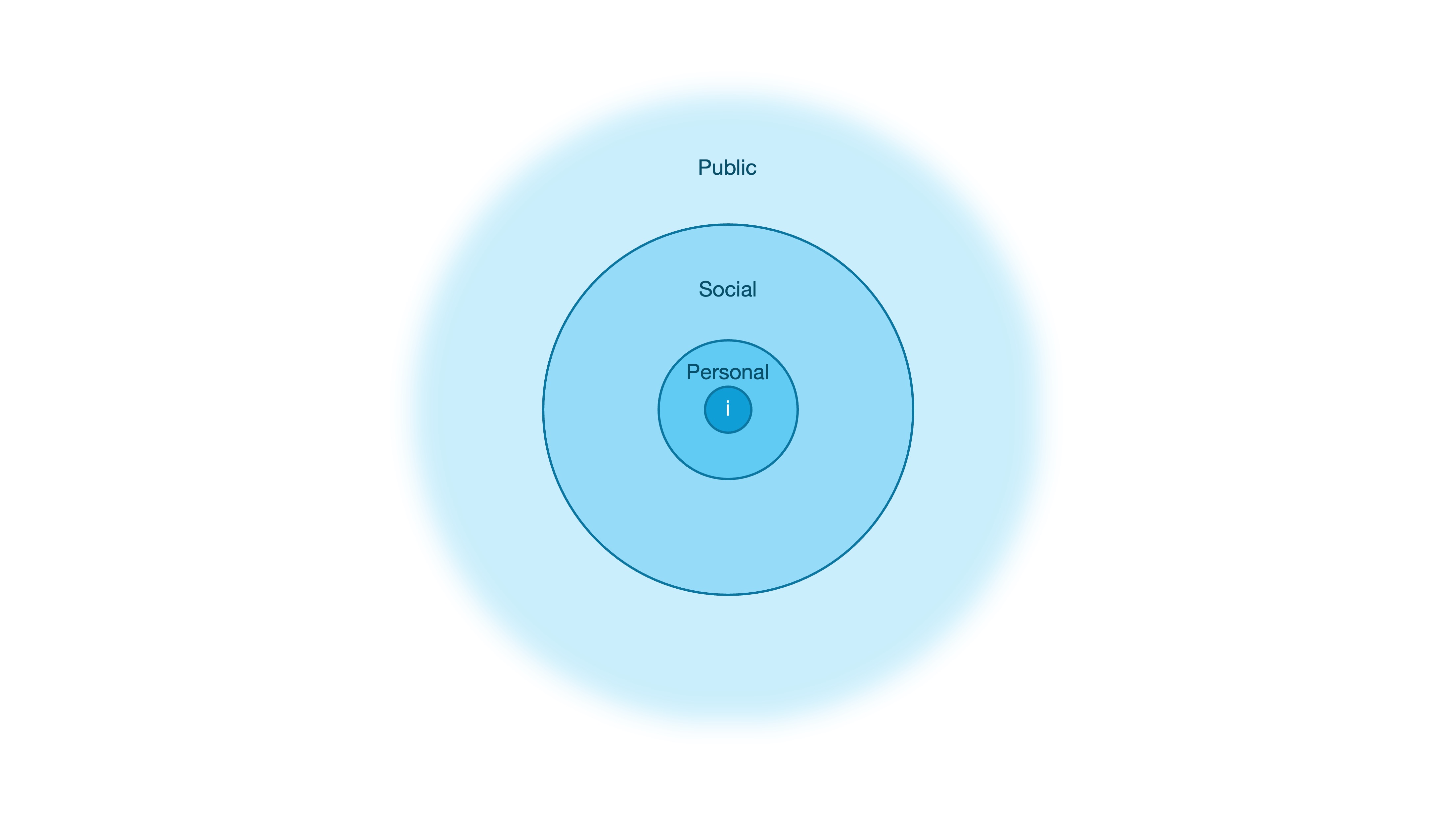

While coordinate systems define the logical structure of space, proxemics introduces the human dimension—how people perceive and react to spatial proximity. Proxemics is the study of personal space and social distance, and in virtual reality, it plays a crucial role in shaping interactions that feel natural, respectful, and emotionally comfortable. Designers must account not only for functional placement but also for the psychological boundaries users instinctively maintain. Respecting these spatial expectations can significantly enhance immersion and reduce discomfort. The zones of personal space in VR include:

- Intimate Space (0–0.5 m): Reserved for close personal interactions, such as handoffs, high-trust exchanges, or haptic feedback experiences. Avoid placing avatars, tools, or UI elements in this space unless the user has explicitly initiated the interaction.

- Personal Space (0.5–1.5 m): Ideal for one-on-one conversations, small-scale tasks, or interactive UI panels. This is the user’s primary comfort zone and is frequently used for interaction and control, so intrusions should be intentional and minimal.

- Social Space (1.5–4 m): Best suited for group interactions, multiplayer collaboration, or shared environments. Arrange participants or elements to encourage communication while preserving personal boundaries.

- Public Space (4 m+): Appropriate for presentations, lectures, or passive observation. Use this zone for content delivery that doesn’t require direct engagement, such as signage, broadcasts, or performances.

Designing with proxemics in mind ensures that your VR experiences are not only technically accurate but also socially intuitive. Always test interactions at different distances and in varied user postures (e.g., standing, seated, crouching). Pay attention to how users respond to avatar proximity, reachability, and UI positioning. To reduce discomfort and maintain boundaries, consider subtle visual cues like fading, blurring, or scaling of objects that breach a user’s personal space. In XFactory, for example, invisible boundaries or warning overlays are essential around active machinery like CNC mills or robotic arms, balancing safety with immersion.
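One way to drive such cues is to classify the distance between the user's head and an object into the zones listed above, then react when the zone changes. The Python sketch below treats the zone boundaries as half-open, so exactly 0.5 m counts as personal space. It also uses a hypothetical linear fade between 1.5 m and 0.5 m. The function names and the fade range are illustrative design choices, not values from Unity or the XR Interaction Toolkit.

```python
import math

# Hypothetical helpers for illustration; not a Unity or XRI API.
# Zone upper bounds in meters, from the ranges listed above. Boundaries are
# treated as half-open: a distance of exactly 0.5 m counts as personal space.
ZONES = [(0.5, "intimate"), (1.5, "personal"), (4.0, "social")]


def proxemic_zone(distance_m):
    """Classify a head-to-object distance into a proxemic zone."""
    for upper, name in ZONES:
        if distance_m < upper:
            return name
    return "public"


def fade_alpha(distance_m, fade_start=1.5, fade_end=0.5):
    """Hypothetical cue: fully opaque outside personal space, fading linearly
    to fully transparent at the intimate-space boundary."""
    if distance_m >= fade_start:
        return 1.0
    if distance_m <= fade_end:
        return 0.0
    return (distance_m - fade_end) / (fade_start - fade_end)


def zone_crossings(distances):
    """Yield (sample_index, old_zone, new_zone) whenever the zone changes."""
    previous = None
    for i, d in enumerate(distances):
        zone = proxemic_zone(d)
        if previous is not None and zone != previous:
            yield i, previous, zone
        previous = zone


head = (0.0, 1.6, 0.0)
avatar = (0.0, 1.6, 1.0)
d = math.dist(head, avatar)  # 1.0 m
print(proxemic_zone(d), fade_alpha(d))
print(list(zone_crossings([5.0, 3.0, 1.0, 0.4])))
```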

Locomotion in VR

Locomotion is the method by which a user moves through and interacts with a virtual environment. In VR, effective locomotion is critical to creating an immersive, natural, and comfortable experience. Unity’s XR Interaction Toolkit provides a range of locomotion primitives—including teleportation, snap turning, continuous turning, and continuous movement—that can be tailored to suit different applications. Key considerations in designing VR locomotion include:

- User Comfort and Safety: Design locomotion to minimize motion sickness and disorientation. Use techniques like snap turning and teleportation for rapid reorientation and repositioning, and consider ergonomic factors for extended VR sessions. Ensure that only one locomotion method is active at a time via a centralized system to prevent conflicting commands.

- Centralized Input Handling and Interaction Safety: Favor action-based input over device-based input to simplify control mapping across diverse VR hardware. A centralized locomotion system not only prevents simultaneous actions but also provides a consistent, predictable user experience by resolving input conflicts.

- Coordinate Space Management and Transformations: Precisely manage conversions between device, local, and world coordinate spaces. Accurate transformation is essential for maintaining spatial consistency and immersion, and for ensuring that physical movements are correctly mirrored in the virtual environment.

- Modularity, Scalability, and Flexibility in Movement: Build locomotion systems with modularity in mind by using simple placeholder assets that can be upgraded as needed. This approach supports scalability and lets developers tailor locomotion behavior, whether rapid teleportation or immersive continuous movement, to the specific needs of different VR applications.

In XFactory, users can explore the factory floor to observe different stations. Continuous movement lets them walk beside a moving forklift, while teleportation allows them to quickly reposition near a drone landing pad or machinery entrance. Snap turning allows the user to interact with screens or robots placed in a circular formation without physical turning.

XR Origin and Teleportation

The XR Origin (also called the XR Rig, a component of our XR Setup) is a MonoBehaviour that defines the user’s presence and interaction point in the virtual environment. It encapsulates the tracking origin, camera, and input controllers, and is the primary target manipulated by locomotion systems such as teleportation, continuous movement, climbing, and turning. In the context of teleportation, the XR Origin is crucial because teleportation involves instantaneously repositioning the user within the virtual world. This repositioning is applied to the Rig’s root GameObject, ensuring that all child objects, especially the head (camera) and hands (controllers), move together coherently. Our XR Setup (imported from Unity 6’s Sample VR Scene) has the following structure:

- XR Rig (Root)
  - Camera Offset
    - Main Camera
    - Left Controller
    - Left Controller Teleport Stabilized Origin
    - Right Controller
    - Right Controller Teleport Stabilized Origin
  - Locomotion
    - Turn
    - Move
    - Teleportation
    - Climb
    - Gravity
    - Jump

- XR Setup (Root): The top-level prefab that encapsulates the entire VR player setup. It is the main locomotion target, meaning that when teleportation or other movement occurs, providers (e.g., Teleportation Provider, Action Based Continuous Move Provider) apply position and rotation changes directly here.
- XR Origin: Defines the origin point for XR tracking, ensuring alignment of the player’s position and orientation with the real-world space.
- Character Controller: Provides collision detection and collision-based movement constraints for the player (e.g., preventing walking through walls). It defines capsule height, radius, and step offset for grounded movement.
- Input Action Manager: Enables the input actions in the referenced XRI Default Input Actions asset, which defines actions like movement, interaction, and teleportation across devices.
- XR Input Modality Manager: Manages the player’s current input mode (e.g., controller vs. hand tracking) and ensures the system dynamically switches input handling as needed.

- Camera Offset: An intermediary transform that adjusts the vertical alignment of the headset relative to the play area. It accounts for standing, seated, or room-scale setups.
- Main Camera: Represents the user’s head. Driven by the physical headset, its position and rotation are critical for determining direction and teleportation destinations.
- Left Controller / Right Controller: Represent the physical hand controllers. These often contain an XR Ray Interactor or XR Direct Interactor, enabling pointing, grabbing, and teleport targeting.
- Left Controller Teleport Stabilized Origin / Right Controller Teleport Stabilized Origin: Provide stable reference points for initiating teleport actions, helping reduce jitter or inconsistencies during teleport aiming.

- Locomotion: A collection of locomotion-related providers and behaviors that extend user movement beyond basic head tracking.
  - Turn: Enables snap or smooth turning of the Rig.
  - Move: Provides directional continuous movement controlled by the thumbstick.
  - Teleportation: Handles teleport-based locomotion using controller input.
  - Climb: Adds climbing mechanics for interactable climbable surfaces.
  - Gravity: Applies gravity to the Rig, ensuring grounded movement.
  - Jump: Allows the user to perform jump actions in supported environments.

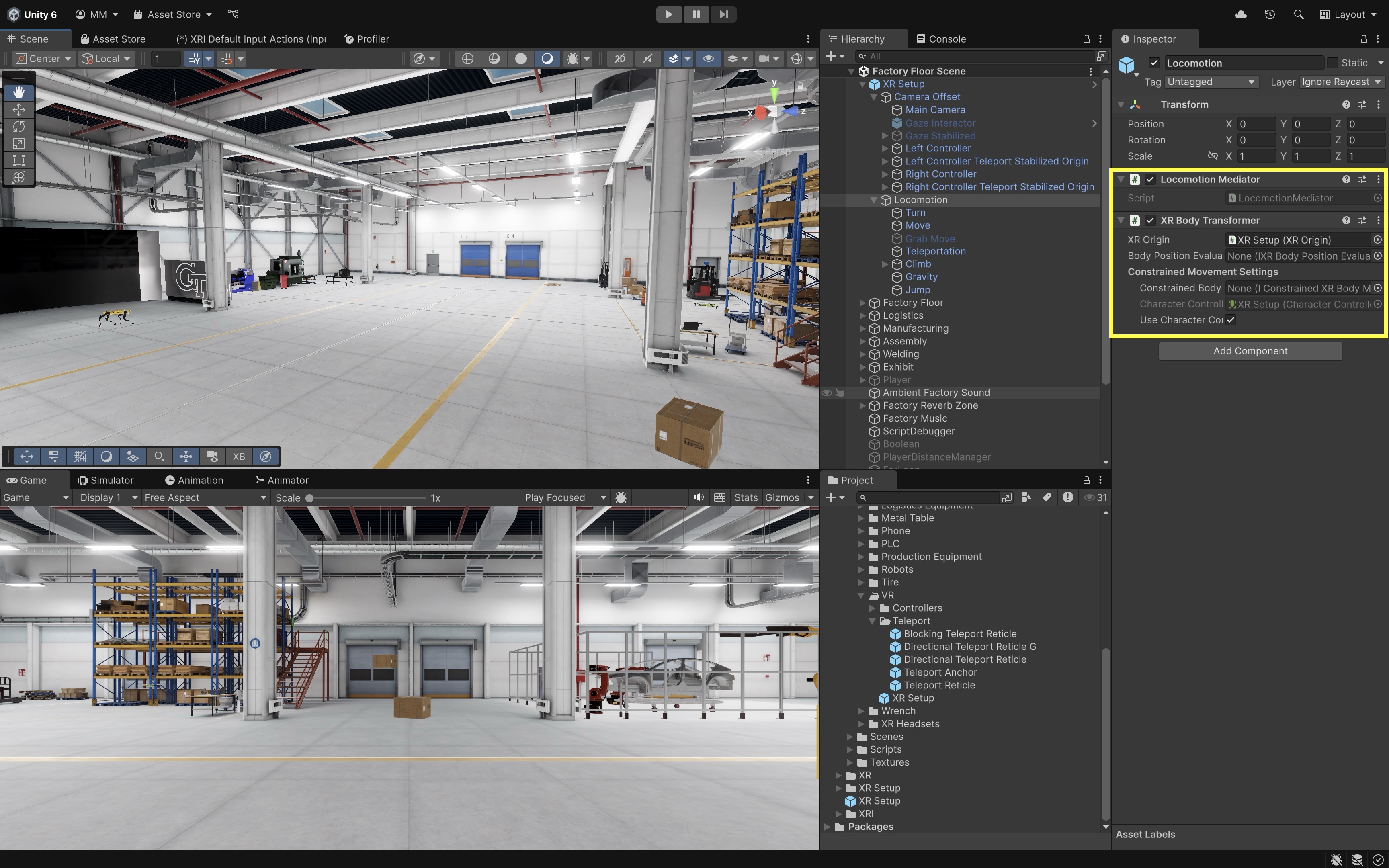

Locomotion Components

Two key components—the Locomotion Mediator and the XR Body Transformer—work together to coordinate input-driven locomotion and apply it safely to the XR Origin.

- Locomotion Mediator: The Locomotion Mediator is a centralized component that coordinates access to the XR Origin for various locomotion methods. It ensures that only one locomotion provider (such as teleportation, snap turn, or continuous movement) is actively controlling the XR Origin at any given time. By mediating between multiple input-driven locomotion requests, much like a traffic light, the Locomotion Mediator maintains consistency, prevents conflicting movement commands, and helps deliver a smooth and predictable user experience. This coordination is critical in complex VR scenarios where multiple interaction modes may be available simultaneously.

- XR Body Transformer: The XR Body Transformer is responsible for applying position and rotation changes to the XR Origin in response to locomotion inputs like teleportation or turning. This component acts as the physical mover: once the Locomotion Mediator grants access to a locomotion provider, the provider delegates movement operations to the XR Body Transformer. This separation of concerns helps keep movement logic modular and extensible. This component must be present for the Locomotion Mediator to function correctly.
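The division of labor between the two components can be modeled in a few lines. The Python sketch below is a conceptual model of the behavior described above. One provider at a time is granted access, and the transformer is the only object that moves the rig. The class and method names (Mediator, try_begin, end, BodyTransformer) are hypothetical and do not reproduce the XR Interaction Toolkit's actual API.

```python
# Conceptual model only; names are hypothetical, not the XRI API.


class BodyTransformer:
    """Hypothetical stand-in for the component that actually moves the rig."""

    def __init__(self):
        self.position = [0.0, 0.0, 0.0]  # x, y, z in world space
        self.yaw = 0.0                    # degrees about the Y-axis

    def translate(self, dx, dz):
        self.position[0] += dx
        self.position[2] += dz

    def rotate(self, degrees):
        self.yaw = (self.yaw + degrees) % 360.0


class Mediator:
    """Grants one provider at a time access to the body transformer."""

    def __init__(self, transformer):
        self._transformer = transformer
        self._owner = None

    def try_begin(self, provider):
        """Return the transformer if access is granted, otherwise None."""
        if self._owner in (None, provider):
            self._owner = provider
            return self._transformer
        return None  # another provider is active, so this request is denied

    def end(self, provider):
        """Release access; ignored if the caller is not the current owner."""
        if self._owner == provider:
            self._owner = None


body = BodyTransformer()
mediator = Mediator(body)

mover = mediator.try_begin("teleport")
mover.translate(3.0, 4.0)
print(mediator.try_begin("snap_turn"))  # None: teleport still holds access
mediator.end("teleport")

mediator.try_begin("snap_turn").rotate(45.0)
mediator.end("snap_turn")
print(body.position, body.yaw)  # [3.0, 0.0, 4.0] 45.0
```

Keeping the only mutating methods on the transformer means every provider goes through the same gate, which is the point of the separation described above.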

Locomotion Providers

These modular components implement specific movement behaviors including teleportation, snap turning, continuous turning, and continuous movement. Each provider extends a common abstract class and is designed to request and relinquish exclusive control over the XR Origin via the Locomotion Mediator. Their modular design allows developers to mix and match movement types based on application needs. Key locomotion providers in XR Interaction Toolkit include Continuous Turn Provider, Continuous Move Provider, Snap Turn Provider, and Teleportation Provider.

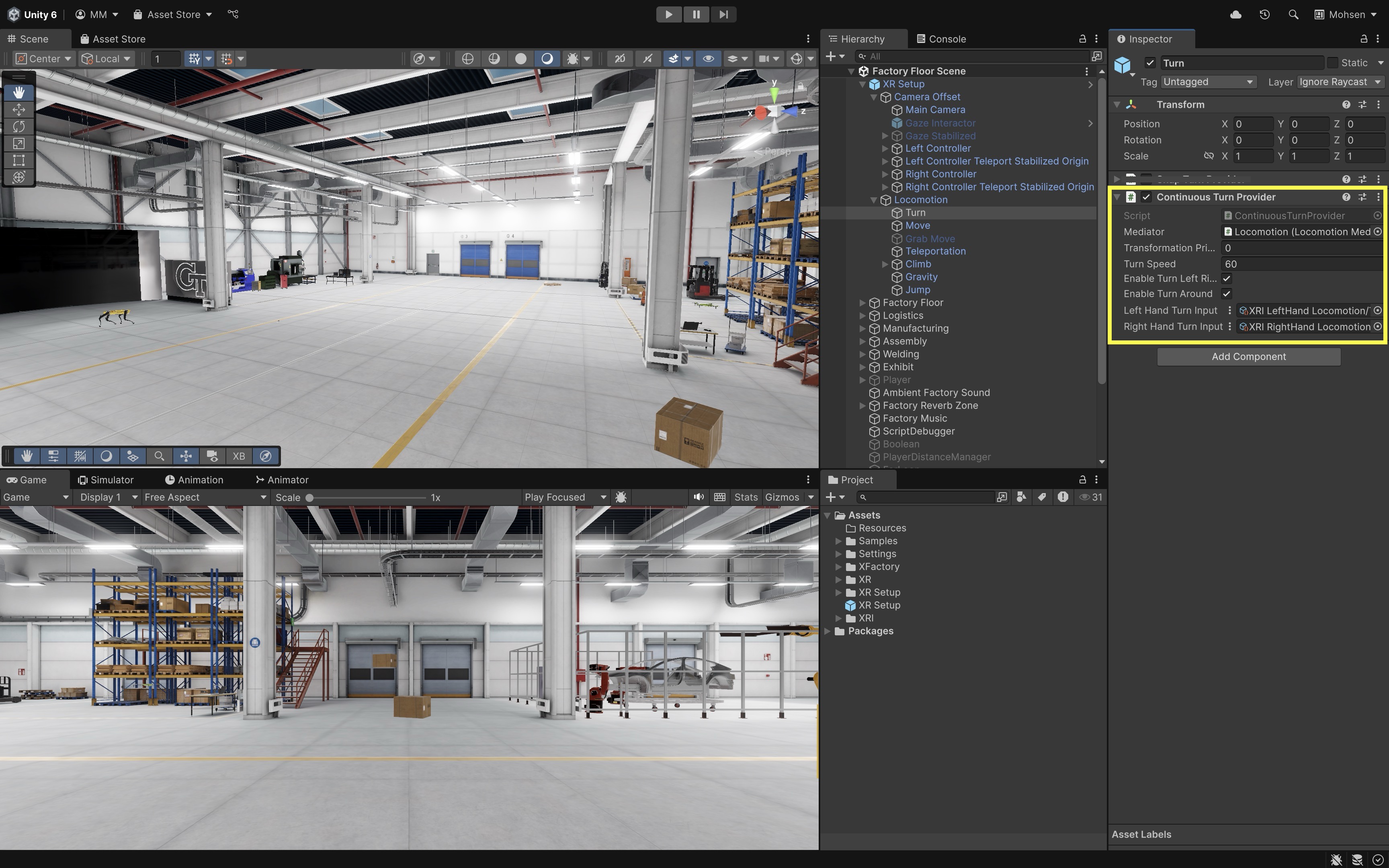

Continuous Turn Provider

The Continuous Turn Provider rotates the XR Origin smoothly over time based on user input. While it offers a fluid rotation experience, it must be carefully calibrated since overly rapid or prolonged turning can cause discomfort or motion sickness. Developers often tie this to the horizontal axis of the right-hand joystick and adjust turn speed to strike a balance between responsiveness and comfort. It is best used in open spaces where users need to orient themselves frequently without relying on teleportation. Follow the instructions below to set it up.

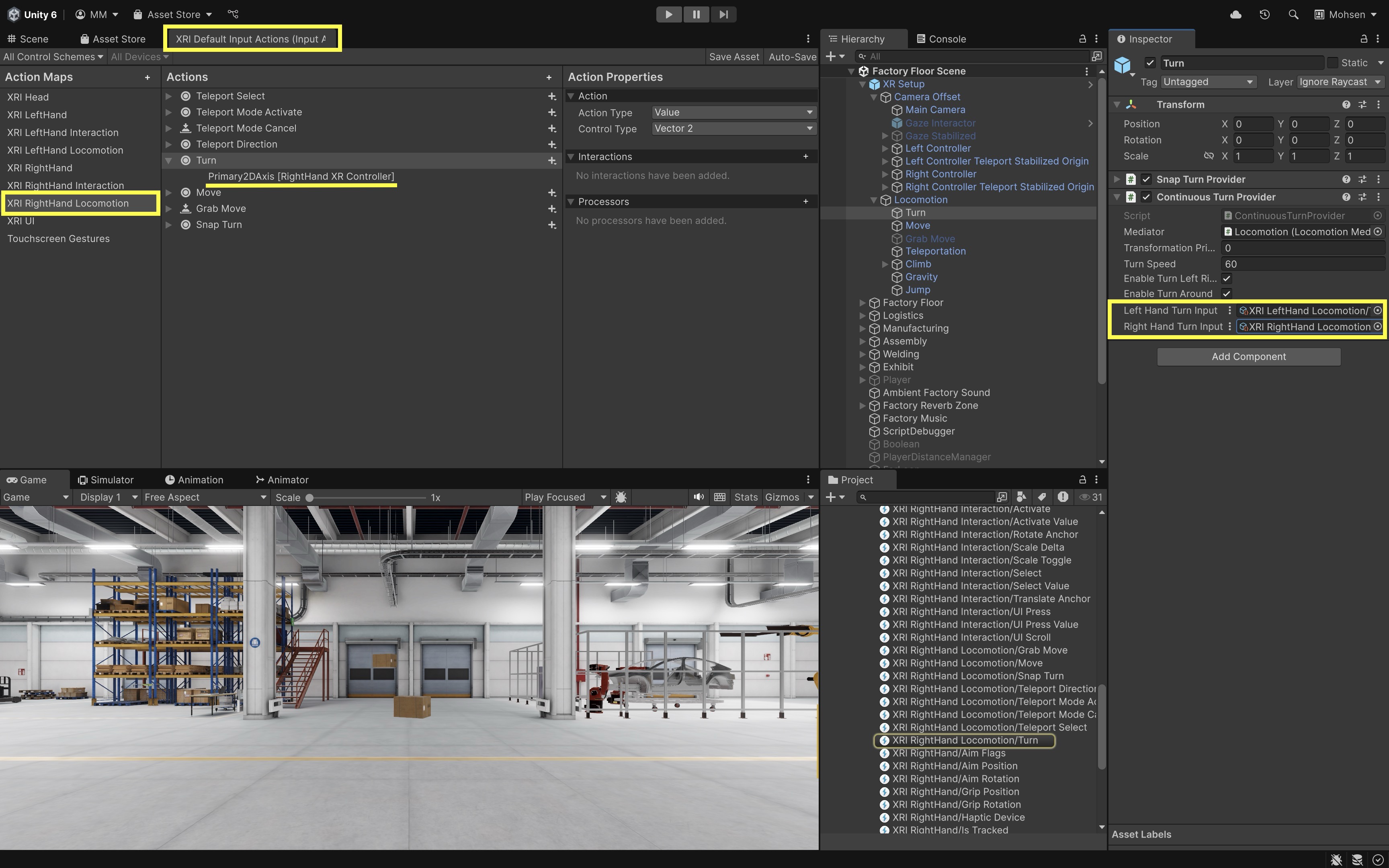

- Add a Continuous Turn Provider:
  - Right-click on the Locomotion GameObject and select Create Empty. Name the empty GameObject Turn.
  - In the Inspector, add the Continuous Turn Provider component to Turn.
  - In the Mediator field, assign the existing Locomotion Mediator component in the scene. This ensures the turning provider cooperates with other movement systems like teleportation or continuous movement.
  - Ensure the XR Body Transformer is also attached to the same GameObject as the XR Origin. It is responsible for applying the rotational changes generated by the turn provider.

- Configure Turning Parameters:
  - Transformation Priority: Set this to 0. It is an integer value that defines the execution order of movement or rotation operations.
  - Turn Speed: Set the speed of rotation in degrees per second (e.g., 60 for a smooth turning feel).
  - Enable Turn Left/Right: Check this box to allow the user to rotate left or right using the assigned input action (typically the thumbstick or trackpad). Disabling it will prevent lateral continuous turning.
  - Enable Turn Around: Check this box to enable a 180° turn input, allowing the user to quickly face the opposite direction. This is useful in fast-paced experiences where reorientation is needed.

- Set Up Input Actions:
  - The Left Hand Turn Input and Right Hand Turn Input fields let you assign float-based input actions for smooth turning from each controller’s joystick.
  - In the Continuous Turn Provider, click the circle (object picker) icon next to the Right Hand Turn Input field.
  - In the search window that appears, type turn and select XRI RightHand Locomotion/Turn from the list.
  - Repeat the above for the Left Hand Turn Input field.

- Run the Scene:
  - On Windows, connect your headset via the Link app and enter Play mode to test directly in the Editor.
  - On Mac, build and deploy the project to your headset using the Android build pipeline, then run it on the device to test.

- Observe and Tune:
  - In the Scene view, watch the XR Origin rotate around its vertical axis (Y-axis) and verify it’s pivoting from the base.
  - Adjust the Turn Speed in the Continuous Turn Provider for more comfortable or responsive rotation.

Use Debug.Log("XR Rig Y-Rotation: " + transform.eulerAngles.y); in the Update method of a script attached to the XR Origin to confirm that smooth turning functions independently of other locomotion methods like movement or teleportation. If rotation feels misaligned, verify the XR Origin’s structure and ensure input actions are assigned correctly.
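Continuous turning is a per-frame integration. The Python sketch below models it under one assumption: each frame, the rig's yaw advances by the stick's horizontal value multiplied by Turn Speed and the frame time. The function names are hypothetical, and the real provider's internals may differ. At the suggested 60 degrees per second, a full 360° turn takes 6 seconds of full stick deflection.

```python
# Hypothetical model of continuous turning; not the XRI implementation.


def continuous_turn(yaw, stick_x, turn_speed, dt):
    """Advance yaw (degrees) by stick_x * turn_speed (deg/s) * dt (s).

    stick_x is the joystick's horizontal value in [-1, 1]: right is positive.
    """
    return (yaw + stick_x * turn_speed * dt) % 360.0


def simulate_turn(stick_x, turn_speed, seconds, fps=90):
    """Hold the stick steady for `seconds` at a fixed frame rate."""
    yaw = 0.0
    dt = 1.0 / fps
    for _ in range(round(seconds * fps)):
        yaw = continuous_turn(yaw, stick_x, turn_speed, dt)
    return yaw


# Full right deflection at 60 deg/s for one second is about 60 degrees.
print(round(simulate_turn(1.0, 60.0, 1.0), 3))
# Half deflection turns half as fast.
print(round(simulate_turn(0.5, 60.0, 1.0), 3))
```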

Snap Turn Provider

The Snap Turn Provider rotates the XR Rig by a fixed angle—such as 45° or 90°—whenever a valid turn input is detected. Because it avoids smooth, continuous rotation, snap turning reduces visual motion and lowers the risk of motion sickness. It is especially useful in confined or object-dense environments, where quick reorientation is needed without displacing the user. Snap turn is commonly paired with teleportation for maximum comfort and usability. Here is how it is set up.

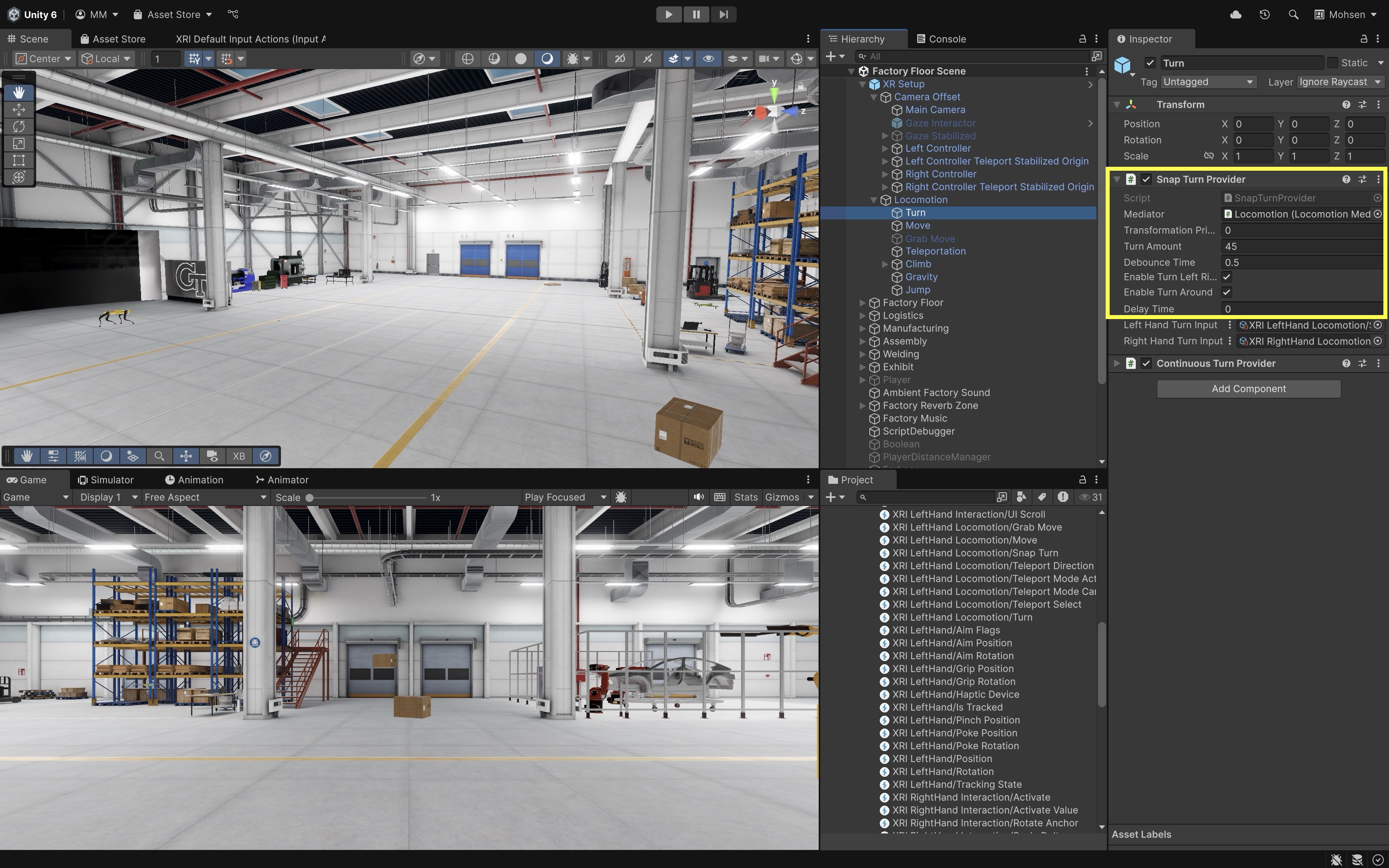

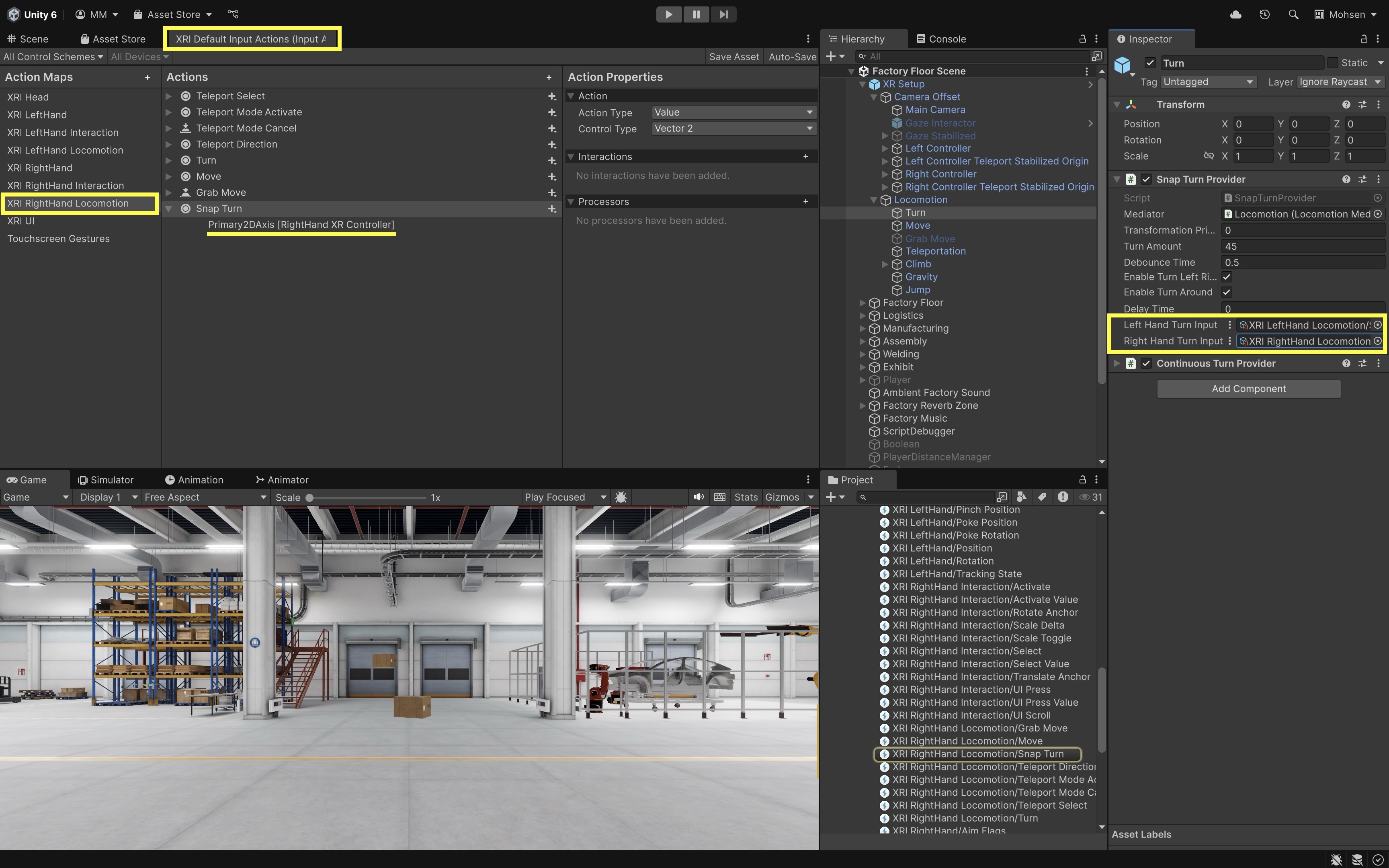

- Add a Snap Turn Provider:
  - Select your Turn GameObject.
  - In the Inspector, add the Snap Turn Provider component.
  - Assign the existing Locomotion Mediator to the Mediator field to ensure that the snap turn provider integrates smoothly with other locomotion systems.
  - Confirm that an XR Body Transformer component is attached to the same GameObject as the XR Rig. This component handles the actual rotational transformation requested by the snap turn provider.

  If your setup already includes a Continuous Turn Provider, be sure to disable or remove it. Only one turn provider should be active at a time to avoid conflicts between smooth and snap turning behaviors.

- Configure Snap Turn Parameters:
  - Transformation Priority: Set this to 0 unless another provider (like movement) needs to apply first. Lower values apply earlier.
  - Turn Amount: Choose the angular step for each snap rotation. Typical values are 45 degrees (more granular) or 90 degrees (coarser, faster rotation).
  - Debounce Time: Set a delay (e.g., 0.5 seconds) between accepted inputs to avoid accidental rapid turning.
  - Enable Turn Left Right: Enable it to allow left and right snap turns.
  - Enable Turn Around: Optionally enable this if you want to support a 180° turn action using a separate input. This is useful in tight or back-facing scenarios.

- Assign Input Actions:
  - The provider includes Left Hand Turn Input and Right Hand Turn Input fields, each expecting a float-based Input Action (not a 2D Vector).
  - Click the circle (object picker) icon next to the Right Hand Turn Input field.
  - In the search window, type turn and select XRI RightHand Locomotion/Snap Turn.
  - Leave the Left Hand Turn Input empty unless you wish to support turning via the left-hand joystick.
  - Ensure your input action is a Value type bound to the joystick X-axis (left = negative, right = positive).

- Run the Scene:
  - On Windows, connect your headset via the Link app and enter Play mode to test directly in the Editor.
  - On Mac, build and deploy the project to your headset using the Android build pipeline, then test it on the standalone device.

- Evaluate Snap Turn Behavior:
  - Use the joystick to perform snap turns and verify that the XR Rig rotates instantly by the set angle.
  - Observe whether the rotation happens cleanly around the rig’s Y-axis and does not affect the camera height or controller offsets.
  - Adjust the Turn Amount for the right balance between speed and control based on your scene’s layout (e.g., smaller angles in small spaces).

- Refine Timing and Feedback:
  - If the turn feels too rapid or unintentional, increase the Debounce Time to filter out noise or over-triggering.
  - Optionally, add haptic feedback, sound effects, or UI flashes when a turn is performed to improve user awareness.
  - Visual cues, such as a directional arc or quick snap effect, can help users stay oriented after turning in environments with similar or repetitive geometry.
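The interplay of Turn Amount and Debounce Time can be modeled as a small state machine. In the Python sketch below, the SnapTurn class and its threshold parameter are hypothetical. The threshold sets how far the stick must be pushed to count as a turn. The sign convention follows the input setup above: right is positive and left is negative.

```python
# Hypothetical model of snap turning; names are illustrative, not the XRI API.


class SnapTurn:
    """Minimal model of snap turning with a debounce window."""

    def __init__(self, turn_amount=45.0, debounce_time=0.5, threshold=0.5):
        self.turn_amount = turn_amount      # degrees per snap
        self.debounce_time = debounce_time  # seconds between accepted turns
        self.threshold = threshold          # stick deflection needed to count as input
        self._last_turn_time = None

    def update(self, yaw, stick_x, now):
        """Return the new yaw for the stick's X value sampled at time `now` (s)."""
        if abs(stick_x) < self.threshold:
            return yaw  # stick near center: no turn requested
        if (self._last_turn_time is not None
                and now - self._last_turn_time < self.debounce_time):
            return yaw  # inside the debounce window: ignore the input
        self._last_turn_time = now
        direction = 1.0 if stick_x > 0 else -1.0  # right = positive, left = negative
        return (yaw + direction * self.turn_amount) % 360.0


snap = SnapTurn()
yaw = 0.0
for t, stick in [(0.0, 1.0), (0.2, 1.0), (0.6, 1.0), (1.2, -1.0), (2.0, 0.1)]:
    yaw = snap.update(yaw, stick, t)
    print(f"t={t:.1f}s stick={stick:+.1f} -> yaw={yaw:.0f}")
```

Holding the stick past the threshold produces one turn per debounce window rather than one per frame, which is why raising Debounce Time tames over-triggering.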

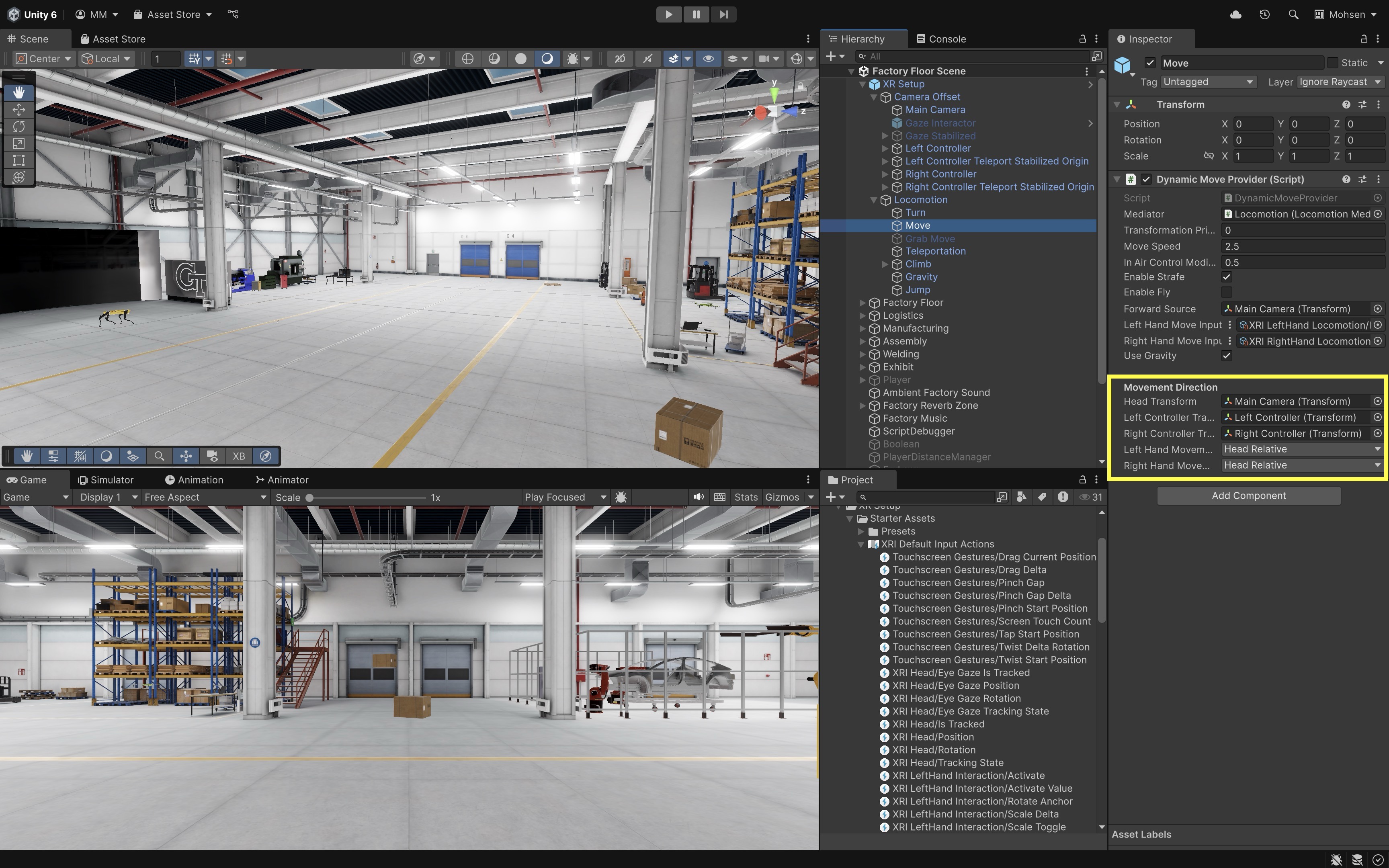

Dynamic Move Provider

The Dynamic Move Provider gradually translates the XR Origin in response to joystick input, allowing users to move smoothly through the virtual world. This form of locomotion mimics real-world walking and enhances immersion, but it must be tuned carefully to avoid causing discomfort. Movement typically follows the headset’s facing direction and supports both forward and strafing (sideways) motion. It’s ideal for exploration-based applications where natural, fluid movement is critical. Follow the steps below to set it up.

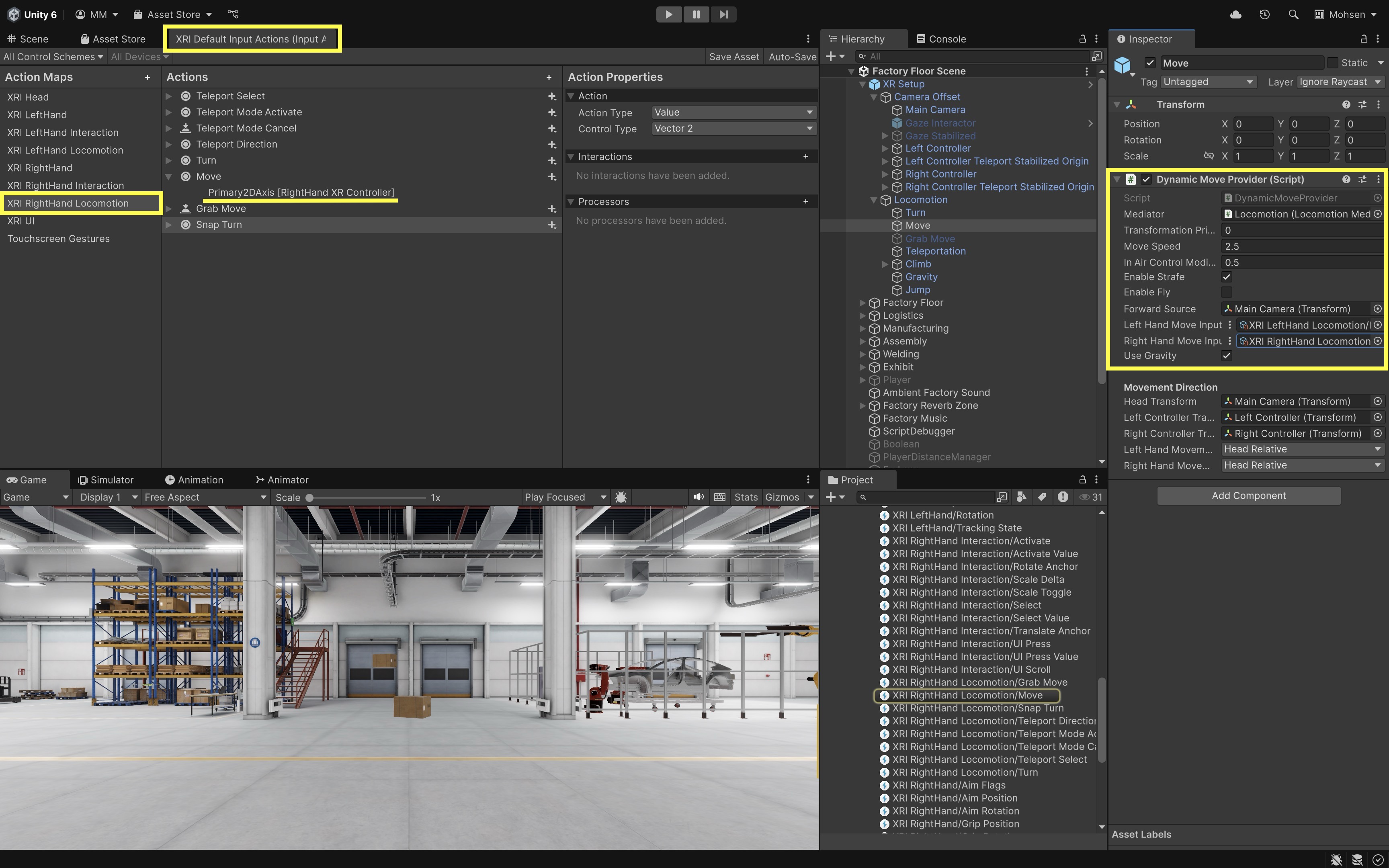

- Attach the Dynamic Move Provider:
  - Right-click on the Locomotion GameObject and select Create Empty. Name the empty GameObject Move.
  - In the Inspector, add the Dynamic Move Provider component.
  - In the Mediator field, assign the existing Locomotion Mediator in the scene. This ensures the movement system cooperates with other locomotion providers like turning or teleportation.
  - Make sure the XR Body Transformer is attached to the same GameObject as the XR Origin. It applies the translation updates triggered by the move provider.

- Configure Movement Parameters:
  - Transformation Priority: Set this value to define when this movement is applied relative to other providers (e.g., after turning).
  - Move Speed: Set the speed of movement in units per second (e.g., 2.5 for walking pace).
  - Enable Strafe: Enable this to allow lateral (sideways) movement from joystick input.
  - Forward Source: Assign the Main Camera (under the XR Setup) so that forward input aligns with the user’s current gaze direction.
  - Click the circle (object picker) icon next to the Left Hand Move Input field.
  - In the search window, type move and select XRI LeftHand Locomotion/Move from the list.
  - Ensure that this action is a 2D Vector bound to the X and Y axes of the left joystick (horizontal = strafe, vertical = forward/backward).
  - Repeat the input setup steps for the Right Hand Move Input field.

- Set Movement Direction:
  - Head Transform: Assign the Main Camera to align movement direction with the user’s gaze.
  - Left Controller Transform: Assign the Left Controller to allow controller-relative input when needed.
  - Right Controller Transform: Assign the Right Controller for the same purpose on the right hand.
  - Left/Right Hand Movement Direction: Set this to Head Relative so that joystick inputs always move the player relative to the user’s current viewing direction.

- Run the Scene:
  - On Windows, connect your headset via the Link app and enter Play mode to test directly in the Editor.
  - On Mac, build and deploy the project to your headset using the Android build pipeline, then run it on the device to test.

- Observe and Tune:
  - In the Scene view, move using the left-hand joystick and observe the XR Origin translating in space.
  - Confirm that forward movement matches the headset’s orientation and that the rig moves at a comfortable speed.
  - Tweak Move Speed and Strafe settings for responsiveness and comfort.

Use Debug.Log("XR Origin Position: " + transform.position); in the Update method of a script attached to the XR Origin to confirm that movement is applied in world space and correctly translates joystick input to the expected direction and magnitude. If movement appears off-angle, double-check that the Forward Source is correctly referencing the Main Camera.
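Head-relative movement projects the headset's forward and right directions onto the floor, then scales the stick input by Move Speed and frame time. The Python sketch below models that computation for a yaw-only head. It clamps the input magnitude to 1 so diagonal pushes are not faster than straight ones, which is a common approach assumed here. head_relative_move is a hypothetical name, not the Dynamic Move Provider's implementation.

```python
import math

# Hypothetical, yaw-only model of head-relative movement; not the XRI code.


def head_relative_move(position, stick, head_yaw, move_speed, dt, strafe=True):
    """Translate the rig on the floor plane relative to the head's yaw.

    stick = (x, y): x strafes right(+)/left(-), y moves forward(+)/backward(-).
    Coordinates follow Unity's left-handed, Y-up convention.
    """
    sx, sy = stick
    if not strafe:
        sx = 0.0  # Enable Strafe off: ignore sideways input
    # Clamp the input magnitude to 1 so diagonal pushes are not faster.
    magnitude = math.hypot(sx, sy)
    if magnitude > 1.0:
        sx, sy = sx / magnitude, sy / magnitude
    t = math.radians(head_yaw)
    forward = (math.sin(t), math.cos(t))   # head forward projected onto (x, z)
    right = (math.cos(t), -math.sin(t))    # head right projected onto (x, z)
    step = move_speed * dt
    x, y, z = position
    return (x + (forward[0] * sy + right[0] * sx) * step,
            y,  # this sketch leaves height unchanged
            z + (forward[1] * sy + right[1] * sx) * step)


# One second of full forward input at 2.5 units/s while looking along +X.
p = head_relative_move((0.0, 0.0, 0.0), (0.0, 1.0), 90.0, 2.5, 1.0)
print(tuple(round(a, 3) + 0.0 for a in p))
```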

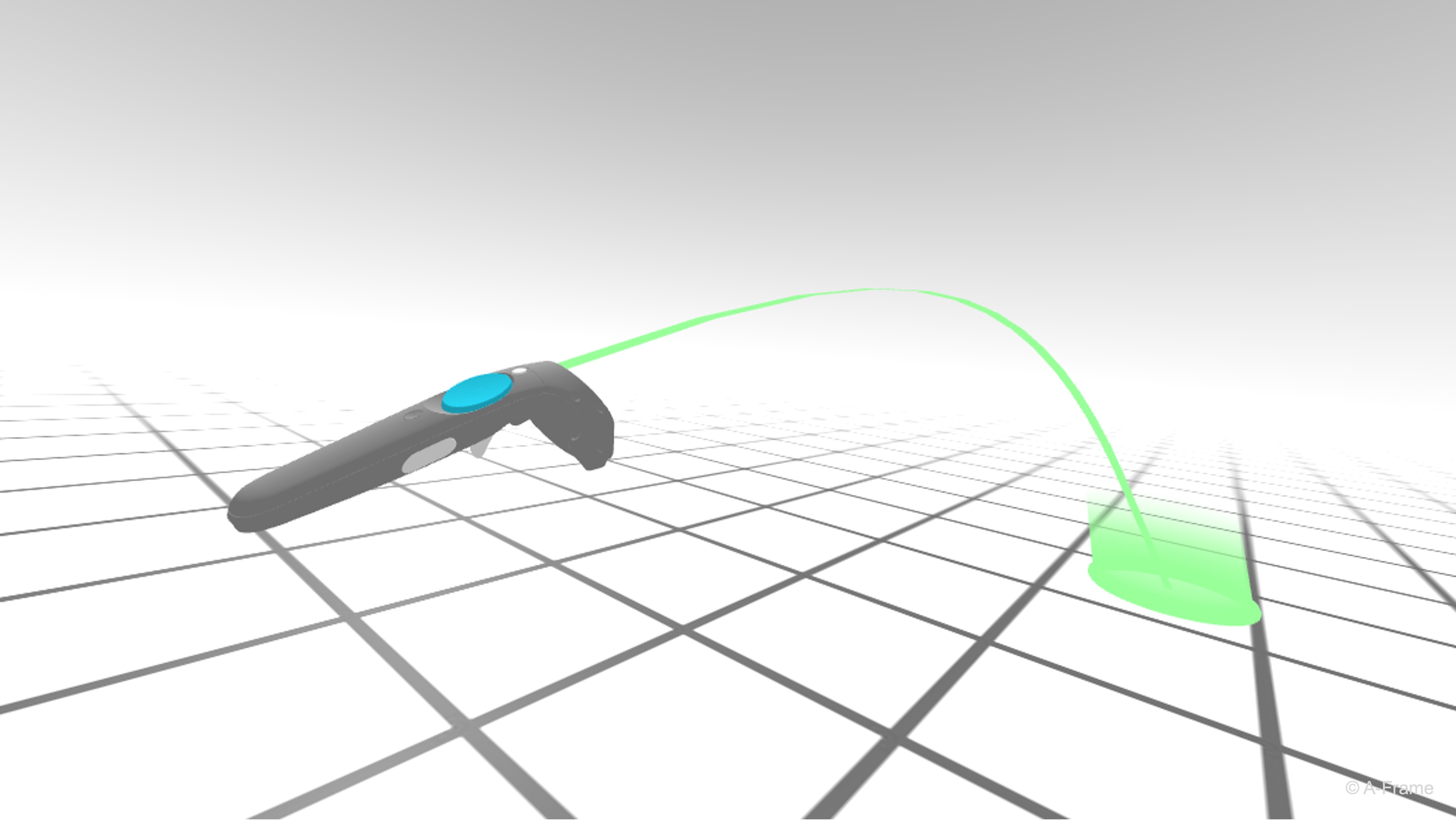

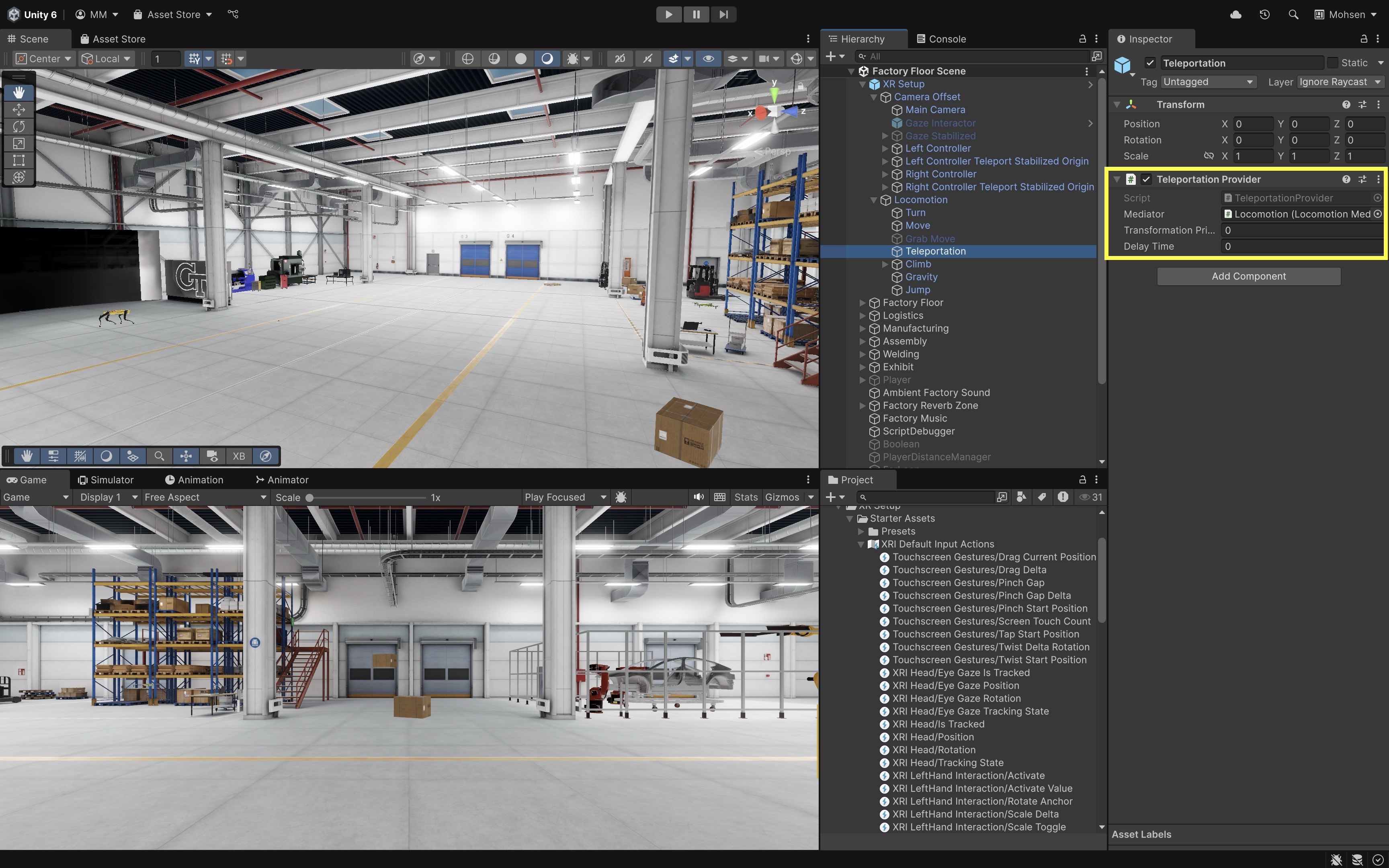

Teleportation Provider

The Teleportation Provider allows users to instantly relocate the XR Origin to a chosen position in the virtual environment. This method avoids the sensory conflict caused by continuous movement, making it highly effective at minimizing motion sickness. It typically works with Teleportation Area and Teleportation Anchor interactables and is triggered via a Ray Interactor on the controller. Teleportation is ideal for large environments, precision positioning, or setups where natural walking isn’t practical. To set up teleportation, follow the steps below.
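The Python sketch below is a simplified, yaw-only model of what relocating the XR Origin involves. It assumes the rig is shifted so that the user's head, rather than the rig's root, lands over the destination, so a user standing off-center in their play area still arrives at the chosen spot. The match_orientation values loosely mirror the None and Target Up And Forward options that teleport targets offer; on a flat floor, Target Up behaves like None in this model because only tilt would differ. The function, parameters, and mode strings are hypothetical, so check the toolkit's actual behavior for your version.

```python
import math

# Simplified, yaw-only teleport model; all names are hypothetical.


def rotate_xz(x, z, degrees):
    """Rotate a floor-plane vector (x, z) about Y (left-handed, Y-up)."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return (x * c + z * s, -x * s + z * c)


def teleport(rig_yaw, head_local, head_local_yaw,
             dest, dest_yaw, match_orientation="None"):
    """Return the rig's new ((x, z), yaw) so the user's head lands over dest.

    head_local: the head's (x, z) offset inside the play area (rig space).
    head_local_yaw: the head's yaw relative to the rig, in degrees.
    dest: the target floor position (x, z); dest_yaw: the target's facing.
    """
    head_world_yaw = rig_yaw + head_local_yaw
    if match_orientation == "TargetUpAndForward":
        head_world_yaw = dest_yaw  # face the target's forward direction
    # "None" keeps the current facing; on a flat floor "TargetUp" does too.
    new_rig_yaw = (head_world_yaw - head_local_yaw) % 360.0
    ox, oz = rotate_xz(head_local[0], head_local[1], new_rig_yaw)
    return (dest[0] - ox, dest[1] - oz), new_rig_yaw


# The user stands 0.5 m to the right of the play-area center.
print(teleport(0.0, (0.5, 0.0), 0.0, (10.0, 10.0), 90.0))
print(teleport(0.0, (0.5, 0.0), 0.0, (10.0, 10.0), 90.0, "TargetUpAndForward"))
```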

- Add a Teleportation Provider:
  - Right-click on the Locomotion GameObject and select Create Empty. Name the empty GameObject Teleportation.
  - In the Inspector, add the Teleportation Provider component.
  - In the Mediator field, assign the Locomotion Mediator from your scene. This ensures teleportation integrates cleanly with other movement types and manages access to the XR Origin without conflict.
  - Verify that the XR Origin GameObject also includes an XR Body Transformer. This component is responsible for applying the actual transformation, teleporting the user from one location to another.

- Configure Teleportation Settings:
  - Transformation Priority: Set this to 0 to apply this movement before other providers.
  - Delay Time: Optionally delay teleport execution (e.g., for fade-out effects).

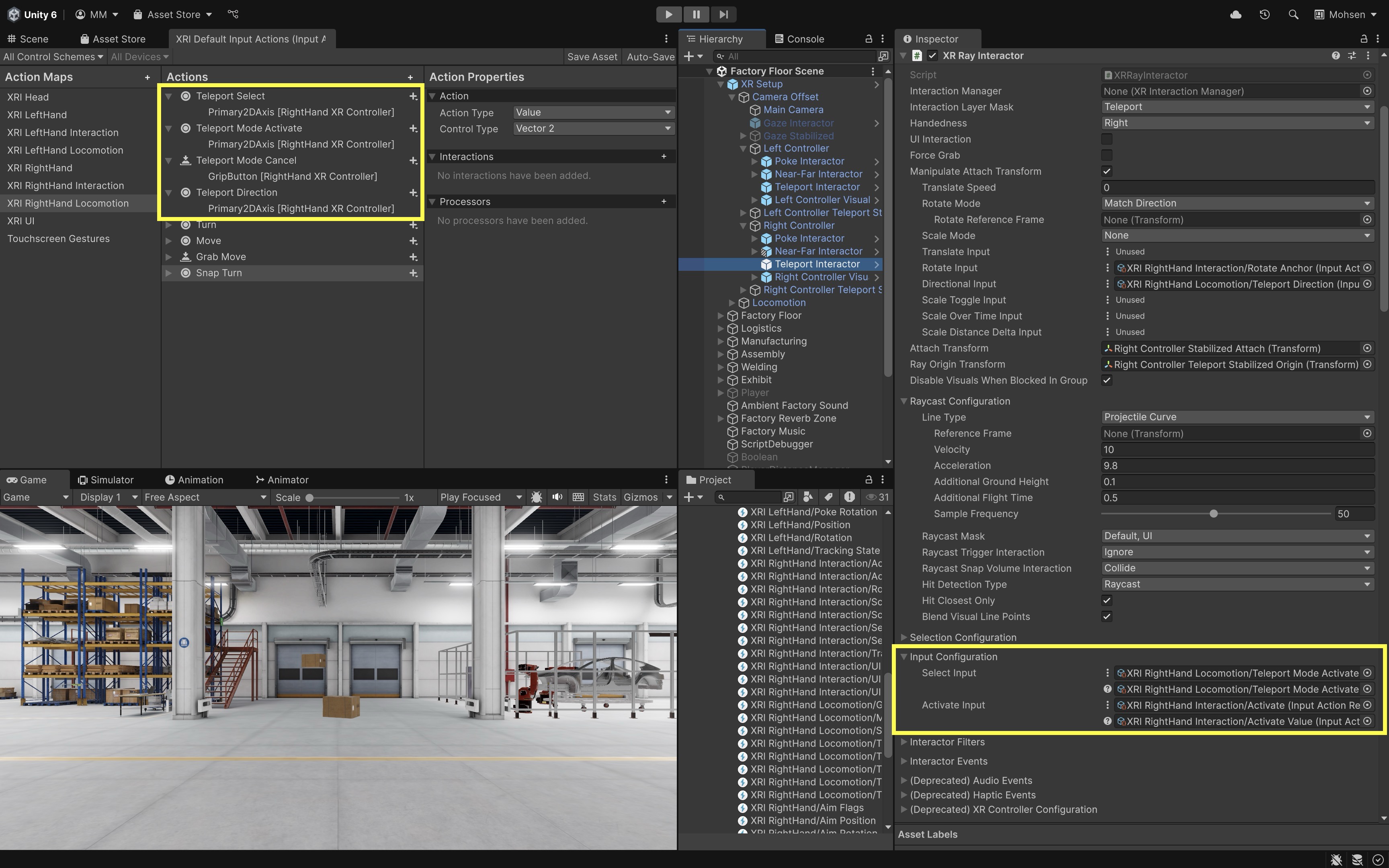

- Assign Controller Input:
  - Select the controller you want to use for teleportation (e.g., Right Controller).
  - Ensure the GameObject or one of its children has an XR Ray Interactor component (in our case, the Teleport Interactor GameObject).
  - Ensure the Interaction Layer Mask is set to Teleport so teleport targets are detected during setup and testing.
  - In the Input Configuration section of the XR Ray Interactor, click the circle icon next to Select Input and select XRI RightHand Locomotion/Teleport Mode Activate. This input tells the system when to enter teleport mode; in this setup, pushing the joystick forward activates teleport mode and displays the teleport arc.
  - In the same Input Configuration section, click the circle icon next to Activate Input and select XRI RightHand Interaction/Activate. Repeat for the second field and select XRI RightHand Interaction/Activate Value. These inputs confirm the teleportation action: pressing the button commits to the teleport destination and moves the XR Origin accordingly.

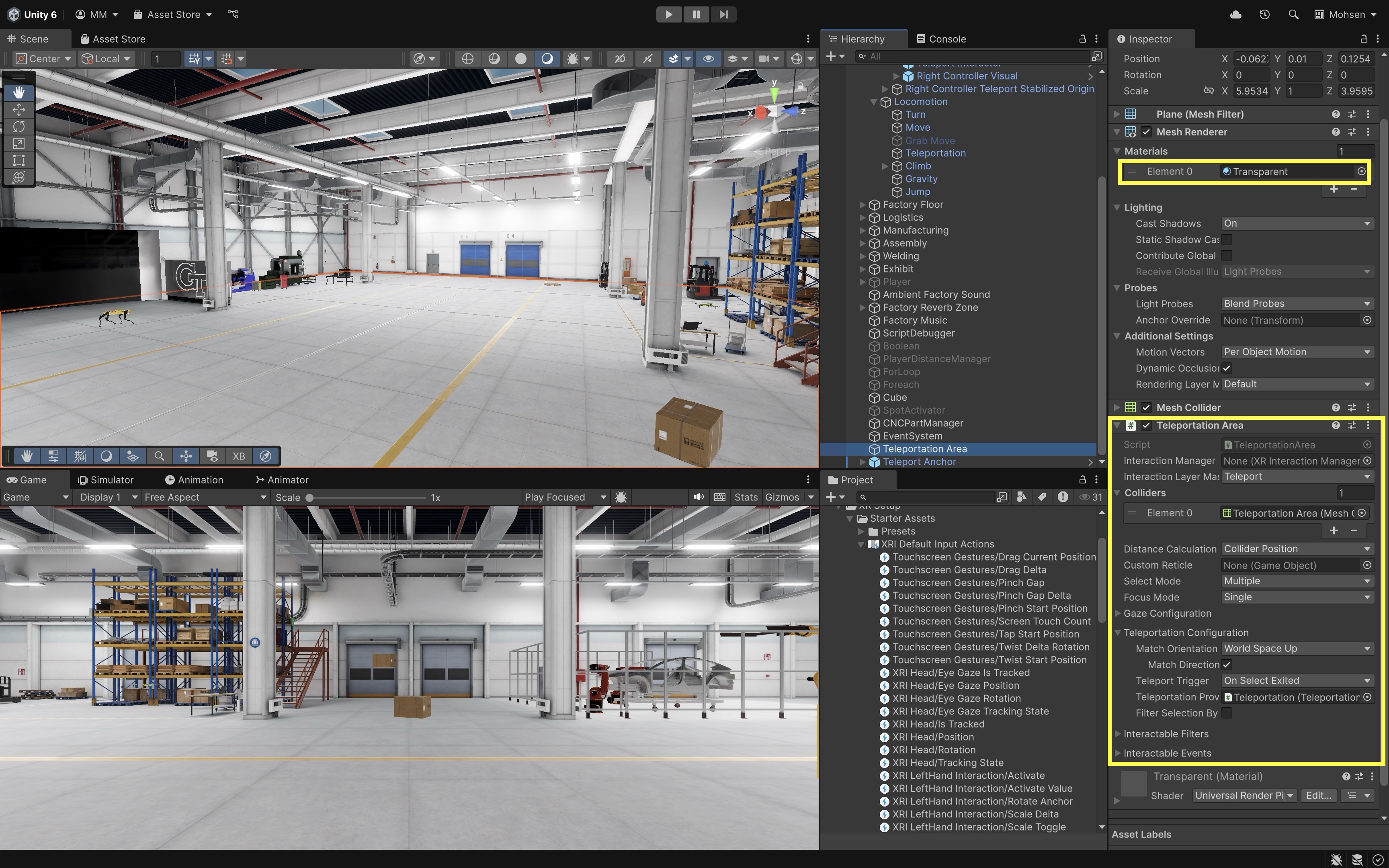

- Set Up

Teleportation Area:- Go to

GameObject > XR > Teleportation Areato create a surface users can teleport to. - Resize and position the area to match your walkable regions (e.g., floors, platforms).

- In the

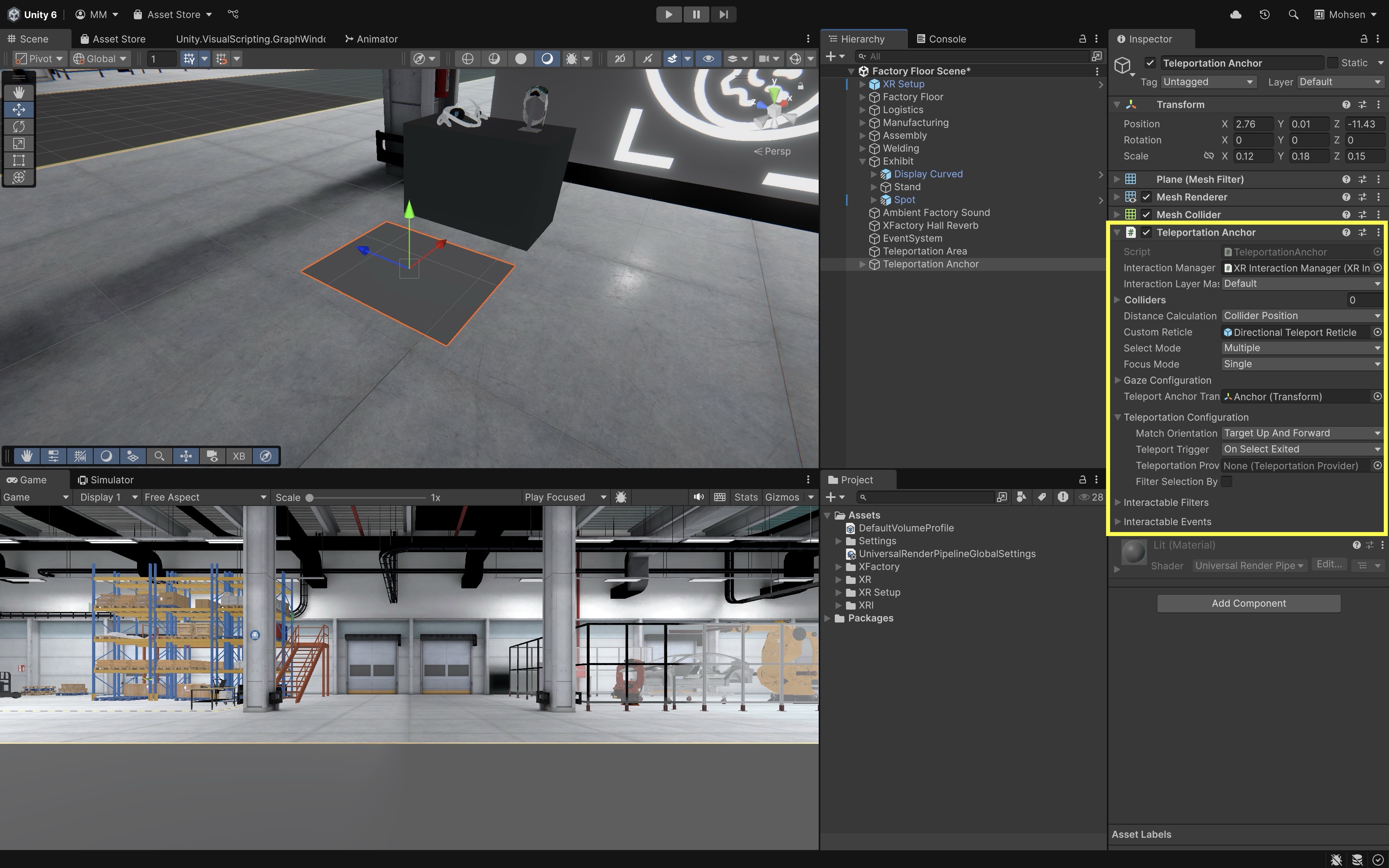

Teleportation Areacomponent, set theInteraction Managerfield if it is not already populated. Use the sameXR Interaction Managerused elsewhere in your scene. You can leave this field empty as well—it will be populated in runtime. Interaction Layer Mask: Defines which Interactors (e.g., ray, hand) can target this area based on matching interaction layers.Colliders: The physical colliders that mark where teleporting is valid; must exist for raycasts/hits.Distance Calculation Mode: Controls how “closest point” is measured for interaction (e.g., from interactor to surface hit).Custom Reticle: Lets you assign a specific reticle prefab (visual marker) instead of using the default.Select Mode: Determines how selection works (e.g.,Single: one interactor at a time,Multiple: allow many).Focus Mode: Defines how focus is tracked (e.g., whether multiple interactors can “focus”/hover at once).Match Orientation: Aligns the player’s forward direction to the surface normal or target orientation when teleporting.Teleport Trigger: Specifies the input event that actually executes the teleport (e.g., on select enter, on select release).

You can assign a custom material (e.g., transparent or semi-transparent) to the Mesh Renderer of the Teleportation Area to better match your scene's visual style or to make teleport targets less visually intrusive.
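To make the Teleport Trigger setting more concrete, the sketch below models one teleport attempt as an ordered stream of interaction events. For each trigger option, it reports the event at which the teleport would execute. This is a simplified, hypothetical model written in Python for illustration, not the XR Interaction Toolkit's implementation. The `TeleportTrigger` enum and the event names are assumptions, meant to mirror the On Select Entered, On Select Exited, On Activated, and On Deactivated options.

```python
from enum import Enum
from typing import List, Optional


class TeleportTrigger(Enum):
    # Hypothetical mirror of the Teleport Trigger options; each value is the
    # interaction event that would commit the teleport in this model.
    ON_SELECT_ENTERED = "select_entered"
    ON_SELECT_EXITED = "select_exited"
    ON_ACTIVATED = "activated"
    ON_DEACTIVATED = "deactivated"


def teleport_event_index(events: List[str], trigger: TeleportTrigger) -> Optional[int]:
    """Return the index of the event that executes the teleport, or None if it never fires."""
    for index, event in enumerate(events):
        if event == trigger.value:
            return index
    return None


# One attempt: aim at the area, push the joystick (select), press and release
# the activate button, then release the joystick.
attempt = ["hover_entered", "select_entered", "activated", "deactivated", "select_exited"]

for trigger in TeleportTrigger:
    print(f"{trigger.name:<18} -> teleports at event {teleport_event_index(attempt, trigger)}")
```

In this model, triggering on select exit lets the user aim and adjust the arc while holding the joystick, and the move happens only on release. Triggering on select enter would move the user as soon as the ray selects the area, leaving no time to aim.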

- Set Up

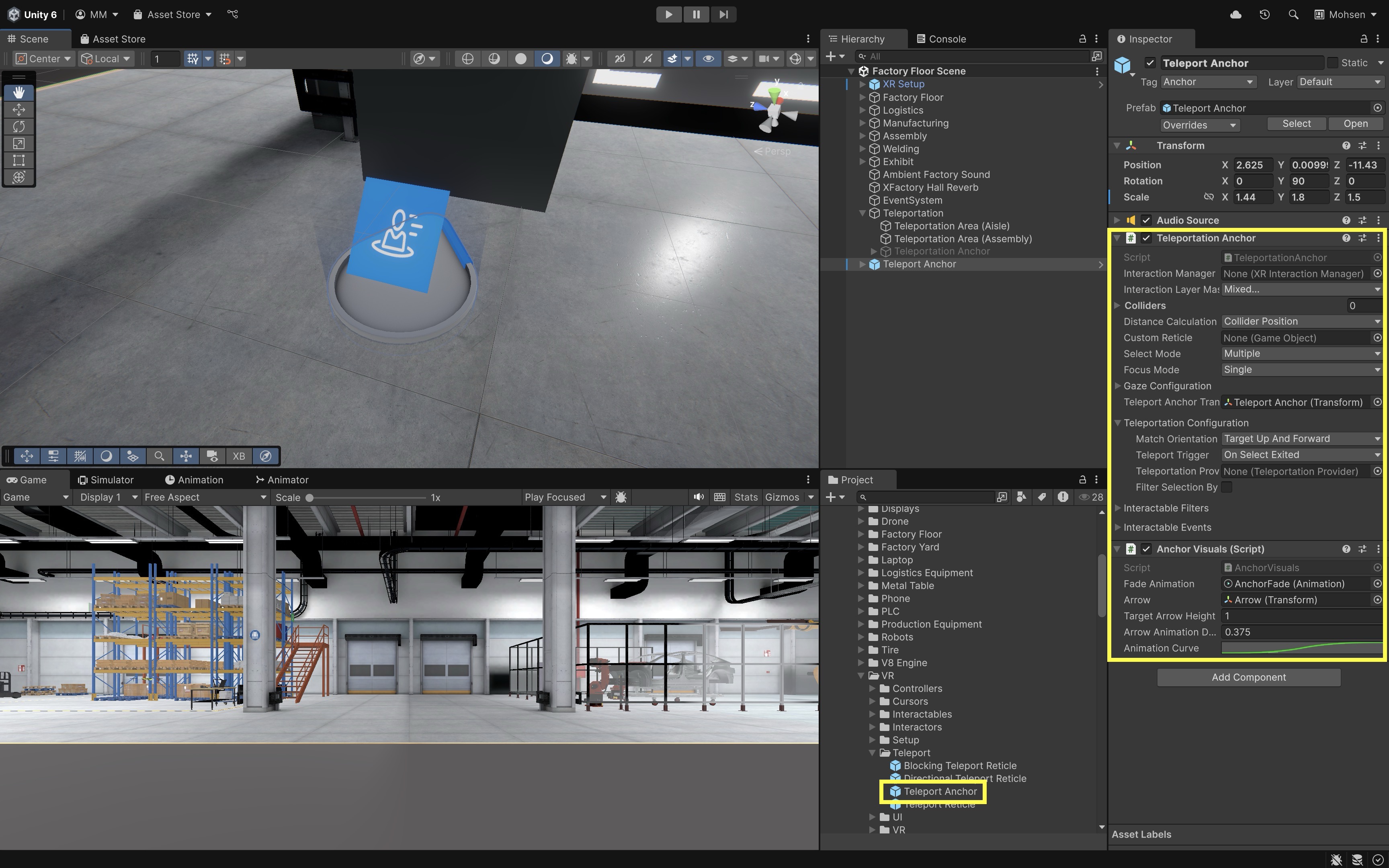

Teleportation Anchor(Optional):- Add a fixed landing spot with a defined orientation via

GameObject > XR > Teleportation Anchor. - Position and rotate the

Teleportation Anchorto control where and how the user will face after teleporting. - In the

Teleportation Anchorcomponent, assign theInteraction Managerfield using the sameXR Interaction Managerused in the rest of your scene (e.g., from the XR Origin or controller objects). Click the circle icon to select it from the scene. - Set the

Match Orientationdropdown based on how you want the user to be aligned after teleporting.Nonekeeps the user’s current orientation.Target Upaligns only the vertical direction (Y-axis).Target Up And Forwardaligns both vertical and forward directions to match the anchor’s transform.

- Optionally, you can replace the anchor with the Teleport Anchor prefab (provided by Unity) under Assets > XFactory > Prefabs > VR > Teleport to better visualize the position and direction of the teleportation anchor.
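The three Match Orientation options can be expressed as a small vector calculation. The Python sketch below illustrates the idea under stated assumptions and is not Unity's code. It follows Unity's convention of +Y as up and +Z as forward and represents directions as plain 3-tuples. The function name `resolve_orientation` and the mode strings are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def project_onto_plane(v: Vec3, normal: Vec3) -> Vec3:
    # Remove the component of v that lies along the (unit) plane normal.
    d = dot(v, normal)
    return (v[0] - d * normal[0], v[1] - d * normal[1], v[2] - d * normal[2])


def resolve_orientation(mode: str, user_up: Vec3, user_forward: Vec3,
                        target_up: Vec3, target_forward: Vec3) -> Tuple[Vec3, Vec3]:
    """Return the (up, forward) pair the rig would have after teleporting."""
    if mode == "None":
        # Keep the user's current orientation.
        return normalize(user_up), normalize(user_forward)
    if mode == "TargetUp":
        # Adopt the target's up axis; keep facing as close to the old forward as possible.
        up = normalize(target_up)
        flat = project_onto_plane(user_forward, up)
        if dot(flat, flat) < 1e-12:
            # Degenerate case (looking straight along the new up axis): use the target forward.
            flat = project_onto_plane(target_forward, up)
        return up, normalize(flat)
    if mode == "TargetUpAndForward":
        # Adopt both axes from the anchor's transform.
        return normalize(target_up), normalize(target_forward)
    raise ValueError(f"unknown mode: {mode}")


# User faces +Z; the anchor is rotated 90 degrees to face +X.
user_up, user_fwd = (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)
anchor_up, anchor_fwd = (0.0, 1.0, 0.0), (1.0, 0.0, 0.0)
for mode in ("None", "TargetUp", "TargetUpAndForward"):
    print(mode, resolve_orientation(mode, user_up, user_fwd, anchor_up, anchor_fwd))
```

Target Up matters mainly on tilted surfaces, where only the up axis should change. Target Up And Forward suits anchors placed in front of a workstation, where the user should face a specific direction on arrival.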


- Run the Scene and Test Teleportation:
- On Windows, connect your headset using the Link app and test teleportation directly in Play mode.
- On Mac, build and deploy the app to your headset via the Android build pipeline, then run it on the device.
- Aim the controller at a Teleportation Area or Anchor using the ray.
- Press the assigned teleportation input (e.g., joystick forward, trigger hold).
- Upon release or confirmation, the XR Origin should instantly move to the target location.


- Fine-Tune Interactions:
- Adjust teleport behavior for instant versus press-and-hold activation.
- Address interaction conflicts, such as between grabbing and UI control, by using separate interaction layers and input actions to avoid overlap.
- Test in a variety of lighting and environment conditions to ensure visual feedback remains visible and intuitive.
- Make the teleportation area's material transparent so it is less visually intrusive.

Optimize the Project for VR

Before proceeding to other topics in VR development, let’s follow a production-oriented workflow to optimize the Unity project for VR. These practices are essential for maintaining high performance (typically 90 FPS) in VR environments, ensuring a smooth, immersive, and comfortable user experience. Poorly optimized VR apps can lead to nausea, discomfort, and system overheating, especially on standalone devices like Meta Quest 3.

Measure Your Performance

- Set a Target Frame Rate: Most VR headsets need 90 FPS or higher for comfort, matching their display refresh rate. Refer to your device's specifications (e.g., Meta Quest, HTC Vive) and document your target FPS. Setting a clear target allows you to benchmark performance and detect regressions early in development.
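A target frame rate translates directly into a per-frame time budget of 1000 / FPS milliseconds. This is the number to compare against frame timings in the Profiler. The short Python sketch below computes the budget and counts how many measured frame times exceed it. The function names and sample frame times are hypothetical.

```python
from typing import Iterable


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available to produce one frame at the target frame rate."""
    return 1000.0 / target_fps


def frames_over_budget(frame_times_ms: Iterable[float], target_fps: float) -> int:
    """Count frames whose duration exceeds the per-frame budget."""
    budget = frame_budget_ms(target_fps)
    return sum(1 for t in frame_times_ms if t > budget)


for fps in (72, 90, 120):
    print(f"{fps} FPS -> {frame_budget_ms(fps):.2f} ms per frame")
# 72 FPS -> 13.89 ms per frame
# 90 FPS -> 11.11 ms per frame
# 120 FPS -> 8.33 ms per frame

# Hypothetical frame times (ms) captured while testing:
samples = [10.2, 11.0, 12.4, 10.8, 15.1]
print(frames_over_budget(samples, 90))  # 2
```

At 90 FPS, everything the frame needs, including rendering both eyes, has to fit in about 11.1 ms.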

-

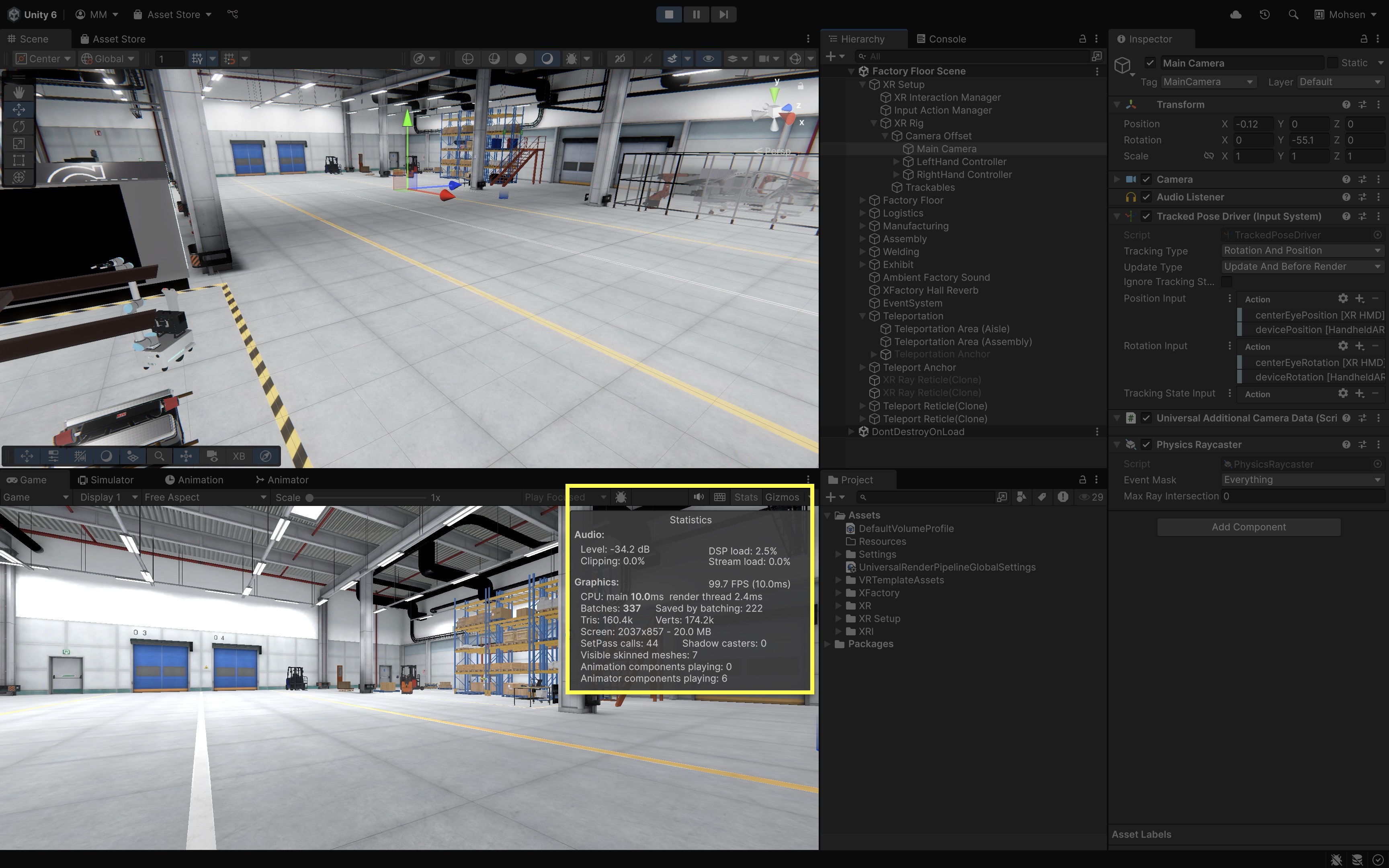

Monitor Frame Rate Using Stats or Script: Click the

Statsbutton in the top-right corner of theGameview to view real-time information such as FPS, draw calls, and triangle count. This is the quickest way to get a basic performance overview while testing in the Editor. You can also display FPS in the scene using a simple script with1.0f / Time.deltaTime, shown in aTextorTMP_TextUI element for live monitoring.
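The value 1.0f / Time.deltaTime reflects only the most recent frame, so an on-screen readout based on it flickers from frame to frame. A common refinement is to average over a sliding window of recent frame times. The Python sketch below shows only that logic. In Unity, the same calculation would run in a MonoBehaviour's Update, using Time.unscaledDeltaTime (or Time.deltaTime) as input. The class name `FpsCounter` and the 60-frame default window are assumptions.

```python
from collections import deque


class FpsCounter:
    """Sliding-window FPS estimate from per-frame delta times (in seconds)."""

    def __init__(self, window: int = 60) -> None:
        # A deque with maxlen automatically discards the oldest sample.
        self.samples = deque(maxlen=window)

    def add_frame(self, delta_time: float) -> None:
        if delta_time > 0.0:
            self.samples.append(delta_time)

    def instantaneous_fps(self) -> float:
        # Equivalent to 1.0f / Time.deltaTime for the latest frame.
        return 1.0 / self.samples[-1] if self.samples else 0.0

    def average_fps(self) -> float:
        # Frames rendered divided by the total time they took.
        total = sum(self.samples)
        return len(self.samples) / total if total > 0.0 else 0.0


counter = FpsCounter(window=4)
for dt in (0.011, 0.011, 0.022, 0.011):  # one 22 ms hitch among 11 ms frames
    counter.add_frame(dt)
print(round(counter.instantaneous_fps(), 1))  # 90.9
print(round(counter.average_fps(), 1))        # 72.7
```

The sketch divides frame count by total time instead of averaging per-frame FPS values. Averaging the per-frame values would overweight fast frames and hide hitches.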

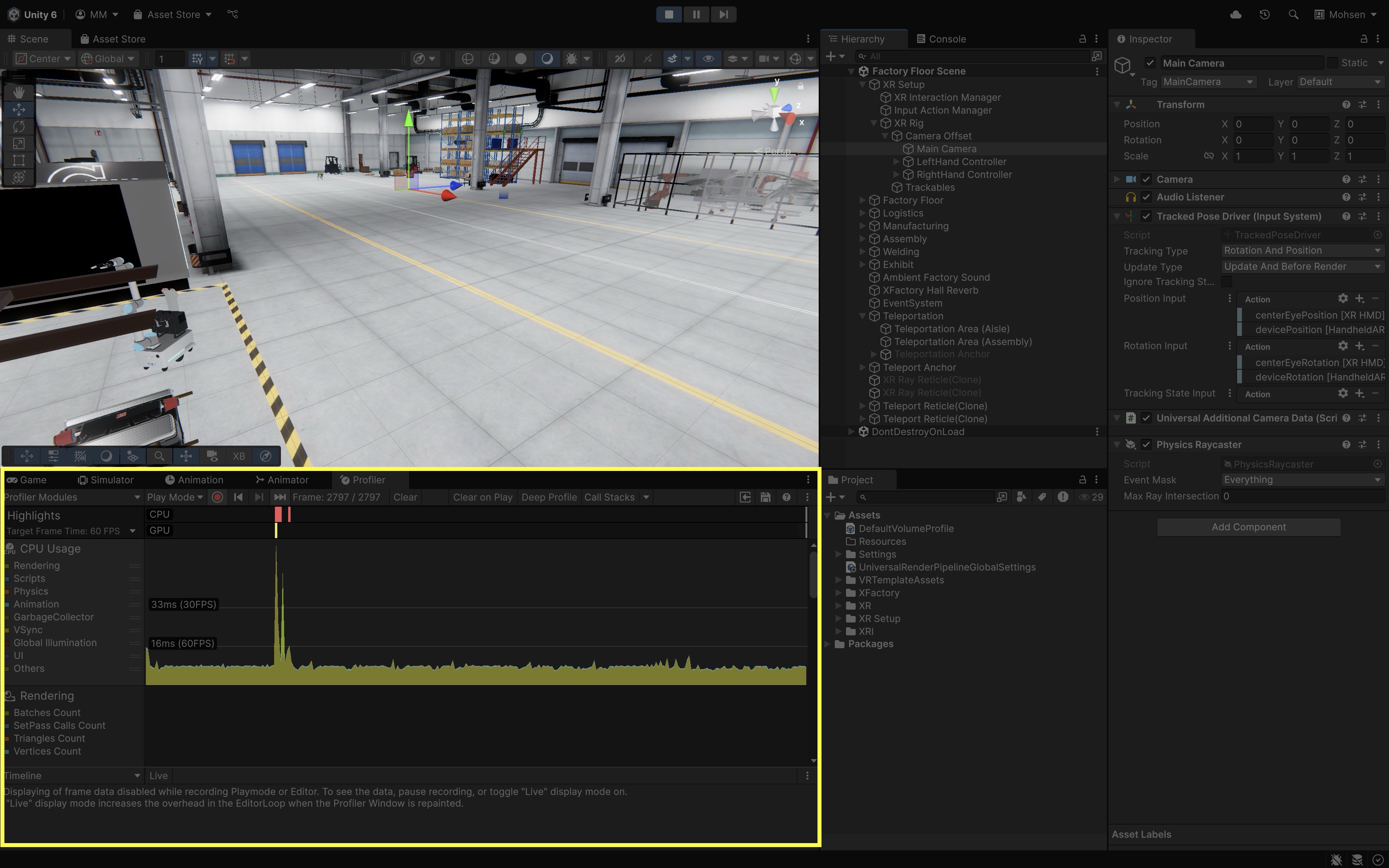

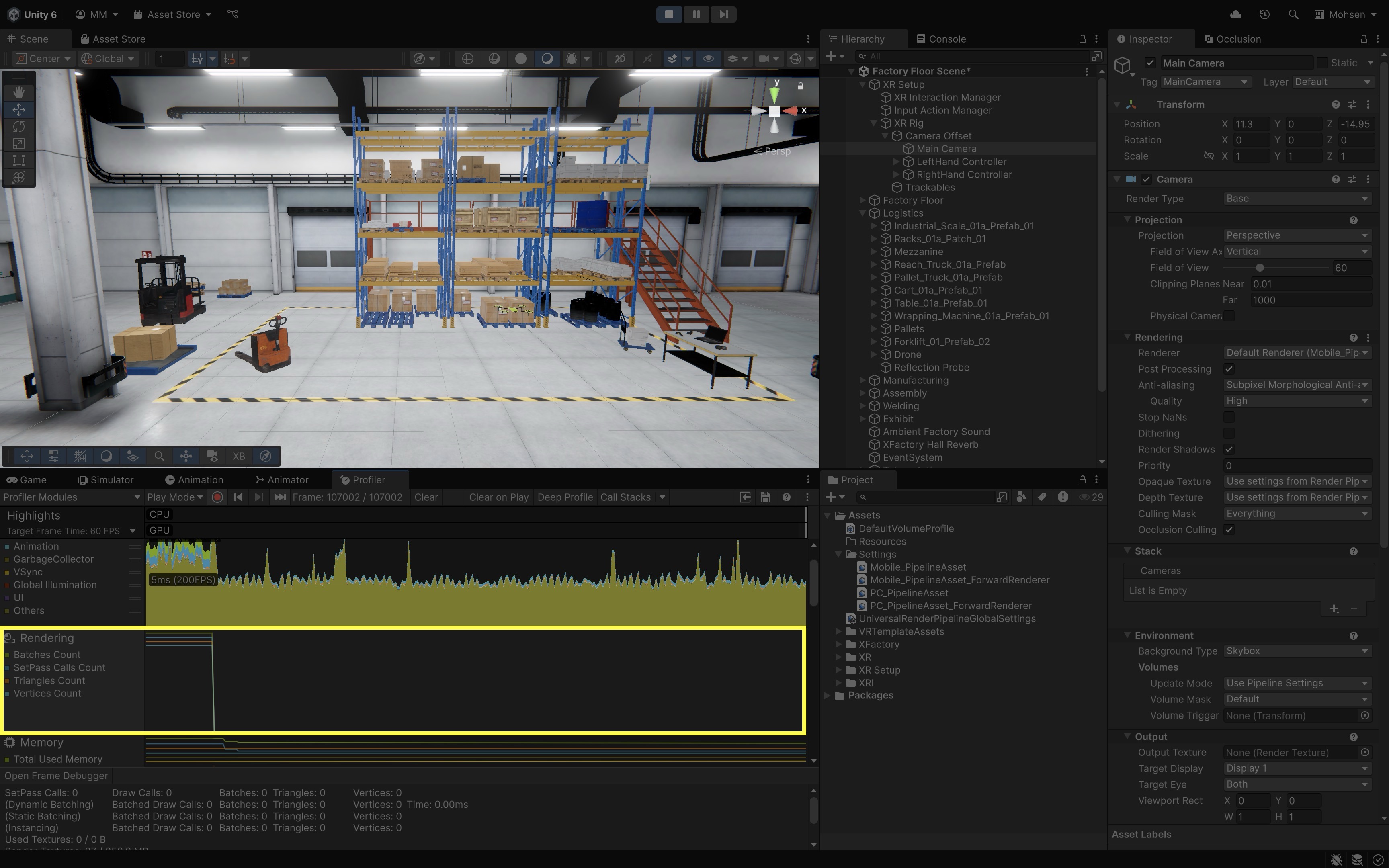

- Delve Deeper Into Performance Using Profiler: For a deeper look into CPU, GPU, memory, rendering, and script performance, open the Unity Profiler via Window > Analysis > Profiler. It provides real-time charts and timelines, helping you pinpoint bottlenecks while your application is running. These tools are essential for identifying performance issues early, before building for and testing on VR hardware.

- Test on Device: Build and run on your actual VR hardware. Use platform tools like Meta Quest Developer Hub (formerly Oculus Developer Hub) or the SteamVR Performance Graph to verify in-headset FPS. Editor performance is not representative of headset runtime; real-world testing reveals the actual user experience and thermal limits.

Minimize Draw Calls

- Check Draw Calls in Stats: Open the Game View > Stats window. The “Batches” value shows your draw call count. Target fewer than 175 draw calls for smooth VR. In the figure above, for example, the number of batches is over 300. That is too high for VR, especially if you are targeting standalone headsets like Meta Quest 3 or HTC Vive Focus. High draw call counts increase CPU overhead and reduce frame rates, particularly on mobile hardware.

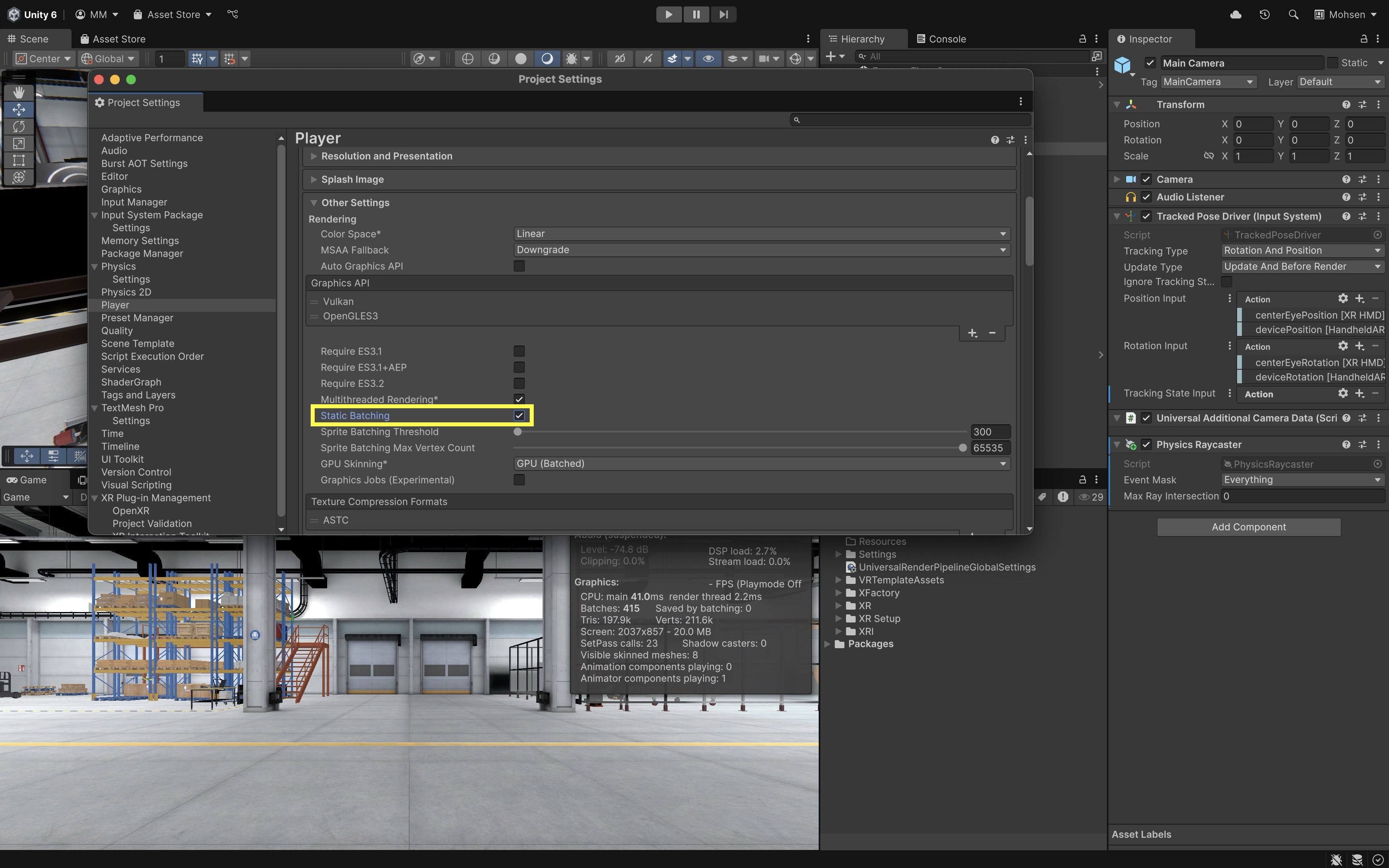

- Use Static Batching: Go to Edit > Project Settings > Player > Other Settings and enable Static Batching. This feature lets Unity group static objects, reducing the CPU work needed to send rendering instructions.

- Mark Static Objects: In the Hierarchy, select non-moving objects. In the Inspector, enable the Static checkbox to let Unity batch them. Marking objects as static tells the engine that they do not change, allowing more aggressive performance optimizations like lightmapping and occlusion culling.
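The budgets in this chapter are easy to turn into a quick check: fewer than about 175 batches and, as stated in the learning outcomes, fewer than about 500k triangles. The Python sketch below compares values read from the Stats window against those thresholds. The `FrameStats` type, the `budget_report` function, and the example numbers are hypothetical. Adjust the thresholds for your target device.

```python
from dataclasses import dataclass
from typing import List

# Rough targets from this chapter; tune them for your headset.
MAX_BATCHES = 175
MAX_TRIANGLES = 500_000


@dataclass
class FrameStats:
    batches: int    # "Batches" in the Game view Stats window
    triangles: int  # "Tris" in the Game view Stats window


def budget_report(stats: FrameStats,
                  max_batches: int = MAX_BATCHES,
                  max_triangles: int = MAX_TRIANGLES) -> List[str]:
    """Return one message per exceeded budget (an empty list means within budget)."""
    problems = []
    if stats.batches > max_batches:
        problems.append(f"batches {stats.batches} > {max_batches}: "
                        "enable static batching and mark non-moving objects Static")
    if stats.triangles > max_triangles:
        problems.append(f"triangles {stats.triangles:,} > {max_triangles:,}: "
                        "simplify meshes or add LOD Groups")
    return problems


print(budget_report(FrameStats(batches=310, triangles=420_000)))
print(budget_report(FrameStats(batches=150, triangles=380_000)))  # []
```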

Reduce Polygon Count

-

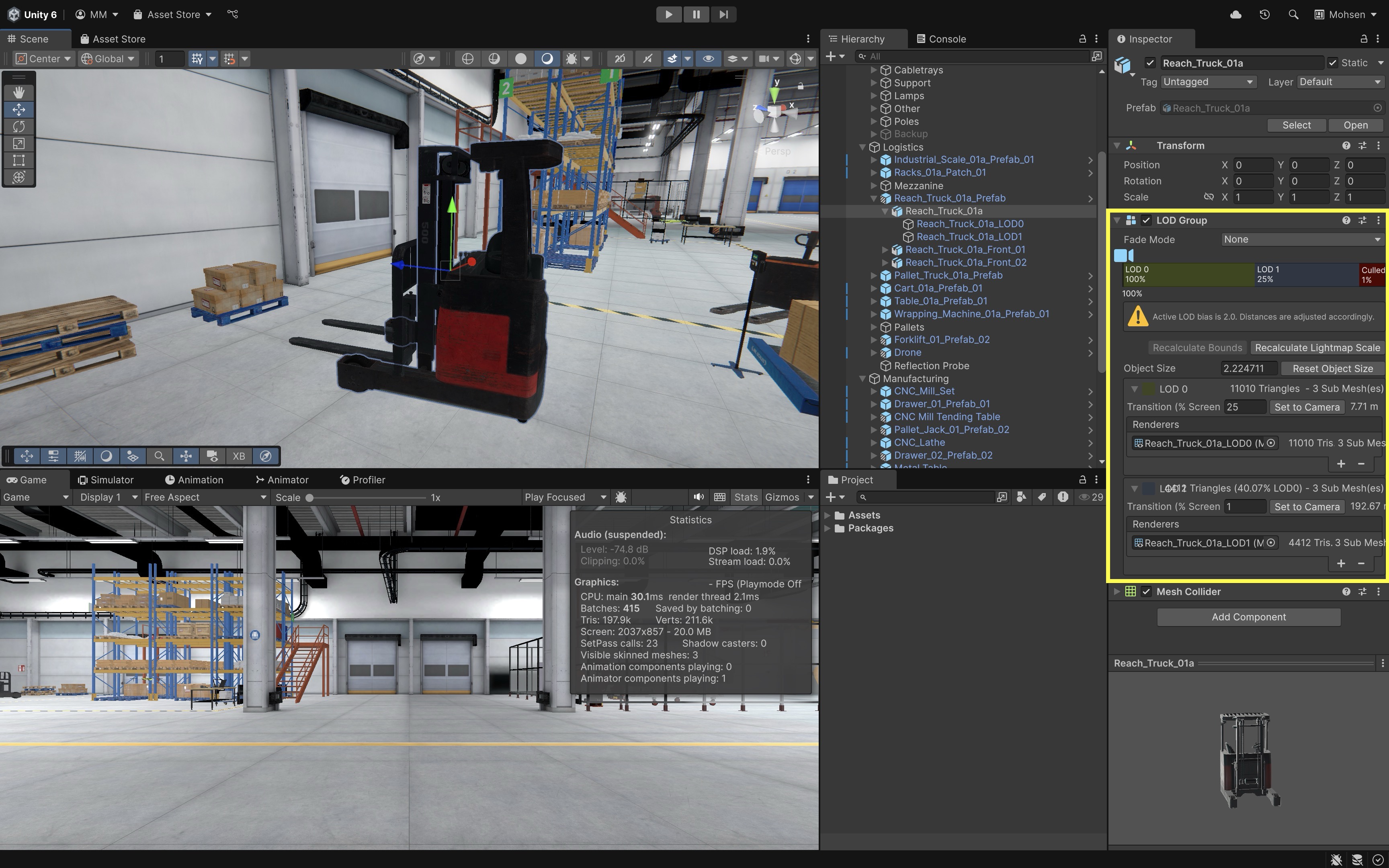

Analyze Triangle Count: In the

Statsview, checkTris(triangle count). UseScene View > Wireframemode to inspect object complexity. Lower triangle counts reduce GPU load, making rendering faster and more efficient, particularly for scenes with many objects. -

- Inspect Meshes: Select objects and check their Mesh Filter in the Inspector. View the mesh in the Project window to see triangle details. Understanding your models' complexity helps you spot and replace unnecessary high-poly meshes early in the pipeline.

- Simplify High-Poly Assets: Use modeling tools like Blender or optimization tools like Simplygon to reduce polycounts. Use LOD Group components (Add Component > Rendering > LOD Group) for objects that can have lower detail at a distance. This maintains high visual quality up close while reducing rendering costs when objects are farther from the camera.
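An LOD Group switches levels based on how much of the screen an object covers. The Python sketch below approximates this with a simple pinhole-camera estimate: the object's height divided by the height of the view frustum at the object's distance. This is an illustration under assumptions. Unity's own calculation uses the renderer bounds and the LOD Bias quality setting, and the transition values and function names here are hypothetical.

```python
import math
from typing import Optional, Sequence


def screen_relative_height(object_height: float, distance: float,
                           vertical_fov_deg: float) -> float:
    """Approximate fraction of the screen height an object covers."""
    frustum_height = 2.0 * distance * math.tan(math.radians(vertical_fov_deg) / 2.0)
    return object_height / frustum_height


def select_lod(relative_height: float, transitions: Sequence[float]) -> Optional[int]:
    """Pick the first LOD whose transition height is met; None means culled.

    transitions are in descending order, e.g. [0.6, 0.3, 0.1] for LOD0..LOD2.
    """
    for level, threshold in enumerate(transitions):
        if relative_height >= threshold:
            return level
    return None


transitions = [0.6, 0.3, 0.1]  # hypothetical LOD0, LOD1, LOD2 cut-offs
for d in (1.0, 5.0, 50.0):
    h = screen_relative_height(object_height=2.0, distance=d, vertical_fov_deg=90.0)
    print(d, round(h, 3), select_lod(h, transitions))
# 1.0 1.0 0
# 5.0 0.2 2
# 50.0 0.02 None
```

Because screen coverage falls off roughly with 1 / distance, a 2 m machine drops to a low-detail mesh within a few meters. Most of the scene's triangles can therefore come from the few objects near the user.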

Optimize Textures

-

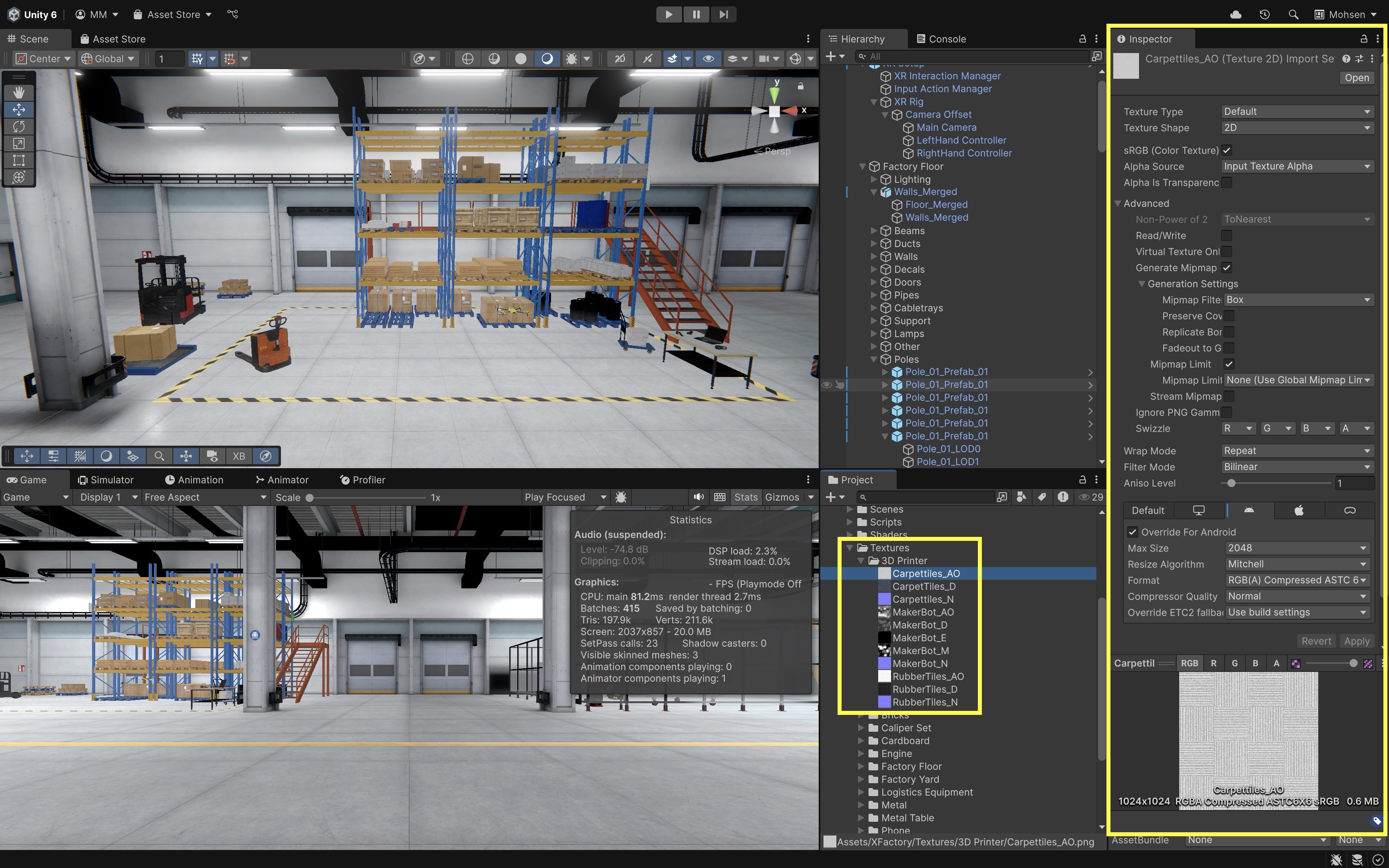

Adjust Import Settings: Select textures in the

Projectview. In theInspector, adjustMax Size,Format, andCompression. Smaller and compressed textures load faster, use less memory, and reduce the bandwidth needed for GPU rendering. -

- Enable Mip Maps: In the texture import settings, check Generate Mip Maps. Mip maps store progressively smaller copies of a texture so that distant surfaces sample a lower-resolution level. This reduces shimmering (aliasing) and texture bandwidth, at the cost of about one third more texture memory.

- Use Anisotropic Filtering: Set Aniso Level to 2–8 for floors or angled surfaces to improve sharpness at oblique views. This reduces blurring of textures viewed from shallow angles, such as factory floors or walls, enhancing realism.
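To see why Max Size and mip maps matter for memory, the Python sketch below estimates the size of an uncompressed texture and of its full mip chain, where each level halves the width and height down to 1×1. The 4 bytes per pixel corresponds to uncompressed RGBA32. Compressed formats such as ASTC or ETC2 use far less memory, so treat the numbers only as upper bounds for comparison. The function names are hypothetical.

```python
def mip_level_count(width: int, height: int) -> int:
    """Number of levels in a full mip chain: floor(log2(max side)) + 1."""
    return max(width, height).bit_length()


def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4,
                  mipmaps: bool = True) -> int:
    """Estimated memory for an uncompressed texture, optionally with its full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        # Each mip level halves both dimensions, never going below 1.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


MIB = 1024 * 1024
for size in (2048, 1024):
    base = texture_bytes(size, size, mipmaps=False)
    full = texture_bytes(size, size)
    print(f"{size}x{size}: {mip_level_count(size, size)} mips, "
          f"{base / MIB:.1f} MiB base, {full / MIB:.1f} MiB with mips")
# 2048x2048: 12 mips, 16.0 MiB base, 21.3 MiB with mips
# 1024x1024: 11 mips, 4.0 MiB base, 5.3 MiB with mips
```

Halving Max Size cuts memory to a quarter, while mip maps add about one third. That extra third is usually worth paying for the reduced aliasing and bandwidth at a distance.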

Optimize Particles and Effects

- Simplify Particle Systems: Use Universal Render Pipeline/Particles/Unlit shaders for particles. Minimize particle count and lifetime. Complex particle systems are expensive and can quickly tank performance in VR; unlit shaders avoid lighting overhead.

- Limit Post-Processing Effects: Add a Volume to the Main Camera, then create and assign a Volume Profile. Use only lightweight effects like Color Adjustments or Bloom, and avoid Motion Blur, Chromatic Aberration, and Lens Distortion. Post-processing can dramatically affect performance and user comfort, and some effects are disorienting or nauseating in VR.
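Particle cost grows with the number of live particles. In a steady state, that number is roughly the emission rate multiplied by the lifetime (Little's law), capped by the system's maximum particle count. The Python sketch below states this estimate and checks it with a small frame-by-frame simulation at 90 FPS. It models only emission and expiry, not Unity's actual Particle System, and the function names and parameter values are hypothetical.

```python
def estimate_live_particles(rate: float, lifetime: float, max_particles: int) -> float:
    """Steady-state live particles: emission rate x lifetime, capped at max_particles."""
    return min(rate * lifetime, max_particles)


def simulate_live_particles(rate: float, lifetime: float, max_particles: int,
                            fps: float = 90.0, duration: float = 10.0) -> int:
    """Step emission and expiry once per frame and return the live count at the end."""
    dt = 1.0 / fps
    ages = []      # age in seconds of each live particle
    to_emit = 0.0  # fractional particles owed by the emission rate
    for _ in range(int(duration * fps)):
        # Age every particle and drop those that reached their lifetime.
        ages = [age + dt for age in ages if age + dt < lifetime]
        to_emit += rate * dt
        while to_emit >= 1.0:
            to_emit -= 1.0
            if len(ages) < max_particles:  # emission is skipped at the cap
                ages.append(0.0)
    return len(ages)


print(estimate_live_particles(50, 2.0, 1000))  # 100.0
print(simulate_live_particles(50, 2.0, 1000))  # close to 100
print(estimate_live_particles(50, 2.0, 60))    # 60
```

Halving either the emission rate or the lifetime halves the live particle count, which is why the text recommends trimming both.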

Apply Efficient Anti-Aliasing

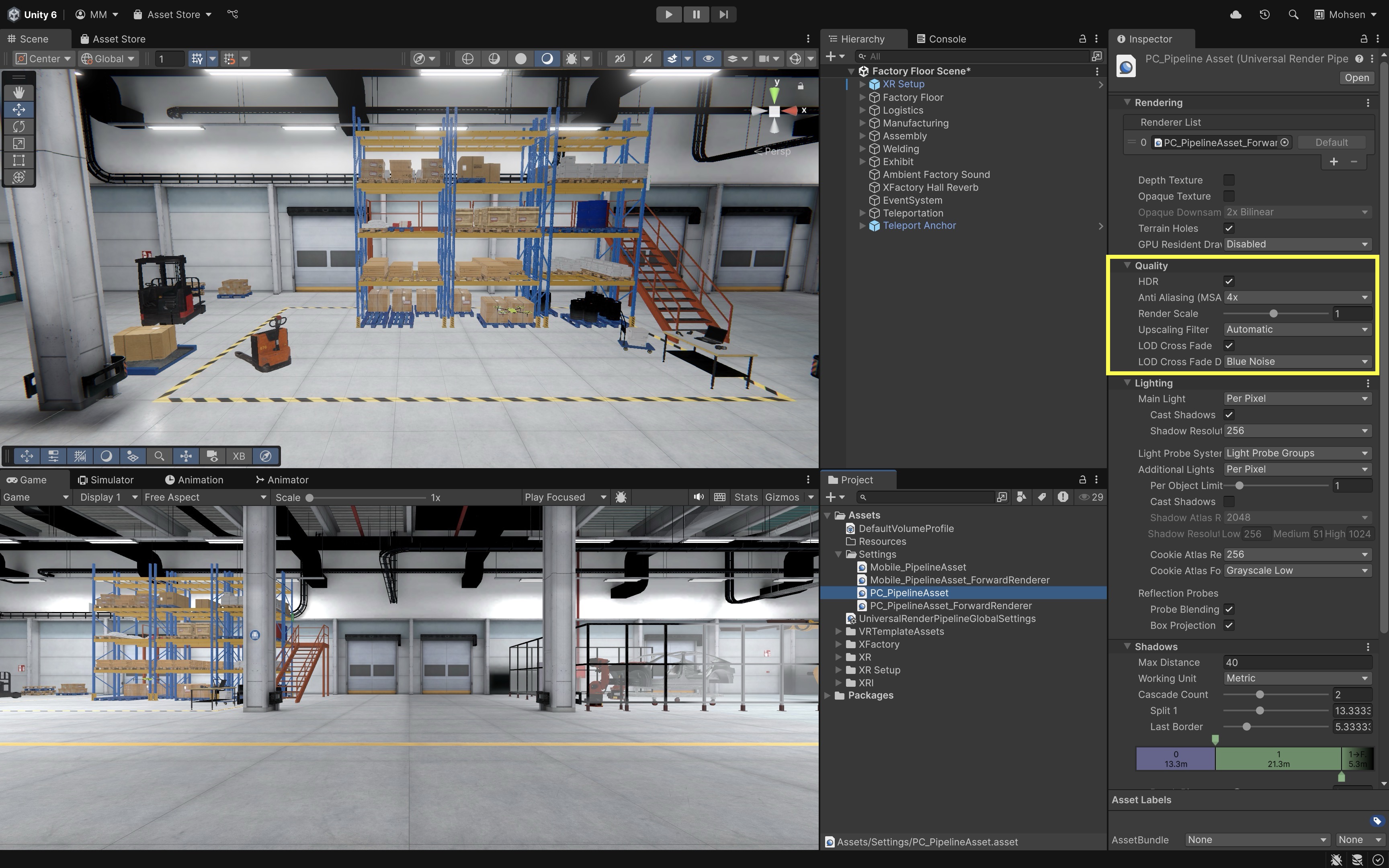

- Set MSAA in URP Asset: Locate your UniversalRenderPipelineAsset (e.g., URP-HighQuality). In the Inspector under Quality, set MSAA to 4x. Multi-sample anti-aliasing smooths jagged edges with a minimal performance hit, which is especially important on headset displays.

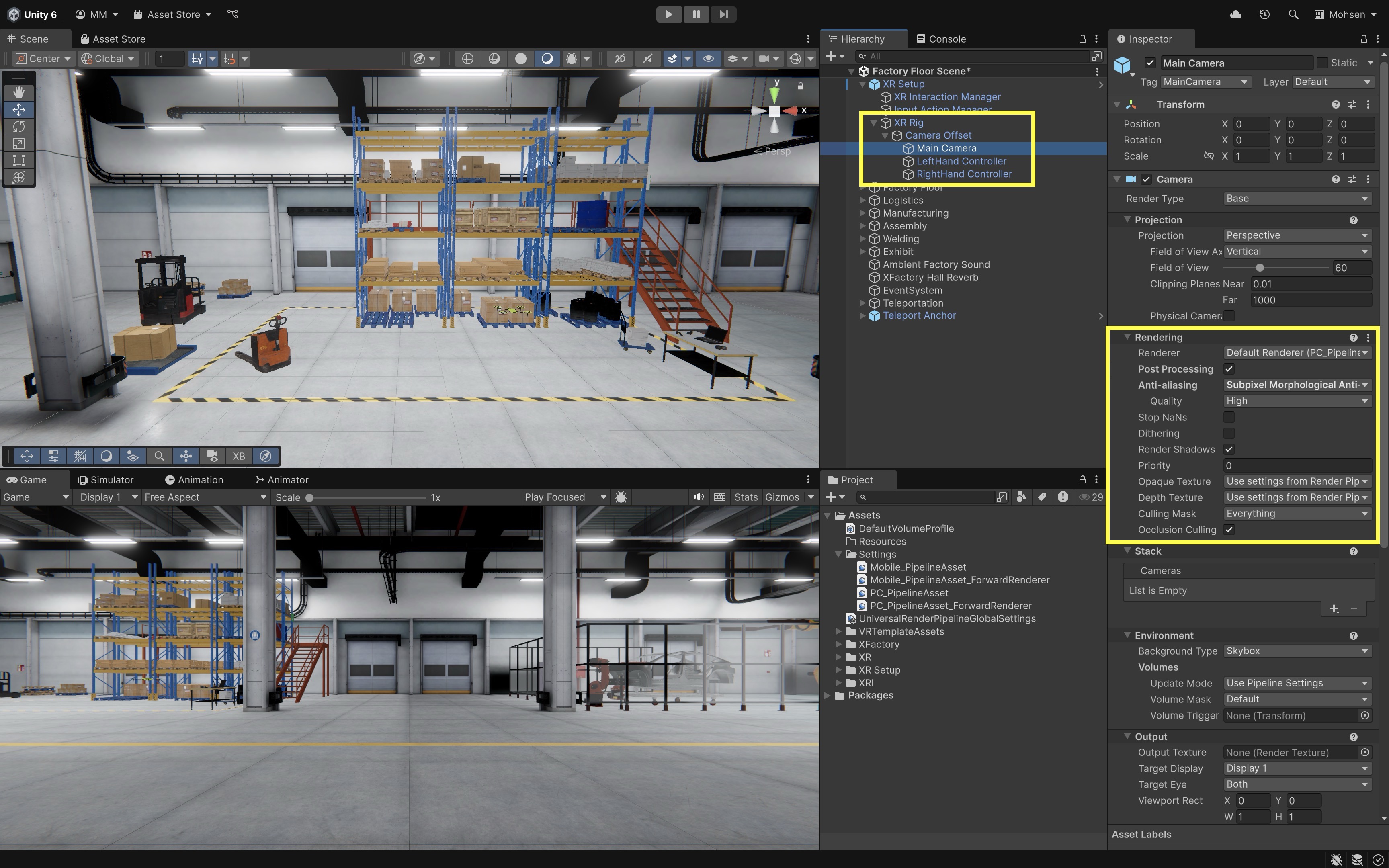

- Enable FXAA on Camera: On the Main Camera, enable Post Processing. Under Anti-Aliasing, choose FXAA or SMAA as lightweight options. These screen-space algorithms are efficient and help polish visual quality without sacrificing performance.

- Adjust High-Detail Materials: For detailed objects like machines or robots, use baked Reflection Probes and simpler shaders. Real-time reflections and complex PBR shaders are GPU-intensive and should be avoided or simulated in VR contexts.

Additional Tips

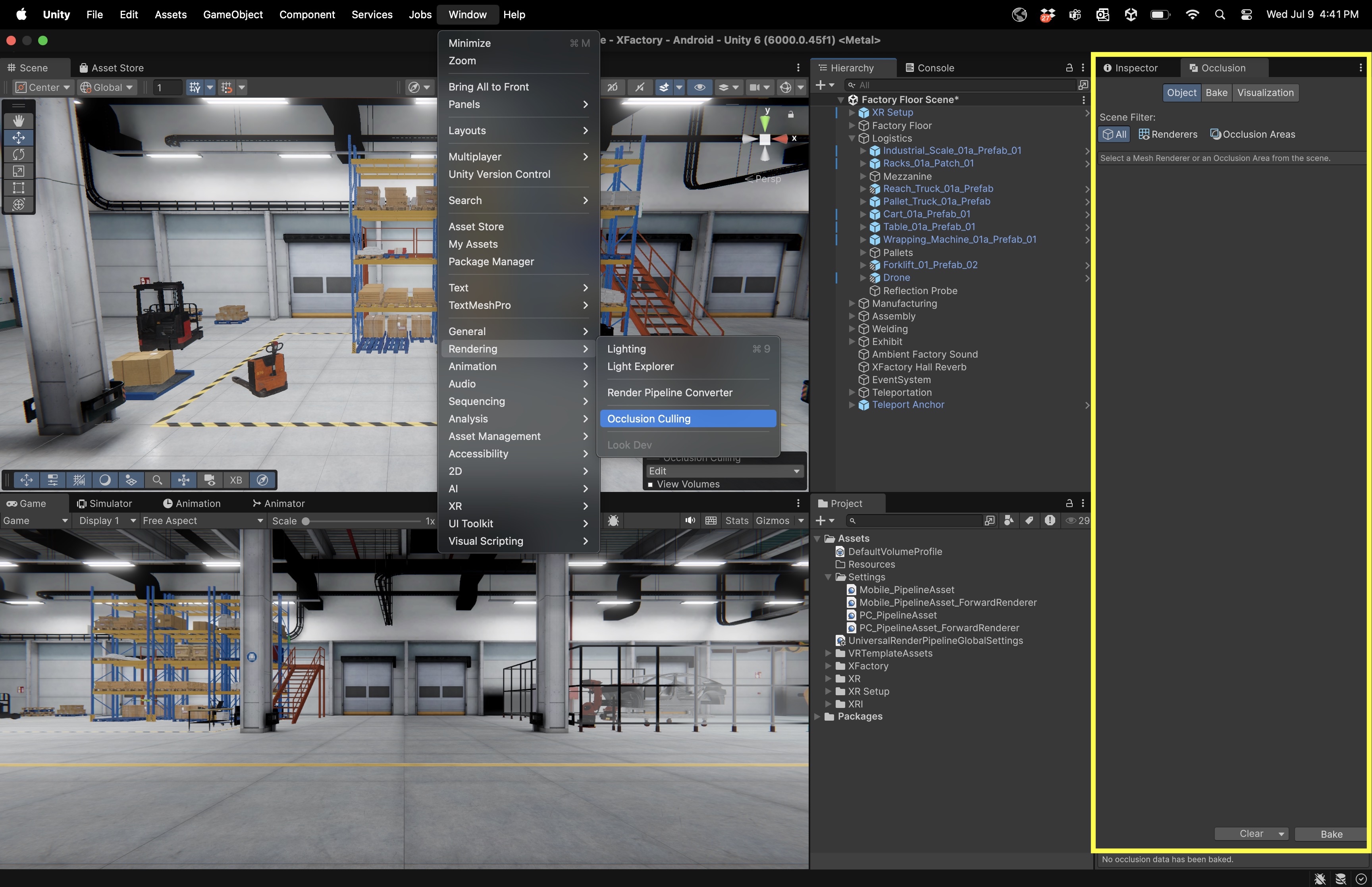

- Occlusion Culling: Open Window > Rendering > Occlusion Culling. Bake occlusion data to prevent rendering of objects blocked from view. This improves performance by skipping hidden geometry, which is especially useful in indoor scenes like XFactory.

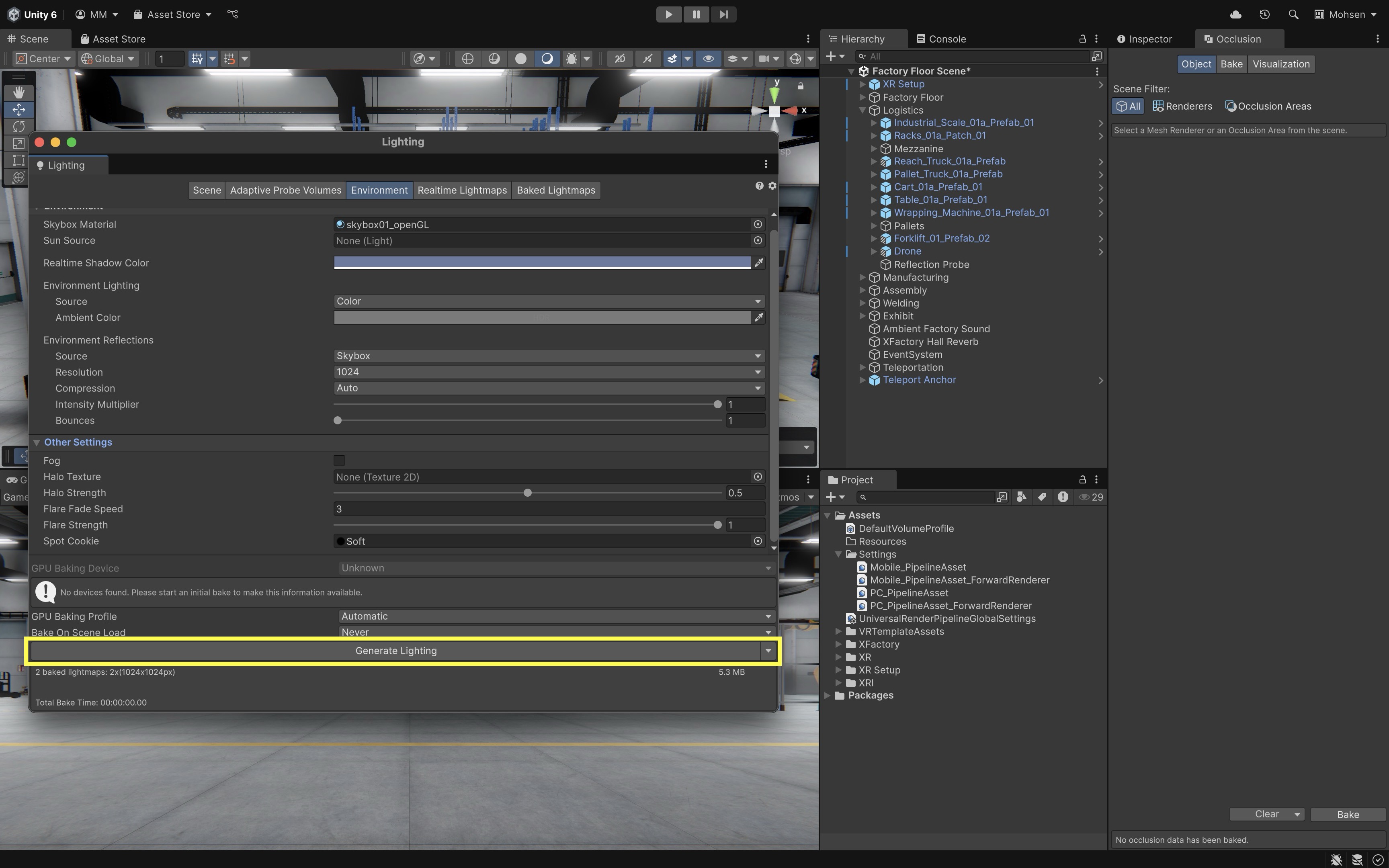

- Lightmapping: Use Baked Lighting for static geometry and Mixed Lighting with Shadowmask for semi-dynamic objects. Baked lighting provides high visual fidelity at a fraction of the cost of real-time lighting. To generate a lightmap, open the Lighting window (Window > Rendering > Lighting), scroll to the Lightmapping Settings section on the Scene tab, and click Generate Lighting. Make sure your static objects are marked as Lightmap Static (shown as Contribute GI in recent Unity versions), and verify that Baked Global Illumination is enabled under Mixed Lighting for successful baking.

- Profiler Monitoring: In Window > Analysis > Profiler, use the Rendering tab to find bottlenecks such as Camera.Render, Shadows.Render, or PostProcessing.Render. Regular use of the Profiler helps catch performance issues early and guides your optimization decisions with real data.

Key Takeaways

Designing effective VR experiences requires a balanced integration of spatial awareness, locomotion, and performance optimization. Understanding and applying world, local, and camera coordinate systems ensures that objects behave consistently and interactions feel natural, while proxemics helps maintain user comfort through respectful spatial boundaries. Implementing locomotion systems like teleportation, snap turning, and continuous movement demands careful input handling, modular design, and attention to user comfort. Finally, optimizing for VR performance—through batching, polygon reduction, texture management, efficient effects, and targeted frame rate goals—ensures smooth, immersive, and comfortable experiences, particularly on standalone headsets.